As AI tools rapidly evolve, how are they shaping the culture of architectural design? Keir Regan-Alexander, director of Arka.Works, explores the opportunities and tensions at the intersection of creativity and computation — challenging architects to rethink what it means to truly design in the age of AI

An awful lot has been happening recently in the AI image space, and I’ve written and rewritten this article about three times to try to account for everything. Every time I think it’s done, there seems to be another release that moves the needle. That’s why this article is in two parts: in this first part I look at recent changes from Gemini and GPT-4o, and in the second I take a deeper dive into Midjourney V7 and give a sense of how architects are using these models.

I’ll start by describing the developments and conclude by speculating on what I think they mean for the culture of design.

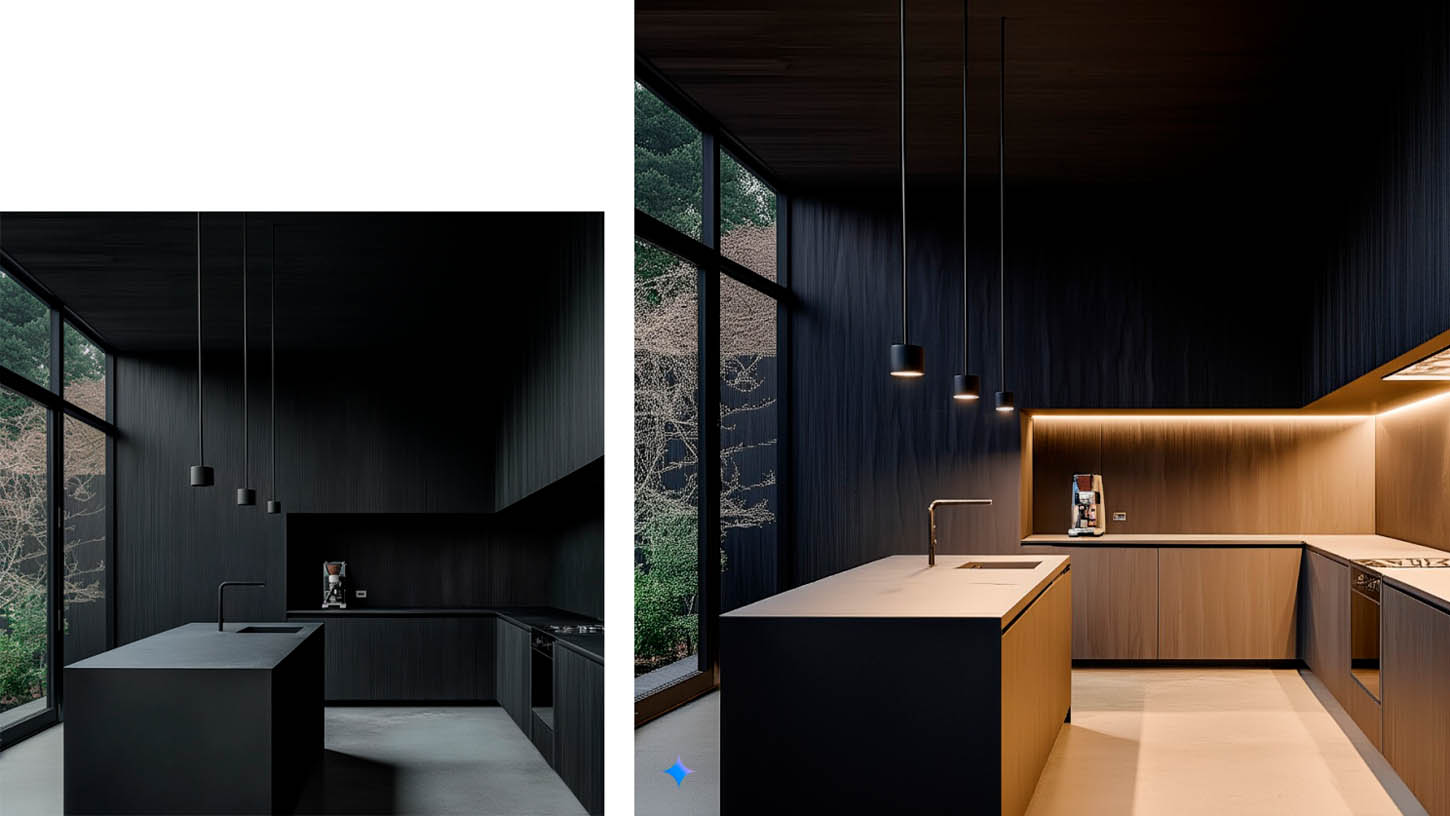

Right off the bat, let’s look at exactly what we’re talking about here. In the figure above you’ll see a conceptual image for a modern kitchen, all in black. This was created with a text prompt in Midjourney. After that I put the image into Gemini 2.0 (inside Google AI Studio) and asked it:

“Without changing the time of day or aspect ratio, with elegant lighting design, subtly turn the lights (to a low level) on in this image – the pendant lights and strip lights over the counter”

Why is this extraordinary?

Well, there is no 3D model, for a start. But look closer at the light sources and shadows. The model knew exactly where to place the lights. It knew the difference between a pendant light and a strip light and how each diffuses light. It then knew where to cast the multi-directional shadows, and that the material textures of each surface would have diffuse, reflective or caustic illumination qualities. Here’s another one (see below). This time I’m using GPT-4o in Image Mode.

“Create an image of an architectural sample board based on the building facade design in this image”

Why is this one extraordinary?

Again, there is no 3D model, and with only a couple of minor exceptions, the architectural language of specific ornamentation, materials, colours and proportion has been very well understood. The image is also (in my opinion) very charming. During the early stages of design projects, I have always enjoyed looking at the local “Architectural Taxonomy” of buildings in context, and this is a great way of representing it.

If someone in my team had made these images in practice, I would have been delighted to include them in my presentations and reports without further amendment.

A radical redistribution of skills

There is a lot of hype in AI, which can be tiresome, and I always want to be relatively sober in my outlook and avoid hyperbole. You will probably have seen your social media feeds fill with depictions of influencers as superhero toys in plastic wrappers, or maybe you’ve noticed a sudden improvement in someone’s graphic design skills and a surprisingly judicious use of fonts and infographics … that’s all GPT-4o Image Mode at work.

Find this article plus many more in the May / June 2025 Edition of AEC Magazine


So, despite the frenzy of noise, the surges of insensitivity towards creatives and the abundance of Studio Ghibli IP infringement surrounding this release – in case it needs saying just one more time – in the most conservative of terms, this is indeed a big deal.

The first time you get a response from these new models that far exceeds your expectations, it will shock you and you will be filled with a genuine sense of wonder. I imagine the reaction is similar to that of the first people to see a photograph in the early 19th century; it must have seemed genuinely miraculous and inexplicable. You feel the awe and wonder, then you walk away and start to think about what it means for creators, for design methods … for your craft … and you get a sinking feeling in your stomach. For a couple of weeks after trying these new models for the first time, I had a lingering feeling of sadness with a bit of fear mixed in.


I think this feeling was my brain finally registering the hammer dropping on a long-held hunch: that we are in an entirely new industry whether we like it or not, and even if we wanted to return to the world of creative work before AI, we couldn’t. Yes, we can continue to do things however we choose, but this new method now exists in the world and it can’t be put back in the box.

I’ll return to this internal conflict in my conclusion. If we set aside the emotional reaction for a moment, the early testing I’ve been doing in applying these models to architectural tasks suggests that the latest OpenAI and Google releases could both prove to be “epoch-defining” moments for architects and for all kinds of creatives who work in the image and video domains.

This is because the method of production and the user experience are so profoundly simple compared with existing practices that the barrier to entry for image production in many, many realms has now come right down.

Again, we may not like to think about this, having spent years honing our craft, yet the new reality is right in front of us and it’s not going anywhere. These new capabilities from image models can only lead to a permanent change in the working relationship between the commissioning client and the creative designer, because the means of graphical and image production have been completely reconfigured. In a radical act of forced redistribution, access to sophisticated skill sets is now being packaged up by the AI companies and made available to anyone who pays the licence fee.

What has not become distributed (yet) is wise judgement, deep experience in delivery, good taste, entirely new aesthetic ideas, emotional human insight, vivid communication and political diplomacy; all attributes that come with being a true expert and practitioner in any creative and professional realm.

These are qualities that for now remain inalienable, and they should give a hint as to where we have to focus our energies to ensure we can continue to deliver our highest value for our patrons, whoever they may be. For better or worse, soon they will have the option to try to do things without us.

Chat-based image creation & editing

For a while, attempts to produce or edit images within chat apps yielded only sub-standard results. Tools like DALL-E, which could be accessed only within otherwise text-based applications, had really fallen behind and were producing ‘instantly AI-identifiable images’ that felt generic and cheesy. When something is so obviously AI-created (and low quality), we instantly attribute a low value to it.

As a result, I was seeing designers flock instead to more sophisticated options like Midjourney v6.1, Stable Diffusion SDXL or Flux, where we can be very particular about control and styling, and where the results are often indistinguishable either from reality or from human creations. In the last couple of months that dynamic has been turned upside down; people can now achieve excellent imagery and edits directly within chat-based apps again.

The methods that came before, such as MJ (Midjourney), SD (Stable Diffusion) and Flux, are still remarkable and highly applicable to practice, but they all require a fair amount of technical nous to get consistent and repeatable results. I have found through my advisory work with practices that having a technical solution isn’t what matters most; what matters is having it packaged up and made enjoyable enough to use that it can actually change rigid habits.

A lesser tool with a great UX will beat a more sophisticated tool with a bad UX every time.

These more specialised AI image methods aren’t going away, and they still represent the most ‘configurable’ option, but text-based image editing is something anyone with a keyboard can do, and it is absurdly simple to perform.

More often than not, I’m finding the results are excellent and suitable for immediate use in project settings. If we take this idea further, we should also assume that our clients will soon be putting our images into these models themselves and asking for their ideas to be expressed on top…

We might soon hear our clients saying: “Try this with another storey”, “Try this but in a more traditional style”, “Try this but with rainscreen fibre cement cladding”, “Try this but with a cafe on the ground floor and move the entrance to the right”, “Try this but move the windows and make that one smaller”…

You get the picture.

Again, whether we like this idea or not (and I know architects will shudder even thinking of it), when our clients receive the results back from the model they are likely to be similarly impressed with themselves, and this can only lead to a change in briefing methods and working dynamics on projects.

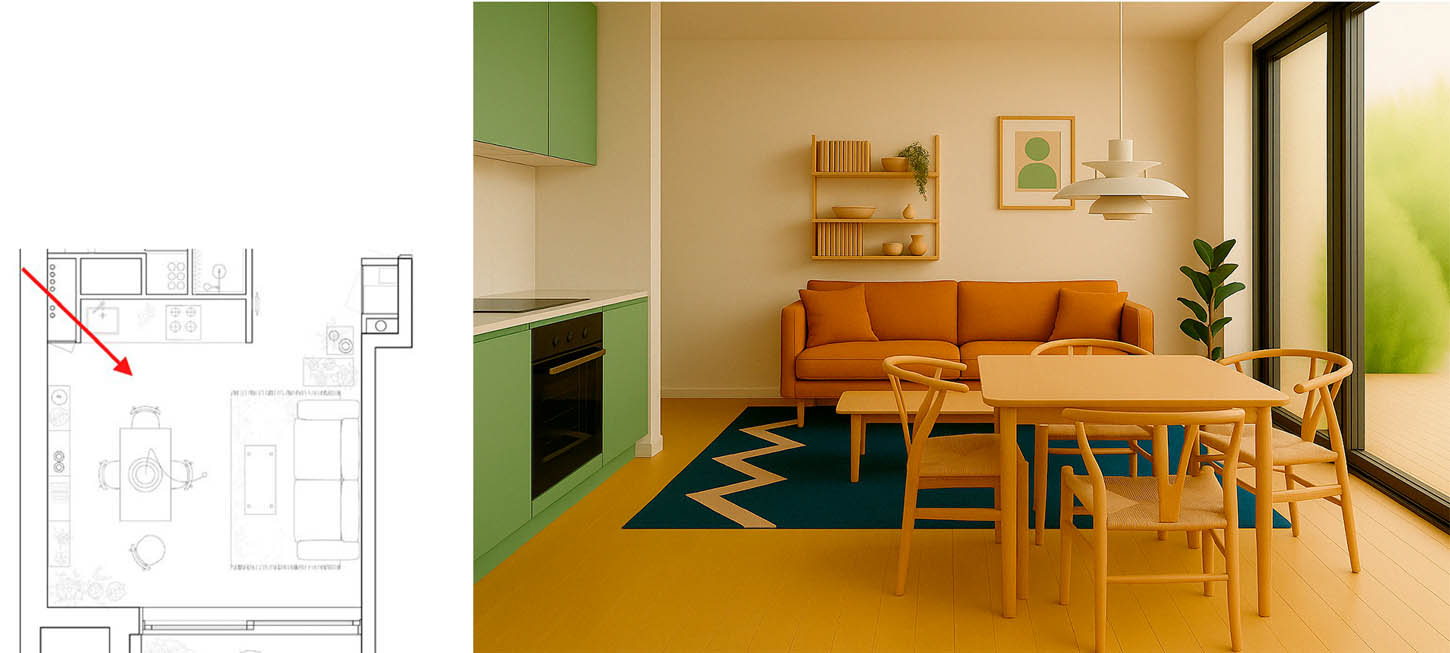

To give a sense of exactly what I mean, the image above shows an example of a new process we’re starting to see emerge, whereby a 2D plan can be roughly translated into a 3D image using GPT-4o in Image Mode. This process is definitely not easy to get right consistently (the model often makes errors), and it involves several prompting steps and a fair amount of nuance in technique. So far, I have also needed to follow up with manual edits.

Despite those caveats, we can assume that in the coming months the models will solve these friction points too. I first saw this idea validated by Amir Hossein Noori (co-founder of the AI Hub), and while I’ve managed to roughly reproduce his process, he gets full credit for working it out and explaining the steps to me. Suffice to say, it’s not as simple as it first appears!

Conclusion: the big leveller

1) Client briefing will change

My first key conclusion from the last month is that these techniques are so accessible that we should expect to see our clients briefing us with ever more visual material. We therefore shouldn’t be afraid or shocked when they do.

I don’t expect this shift to happen overnight, and I don’t think all clients will necessarily want to work in this way, but over time it’s reasonable to expect it to become much more prevalent, particularly among clients who are already inclined to make sweeping aesthetic changes when briefing on projects.

Takeaway: As clients decide they can exercise greater design control through image editing, we need to be clearer than ever about how our specialisms are differentiated and better able to explain how our value proposition sets us apart. We should be asking: what are the really hard, domain-specific niches that we can lean into?

2) Complex techniques will be accessible to all

Next, we need to reconsider whether technical hurdles really are a ‘defensive moat’ for our work. The most noticeable trend in the last couple of years is that things that appear profoundly complicated at first often go on to become much simpler to execute later.

As an example, a few months ago we had to use ComfyUI (a complex node-based interface for using Stable Diffusion) for ‘re-lighting’ imagery. This method remains optimal for control, but in many situations we can now just make a text request and let the model work out how to do it directly. Let’s extrapolate that trend into a generalisation: the harder things we do will gradually become easier for others to replicate.

Muscle memory is also a real thing in the workplace; it’s often so much easier to revert to the way we’ve done things in the past. People will say “Sure, it might be better or faster with AI, but it also might not – so I’ll just stick with my current method”. This is exactly the challenge I see everywhere, and the people who make progress are the ones who insist on proactively adapting their methods and systems.

The major challenge I observe for organisations through my advisory work is that behavioural adjustments to working methods, when you’re under stress or up against a deadline, are the real bottleneck. In other words, while a ‘technical solution’ may exist, change will only occur when people are willing to do something in a new way. I now do a lot of work on “applied AI implementation” and engagement across practice types and scales. I see again and again that there are pockets of technical innovation and skill among certain team members, but that this is not being translated into actual changes in the way people work across the broader organisation. This has a lot to do with access to suitable training, but also with a lack of awareness that improving working methods is much more about behavioural incentives than about ‘technical solutions’.


There is an abundance of groundbreaking new technology now available to practices, maybe even too much; we could be busy for a decade with the inventions of the last couple of years alone. But in the next period, the real difference maker will not be technical; it will be behavioural. How willing are you to adapt the way you work and try new things? How curious is your team? Are they being given permission to experiment? This emphasis on behaviour could prove a liability for larger practices and make smaller, more nimble practices more competitive.

Takeaway: Behavioural change is the biggest hurdle. As the technical skills needed for the ‘means of creative production’ become more accessible to all, the challenge for practices in the coming years may be less about technical solutions and more about their willingness and ability to adjust behaviour and culture. The teams who succeed won’t be those with the most technically accomplished solutions; more likely they will be those who achieve the most widespread and practical adaptations of their working systems.

3) Shifting culture of creativity

I’ve seen a whole spectrum of reactions towards Google and OpenAI’s latest releases, and I think it’s likely that these new techniques are causing many designers a huge amount of stress as they consider the likely impacts on their work. I have felt the same apprehension many times too. I know that a number of ‘crisis meetings’ have taken place in creative agencies, for example, and it is hard for me to see these model releases as anything other than a direct threat to at least a portion of their scope of creative work.

This is happening across all industries, not least computer science; after all, LLMs can write exceptional code too. From my perspective, it’s certainly coming for architecture as well, and if we are to maintain the architect’s central role in design and placemaking, we need to shift our thinking and current approach, or our moat will gradually be eroded too.

The relentless progression of AI technology cares little about our personal career goals and business plans, and when I consider the sense of inevitability of it all, I’m left with a strong feeling that the best strategy is actually to run towards the opportunities that change brings, even if that means feeling uncomfortable at first.

Among the many posts I’ve seen from thought leaders and influencers celebrating recent developments in pursuit of attention and engagement, I can see a cynical thread emerging … of (mostly) tech and sales people patting themselves on the back for having “solved art”.

The posts I really can’t stand are the cavalier ones that actually seem to rejoice at the idea of not needing creative work anymore, salivating at the budget savings they will make … they seem to think you can just order “creative output” off a menu and that these new image models are a cure for some kind of long-held frustration with creative people.

Takeaway: The model “output” is indeed extraordinarily accomplished and quickly produced, but creative work is not something that is “solvable”; it either moves you or it doesn’t. Design is similar: we try to explain objectively what great design quality is, but it’s hard. Certainly it fits the brief, yes, but the intangible and emotional reasons are more powerful and harder to explain. We know it when we see it.

While AIs can exhibit synthetic versions of our feelings, for now they represent an abstracted shadow of humanness – a useful imitation for sure, and I see widespread applications in practice, but in the creative realm I think it’s unlikely to nourish us in the long term. The next wave of models may begin to ‘break rules’ and explore entirely new problem spaces, and when they do, I will have to reconsider this perspective.

We mistake the mastery of a particular technique for creativity and originality, but the thing about art is that it comes from humans who’ve experienced the world, felt the emotional impulse to share an authentic insight and cared enough to express themselves using various mediums. Creativity means making something that didn’t exist before.

That essential impulse, the genesis, the inalienably human insight and direction, is still, for me, everything. As we see AI creep into more and more creative realms (like architecture), we need to be much more strategic about how we value the specifically human parts, and for me that means ceasing to sell our time and instead learning to sell our value.

In part 2 I will look in depth at Midjourney and how it’s being used in practice, including the latest release (V7) in more detail. Until then, thanks for reading.

Catch Keir Regan-Alexander at NXT BLD

Keir Regan-Alexander is director at Arka Works, a creative consultancy specialising in the Built Environment and the application of AI in architecture.

He will be speaking on AI at AEC Magazine’s NXT BLD in London on 11 June.