If 2023 was the year that Autodesk announced its ambitions for AI, 2024 was when it fleshed out some of the details. But, as Greg Corke reports, there’s still a long journey ahead.

The Autodesk AI brand debuted at Autodesk University in Las Vegas last year, but the launch lacked real substance. Despite a flashy logo, there were no significant new AI capabilities to back it up. The event seemed more like a signal of Autodesk’s intent to put greater focus on AI in the future, building on its past achievements. It came at a time when ‘AI-anything’ was boosting the share valuations of listed companies.

Fast forward 12 months to Autodesk University 2024 in San Diego, where the company delivered more clarity on its evolving AI strategy, both on stage and behind the scenes in press briefings. Autodesk also introduced a sprinkling of new AI features, many focused on modelling productivity, signalling that progress is being made. However, most of these were for manufacturing, with little to excite customers in Architecture, Engineering and Construction (AEC) beyond what had already been announced for Forma.

In his keynote, CEO Andrew Anagnost took a cautious tone, warning that it’s still early days for AI despite the growing hype from the broader tech industry.

Anagnost set the scene for the future. “We’re looking at how you work. We’re finding the bottlenecks. We’re getting the right data flowing to the right places, so that you can see past the hype to where there’s hope, so that you can see productivity rather than promises, so that you can see AI that solves the practical, the simple, and dare I even say, the boring things that get in your way and hold back you and your team’s productivity.”

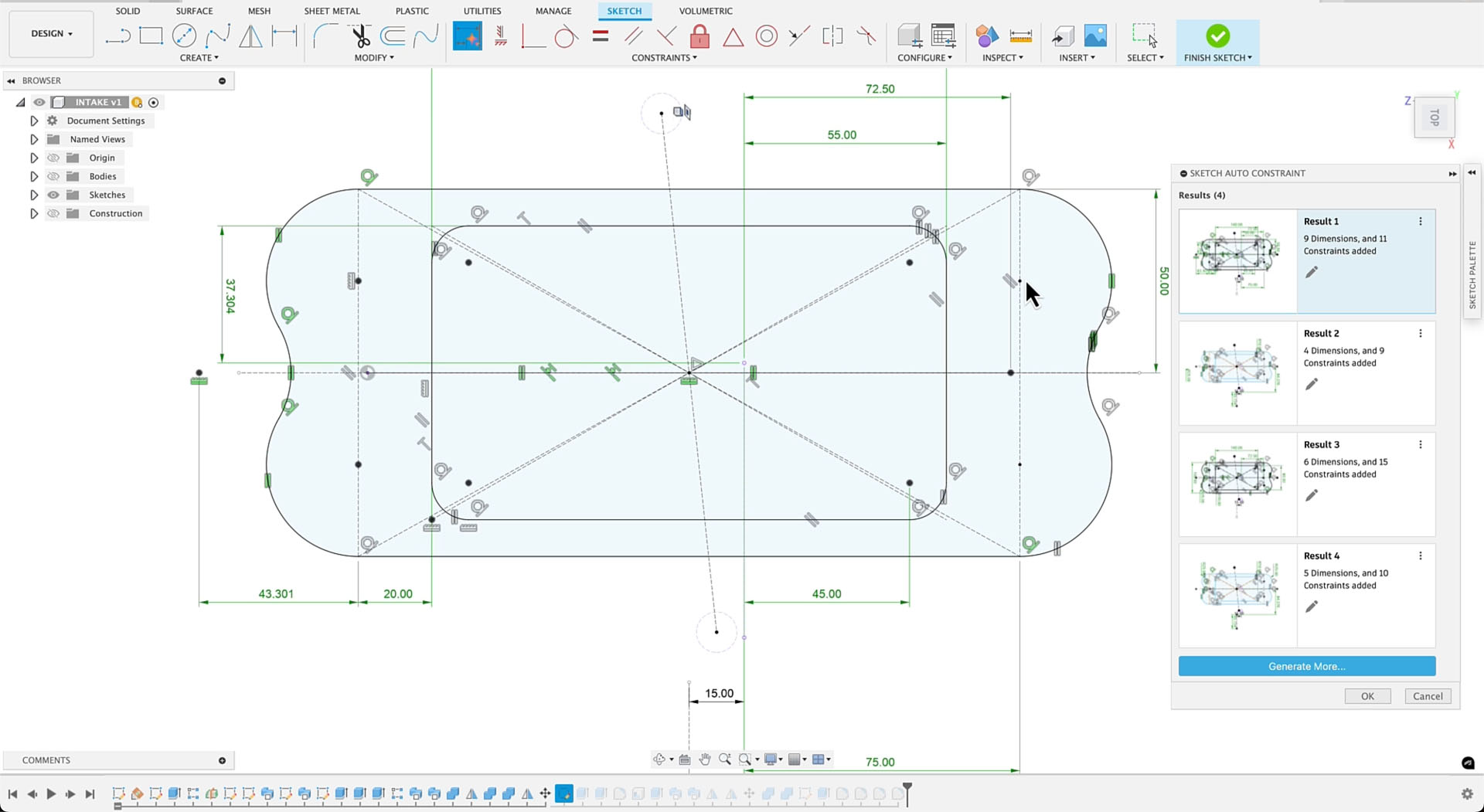

One of those ‘boring things’ is applying sketch constraints, which govern a sketch’s shape and geometric properties in parametric 3D CAD software like Autodesk Fusion, which is used for product design and manufacturing.

Fusion’s new AI-powered sketch auto-constrain feature streamlines this process by analysing sketches to detect intended spatial relationships between aspects of the design.
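Autodesk has not said how its model infers intent. As a rough, non-AI illustration of what ‘detecting intended spatial relationships’ means, the Python sketch below takes 2D line segments and proposes horizontal, vertical, parallel and perpendicular constraints wherever a line falls within a tolerance of one. The 2° tolerance and all function names are assumptions for illustration, not Fusion’s behaviour.

```python
import math
from itertools import combinations

# Assumed tolerance: lines within 2 degrees of a relationship are treated as
# intended to satisfy it. Not a value taken from Fusion.
ANGLE_TOL_DEG = 2.0

Point = tuple[float, float]
Segment = tuple[Point, Point]


def line_angle(seg: Segment) -> float:
    """Direction of an undirected line segment in degrees, folded into [0, 180)."""
    (x1, y1), (x2, y2) = seg
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def angle_gap(a: float, b: float) -> float:
    """Smallest angle between two undirected lines, in the range [0, 90]."""
    d = abs(a - b) % 180.0
    return min(d, 180.0 - d)


def infer_constraints(segments: dict[str, Segment]) -> list[tuple[str, ...]]:
    """Propose constraints for near-horizontal/vertical lines and near-parallel/perpendicular pairs."""
    angles = {name: line_angle(seg) for name, seg in segments.items()}
    constraints: list[tuple[str, ...]] = []
    axis_locked: set[str] = set()

    for name, a in angles.items():
        if angle_gap(a, 0.0) <= ANGLE_TOL_DEG:
            constraints.append(("horizontal", name))
            axis_locked.add(name)
        elif angle_gap(a, 90.0) <= ANGLE_TOL_DEG:
            constraints.append(("vertical", name))
            axis_locked.add(name)

    for (n1, a1), (n2, a2) in combinations(angles.items(), 2):
        # Two axis-locked lines are already related; another constraint would be redundant.
        if n1 in axis_locked and n2 in axis_locked:
            continue
        gap = angle_gap(a1, a2)
        if gap <= ANGLE_TOL_DEG:
            constraints.append(("parallel", n1, n2))
        elif gap >= 90.0 - ANGLE_TOL_DEG:
            constraints.append(("perpendicular", n1, n2))
    return constraints


sketch = {
    "base": ((0.0, 0.0), (10.0, 0.1)),   # almost horizontal
    "brace": ((0.0, 0.0), (5.0, 5.0)),   # 45 degrees
    "strut": ((1.0, 0.0), (6.0, 5.1)),   # almost parallel to brace
}
print(infer_constraints(sketch))  # [('horizontal', 'base'), ('parallel', 'brace', 'strut')]
```

Fixed thresholds like this are brittle, which is presumably why Autodesk is turning to a learned model to judge what the designer actually meant.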

Automatically constraining sketches is just the starting point in Autodesk’s broader vision to use AI to optimise and automate 3D modelling workflows. As Anagnost indicated, the company is exploring how AI models can be taught to understand deeper elements of 3D models, including features, constraints, and joints.

At AU, no reference was made to similar modelling productivity tools being developed for Autodesk’s AEC products, including Forma. However, Amy Bunszel, executive VP, AEC at Autodesk, told AEC Magazine that the AEC team will learn from what happens in Fusion.

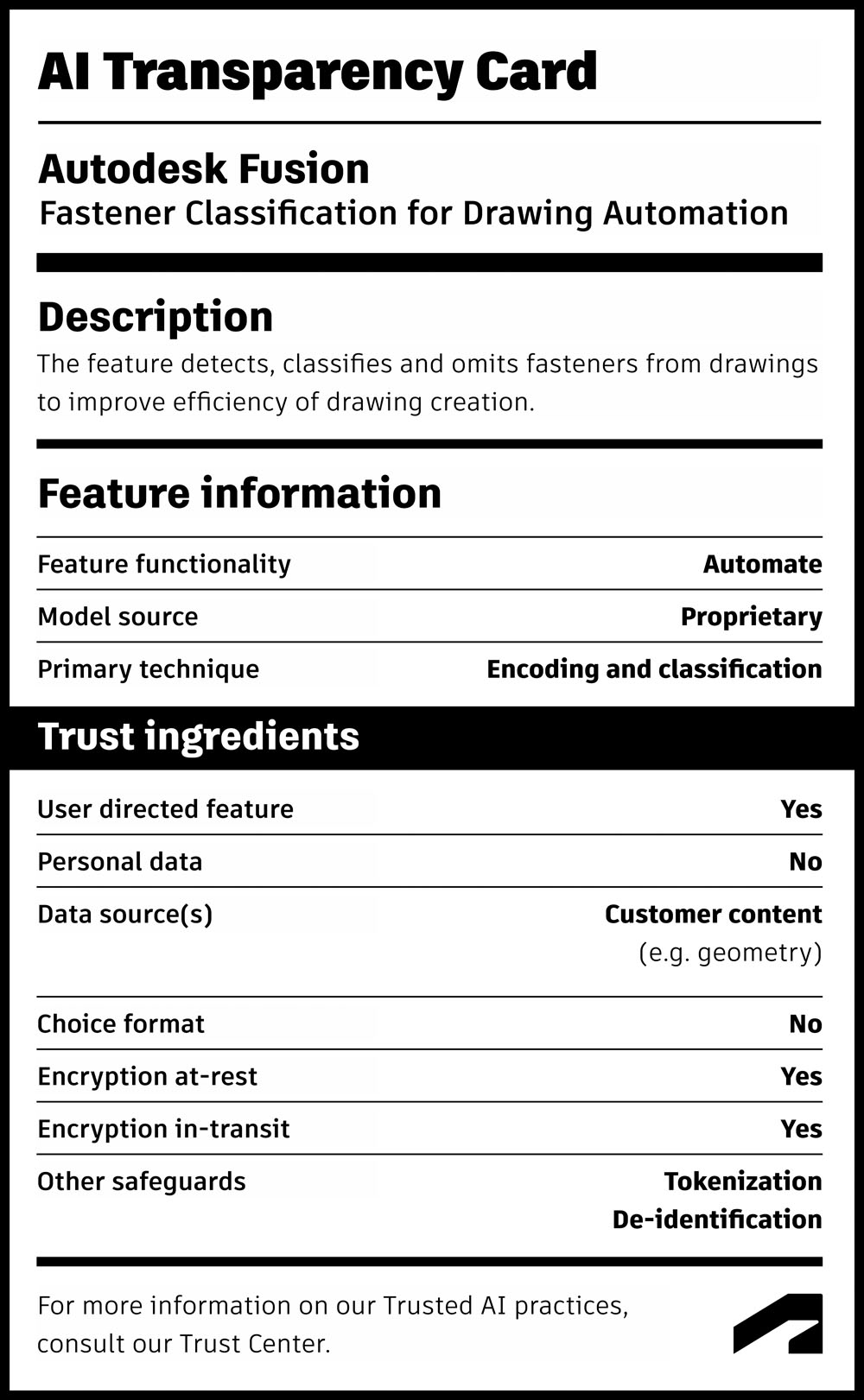

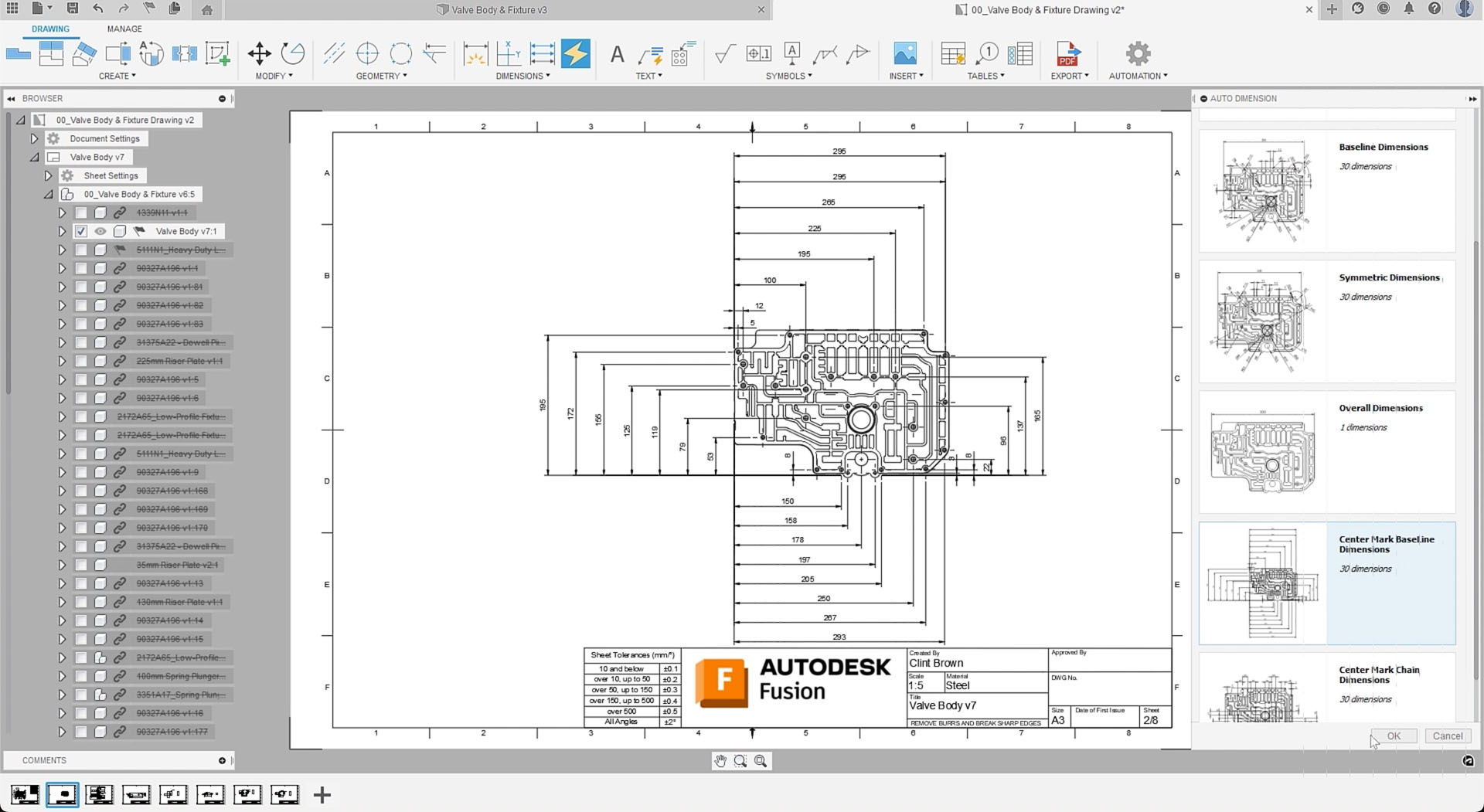

Another ‘boring’ task ripe for automation is the production of drawings. This labour-intensive process is currently a hot topic across the CAD sector (read this AEC Magazine article).

This capability is also coming first to Fusion, Autodesk’s product design and manufacturing software. With Drawing Automation for Fusion, Autodesk is using AI to automate the process, right down to the precise placement of annotations.

With the click of a button, the AI examines 3D models and does the time-consuming work of generating the 2D drawings and dimensions required to manufacture parts. The technology has evolved since its initial release earlier this year and now accelerates and streamlines this process even more by laying out drawing sheets for each component in a model and applying a style. Early next year, the technology will be able to recognise standard components like fasteners, remove them from drawing sets, and automatically add them to the bill of materials for purchase.
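Autodesk hasn’t detailed how Fusion will recognise standard parts. The sketch below only illustrates the bookkeeping described above: standard components are dropped from the set of parts that need drawings and counted into a purchased-parts bill of materials. A simple keyword match stands in for the AI recognition step, and the `Component` type, keyword list and part numbers are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    part_number: str


# Hypothetical stand-in for AI recognition: a naive keyword match on the part name.
STANDARD_KEYWORDS = ("bolt", "screw", "nut", "washer", "rivet")


def is_standard(component: Component) -> bool:
    name = component.name.lower()
    return any(keyword in name for keyword in STANDARD_KEYWORDS)


def split_for_documentation(
    components: list[Component],
) -> tuple[list[Component], dict[str, int]]:
    """Return (unique parts that need drawings, purchased-part BOM as part number -> quantity)."""
    to_draw: list[Component] = []
    drawn: set[str] = set()
    bom: Counter = Counter()
    for c in components:
        if is_standard(c):
            bom[c.part_number] += 1          # bought in, so it goes on the BOM, not a drawing
        elif c.part_number not in drawn:     # one drawing per unique custom part
            drawn.add(c.part_number)
            to_draw.append(c)
    return to_draw, dict(bom)


assembly = [
    Component("Bracket", "BR-01"),
    Component("M6 bolt", "ISO4017-M6"),
    Component("M6 bolt", "ISO4017-M6"),
    Component("Bracket", "BR-01"),
    Component("Base plate", "BP-02"),
    Component("M6 washer", "ISO7089-6"),
]
parts, bom = split_for_documentation(assembly)
print([p.name for p in parts])  # ['Bracket', 'Base plate']
print(bom)                      # {'ISO4017-M6': 2, 'ISO7089-6': 1}
```

A keyword match like this would misfire on a part called ‘Walnut trim’, which is exactly the kind of ambiguity a trained recognition model is meant to resolve.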

Once again, this feature will appear first in Fusion, but sources have confirmed plans to extend automated drawing capabilities to Revit, a significant development given the BIM tool’s widespread use for documentation. There’s also potential for autonomous drawings in Forma, although Forma would first need the ability to generate drawings. During the AU press conference, Anagnost hinted that drawing capabilities might be in Forma’s future, which, if realised, could potentially affect how much customers rely on Revit as a documentation tool in the long term.

Both of Autodesk’s new AI-powered features are designed to automate complex, repetitive, and error-prone processes, significantly reducing the time that skilled designers spend on manual tasks. This allows them to focus on more critical, high-value activities. But, as Anagnost explained, Autodesk is also exploring how AI can be used to fundamentally change the way people work.

One approach is to enhance the creative process. Form Explorer, a new automotive-focused generative AI tool for Autodesk Alias, is designed to bridge the gap between 2D ideation and traditional 3D design. It learns from a company’s historical 3D designs, then applies that company’s unique styling language.

Lessons learned from Form Explorer are also helping Autodesk augment and accelerate creativity in other areas of conceptual design.

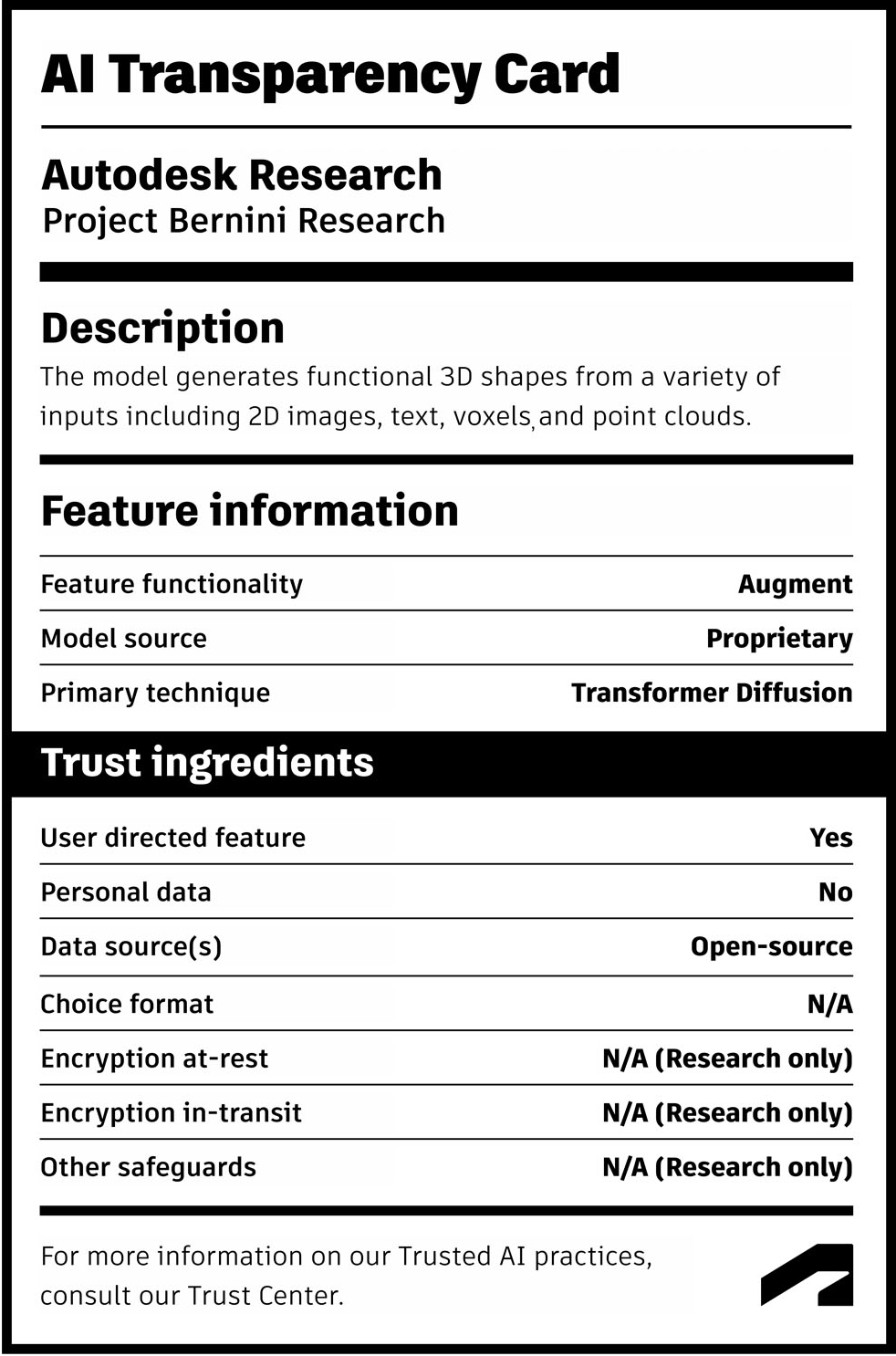

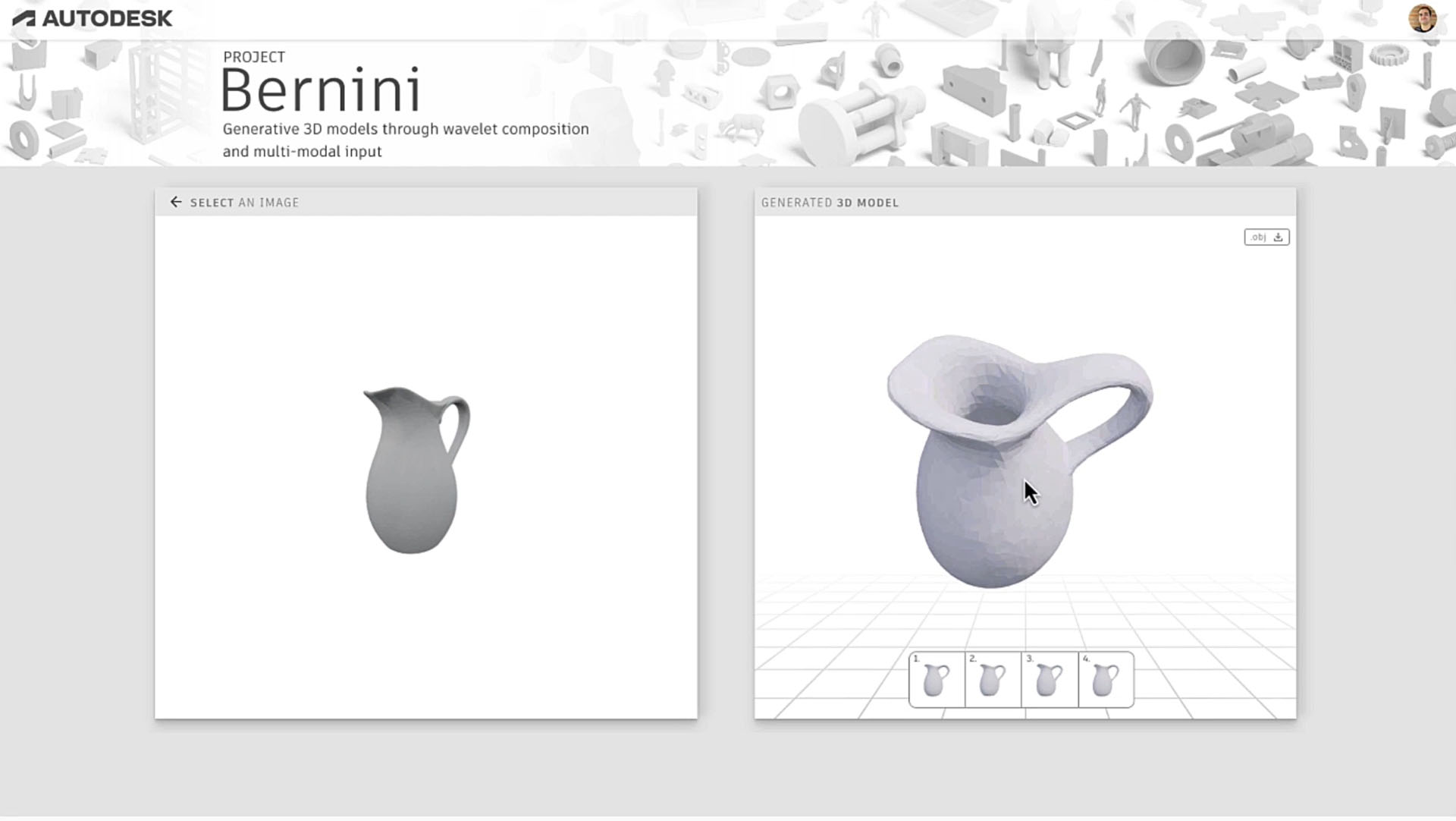

Project Bernini is an experimental proof-of-concept research project that uses generative AI to quickly generate 3D models from a variety of inputs including a single 2D image, multiple images showing different views of an object, point clouds, voxels, and text. The generated models are designed to be ‘functionally correct’, so a pitcher, for example, will be empty inside. As the emphasis is on the geometry, Bernini does not apply colours and textures to the model.

Project Bernini is not designed to replace manual 3D modelling. “Bernini is the thing that helps you get to that first stage really quickly,” said Mike Haley, senior VP of research at Autodesk. “Nobody likes the blank canvas.”

Project Bernini is industry agnostic and is being used to explore practical applications for manufacturing, AEC, and media and entertainment. At AU, however, the emphasis was on manufacturing, where one of the ultimate aims is to learn how to produce precise geometry that can be converted into editable geometry in Fusion.

But there’s a long way to go before this is a practical reality. There is currently no established workflow, and Bernini has been trained on a limited set of licensed public data that cannot be used commercially.

AI for AEC

Autodesk is also working on several AI technologies specific to AEC. Nicolas Mangon, VP, AEC industry strategy at Autodesk, gave a brief glimpse of an outcome-based BIM research project which he described as Project Bernini for AECO.

He showed how AI could be used to help design buildings made from panellised wood systems, by training it on past BIM projects stored in Autodesk Docs. “[It] will leverage knowledge graphs to build a dataset of patterns of relationship between compatible building components, which we then use to develop an auto complete system that predicts new component configurations based on what it learned from past projects,” he said.
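Mangon didn’t describe the implementation. As a deliberately simplified stand-in for the knowledge-graph idea, the sketch below counts which component types were connected to each other in past projects and suggests the most frequent neighbours for a component a designer has just placed. The component names and project data are invented, and a real system would capture far richer relationships than simple connection counts.

```python
from collections import Counter, defaultdict

# A past project is represented, hypothetically, as a list of connections
# between component types (the edges of a simple relationship graph).
Project = list[tuple[str, str]]


def build_pattern_graph(projects: list[Project]) -> dict[str, Counter]:
    """Count how often each pair of component types was connected across past projects."""
    graph: defaultdict = defaultdict(Counter)
    for connections in projects:
        for a, b in connections:
            graph[a][b] += 1
            graph[b][a] += 1
    return dict(graph)


def suggest_next(graph: dict[str, Counter], placed: str, k: int = 3) -> list[str]:
    """Suggest up to k component types most often connected to the one just placed."""
    neighbours = graph.get(placed, Counter())
    return [name for name, _count in neighbours.most_common(k)]


past_projects = [
    [("wall_panel", "floor_cassette"), ("wall_panel", "window_module")],
    [("wall_panel", "floor_cassette"), ("floor_cassette", "glulam_beam")],
]
graph = build_pattern_graph(past_projects)
print(suggest_next(graph, "wall_panel"))  # ['floor_cassette', 'window_module']
```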

Mangon showed how the system suggests multiple options to complete the model driven by outcomes such as construction costs, fabrication time and carbon footprint. This, he said, ensures that when the system proposes the best options, the results are not only constructible, but also align with sustainability, time and cost targets.
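How the system ranks its suggestions wasn’t explained. One standard way to compare options against several competing outcomes is Pareto dominance: an option is discarded only if another is at least as good on every outcome and strictly better on at least one. The sketch below applies that filter to construction cost, fabrication time and embodied carbon; the option names and figures are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignOption:
    name: str
    cost: float        # construction cost (lower is better)
    fab_days: float    # fabrication time in days (lower is better)
    carbon_t: float    # embodied carbon in tonnes CO2e (lower is better)

    def outcomes(self) -> tuple[float, float, float]:
        return (self.cost, self.fab_days, self.carbon_t)


def dominates(a: DesignOption, b: DesignOption) -> bool:
    """True if a is no worse than b on every outcome and strictly better on at least one."""
    pairs = list(zip(a.outcomes(), b.outcomes()))
    return all(x <= y for x, y in pairs) and any(x < y for x, y in pairs)


def pareto_front(options: list[DesignOption]) -> list[DesignOption]:
    """Keep only the options that no other option dominates."""
    return [o for o in options if not any(dominates(p, o) for p in options if p is not o)]


options = [
    DesignOption("A", cost=1.00e6, fab_days=30, carbon_t=410),
    DesignOption("B", cost=1.15e6, fab_days=24, carbon_t=380),
    DesignOption("C", cost=1.20e6, fab_days=35, carbon_t=450),  # worse than A on everything
]
print([o.name for o in pareto_front(options)])  # ['A', 'B']
```

The result is a shortlist of trade-offs rather than a single ‘best’ answer; choosing between A and B still depends on which targets a project prioritises.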

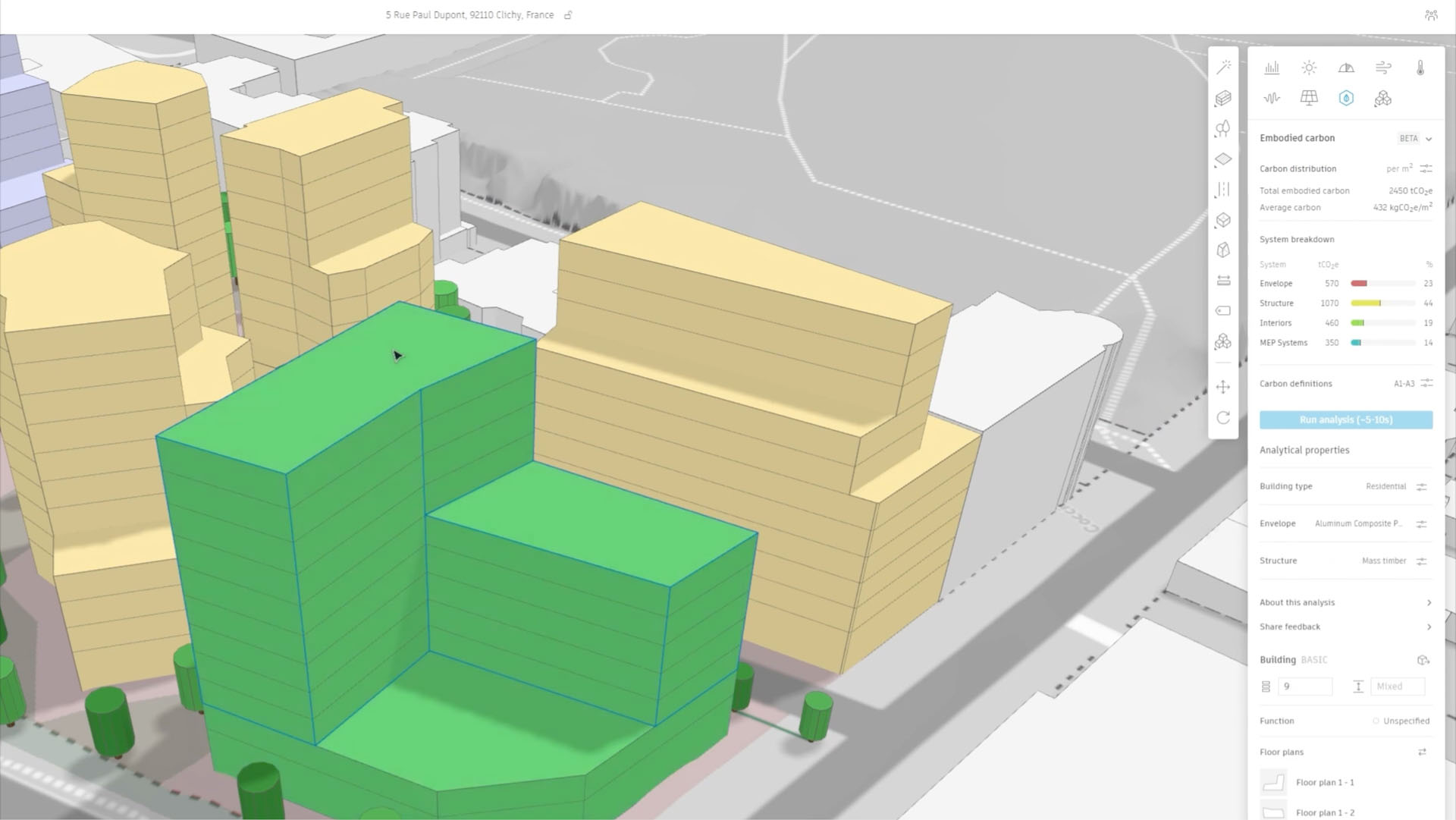

Another AEC-focused AI tool, currently in beta, is Embodied Carbon Analysis in Autodesk Forma, which is designed to give rapid, site-specific environmental design insights. “It lets you quickly see the embodied carbon impact at the earliest conceptual design phase, giving you the power to make changes when the cost is low,” said Bunszel.

The software uses EHDD’s C.Scale API, which applies machine learning models based on real data from thousands of buildings. The technology helps designers balance trade-offs between embodied carbon, sun access, sellable area, outdoor comfort and other factors.

Embodied Carbon Analysis in Autodesk Forma follows other AI-powered features within the software. With ‘Rapid Noise Analysis’ and ‘Rapid Wind Analysis’, for example, Forma uses machine learning to predict ground noise and wind conditions in real time.

Autodesk AI is also providing insights in hydraulic modelling through Autodesk InfoDrainage. As Bunszel explained, “You can place a pond or swale on your site and quickly see the impact on overland flows and the surrounding flood map.”

Simple AI

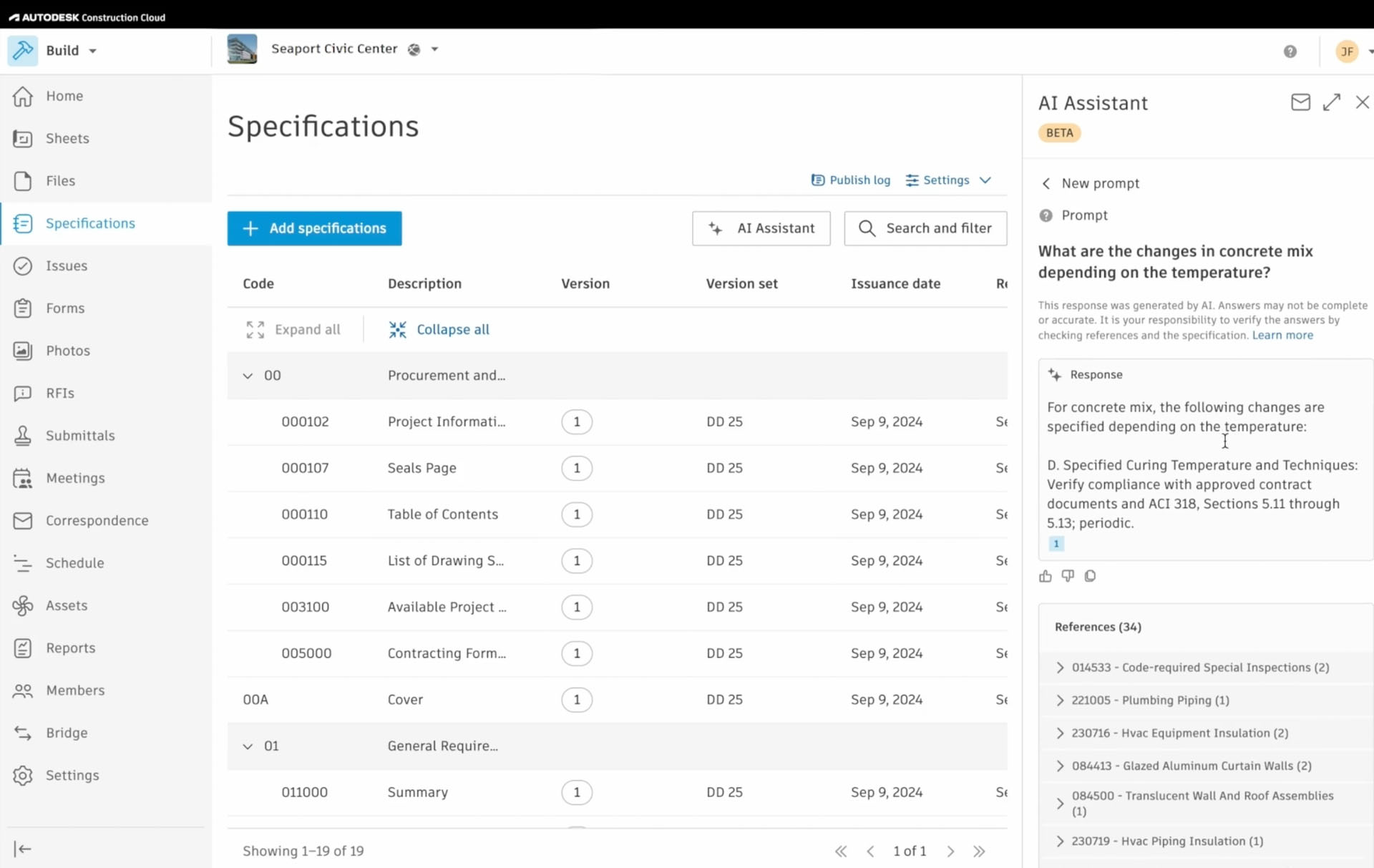

Autodesk is also diving into the world of general purpose AI through the application of Large Language Models (LLMs). With Autodesk Assistant, customers can use natural language to ask questions about products and workflows.

Autodesk Assistant has been available on Autodesk’s website for some time and is now being rolled out gradually inside Autodesk products.

“The important thing about the system, is it’s going to be context-aware, so it’s understanding what you’re working on, what project you’re on, what data you’ve run, maybe what you’ve done before, where you are within your project, that kind of thing,” said Haley.

With the beta release of Autodesk Assistant in Autodesk Construction Cloud, for example, users can explore their specification documents through natural language queries. “You can ask the assistant using normal everyday language to answer questions, generate lists or create project updates,” said Bunszel, adding that it gives quick access to details in specifications that would usually take lots of clicking, page turning or highlighting to find.

Getting connected

Like most software developers, Autodesk is harnessing the power of LLMs and vision models, such as ChatGPT and Gemini. “We can use them, we can adapt them, we can fine tune them to our customers’ data and workflows,” said Haley, citing the example of Autodesk Assistant.

But, as Haley explained, language and vision models don’t have any sense of the physical world, so Autodesk is focusing much of its research on developing a family of foundation models that will eventually deliver CAD geometry with ‘high accuracy and precision’.

Autodesk’s foundation models are being trained to understand geometry, shape, form, materials, as well as how things are coupled together and how things are assembled.

“Then you also get into the physical reasoning,” added Haley. “How does something behave? How does it move? What’s the mechanics of it? How does a fluid flow over the surface? What’s the electromechanical properties of something?”

According to Anagnost, the ultimate goal for Autodesk is to get all these foundation models talking together, but until this happens, you can’t change the paradigm.

“Bernini will understand the sketch to create the initial geometry, but another model might understand how to turn that geometry into a 3D model that actually can be evolved and changed in the future,” he said. “One might bring modelling intelligence to the table, one might bring shape intelligence to the table, and one might be sketch driven, the other one might be sketch aware.”

To provide some context for AEC, Autodesk CTO Raji Arasu said, “In the future, these models can even help you generate multiple levels of detail of a building.”

AI model training

Model training is a fundamental part of AI, and Anagnost made the point that data must be separated from methods. “You have to teach the computer to speak a certain language,” he said. “We’re creating training methods that understand 3D geometry in a deep way. Those training methods are data independent.”

With Project Bernini Autodesk is licensing public data to essentially create a prototype for the future. “We use the licence data to show people what’s possible,” said Anagnost.

For Bernini, Autodesk claims to have used the largest set of 3D training data ever assembled, comprising 10 million examples, but the generated forms that were demonstrated (a vase, a chair, a spoon, a shoe and a pair of glasses) were still primitive. As Tonya Custis, senior director, AI research, admitted, there simply isn’t enough 3D data anywhere to build a model at the required scale, noting that the really good large language and image models are trained on the entire internet.

“It’s very hard to get data at scale that very explicitly ties inputs to outputs,” she said. “If you have a billion cat pictures on the internet that’s pretty easy to get that data.”

The billion-dollar question is where will Autodesk get its training data from? At AU last year, several customers expressed concern about how their data might be used by Autodesk for AI training.

This was a hot topic again this year and in the press conference Anagnost provided more clarity. He told journalists that for a generative AI technology like Bernini, where there’s a real possibility it could impact on intellectual property, customers will need to opt in.

But that’s not the case for so-called ‘classic productivity’ AI features like sketch auto-constrain or automated drawings. “No one has intellectual property on how to constrain a sketch,” said Anagnost. “[For] that we just train away.”

This point was echoed by Hooper in relation to automated drawings. “Leveraging information that we have in Fusion about how people actually annotate drawings is not leveraging people’s core IP,” he said.

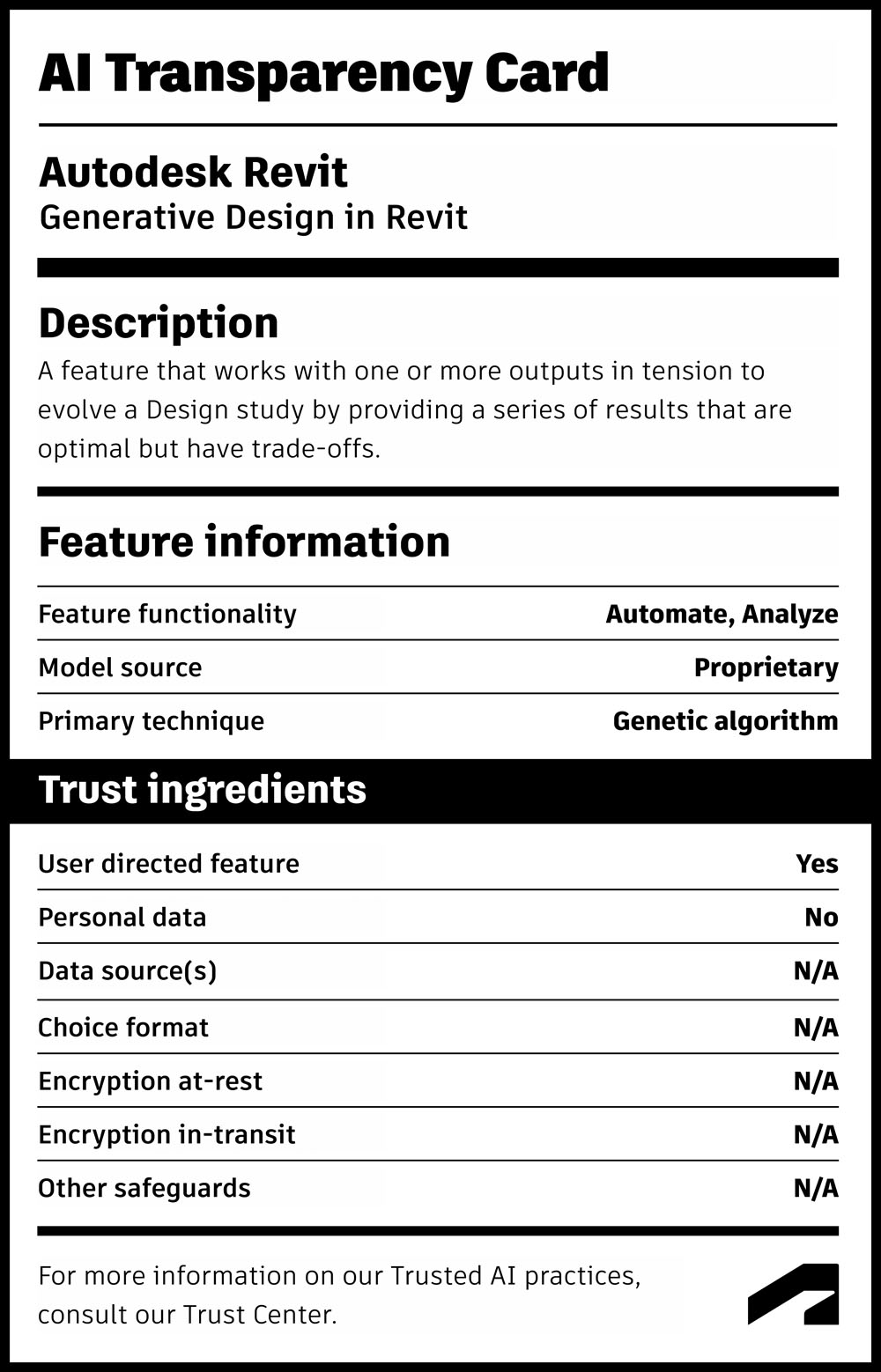

To help bring more transparency to how Autodesk is using customer data for training its AI models, Autodesk has created a series of Autodesk AI transparency cards which will be made available for each new AI feature. “These labels will provide you a clear overview of how each AI feature is built, the data that is being used, and the benefits that the feature offers,” said Arasu.

Of course, some firms will not want to share their data under any circumstances. Anagnost believes that this may lead to a bifurcated business model with customers, where Autodesk builds some foundational intelligence into its models and then licenses them to individual customers so they can be fine-tuned with private data.

AI compute

AI requires substantial processing power to function efficiently, particularly when it comes to training. With Autodesk AI, everything is currently being done in the cloud. This can be expensive but, as Anagnost boasted: “We have negotiating power with AWS that no customer would have with AWS.”

Relying on the cloud means that in order to use features in Fusion like auto constraints or drawing automation, you must be connected to the Internet.

This might not be the case forever, however. Arasu told AEC Magazine that AI inferencing [the process of using a trained AI model to make predictions or decisions based on new data] could go local. She noted that some of Autodesk’s customers have powerful workstations on their desktops, implying that relying on the cloud for compute leaves those local resources underused.

All about the data

It goes without saying that data is a critical component of Autodesk’s AI strategy, particularly when it comes to what Autodesk calls outcome-based BIM. As Mangon explained, “Making your data from our existing products available to the Forma Industry Cloud will create a rich data model that powers an AI-driven approach centred on project outcomes, so you can optimise decisions about sustainability, cost, construction time and even asset performance at the forefront of the project.”

To fully participate in Autodesk’s AI future, customers will need to get their data into Autodesk Docs, the cloud-based common data environment. Some customers are reluctant to do this for fear of being locked in, with their data accessible only through APIs.

Autodesk Docs can be used to manage data from AutoCAD, Revit, Tandem, Civil 3D and Autodesk Workshop XR, with support for Forma coming soon. It also integrates with third-party applications including Rhino, Grasshopper, Microsoft Power BI and, soon, Tekla Structures.

The starting point for all of this is files but, over time, with the Autodesk AEC Data Model API, some of this data will become granular. The AEC Data Model API enables the break-up of monolithic files, such as Revit RVT and AutoCAD DWG, into ‘granular object data’ that can be managed at a sub-file level.

“With the AEC Data Model API, you can glimpse into the future where data is not just an output, but a resource,” said Sasha Crotty, Sr. Director, AEC Data, Autodesk. “We are taking the information embedded in your Revit models and making it more accessible, empowering you to extract precisely the data you need without having to dive back into the model each time you need it.”

Crotty gave the example of US firm Avixi, which is using the API to extract Revit data and gain valuable insights through Power BI dashboards.

When the AEC Data Model API launched in June, it allowed the querying of key element properties from Revit RVT files. Autodesk is now starting to granularize the geometry, and at AU it announced it was making Revit geometric data available in a new private beta. For more on the AEC Data Model API read this AEC Magazine article.

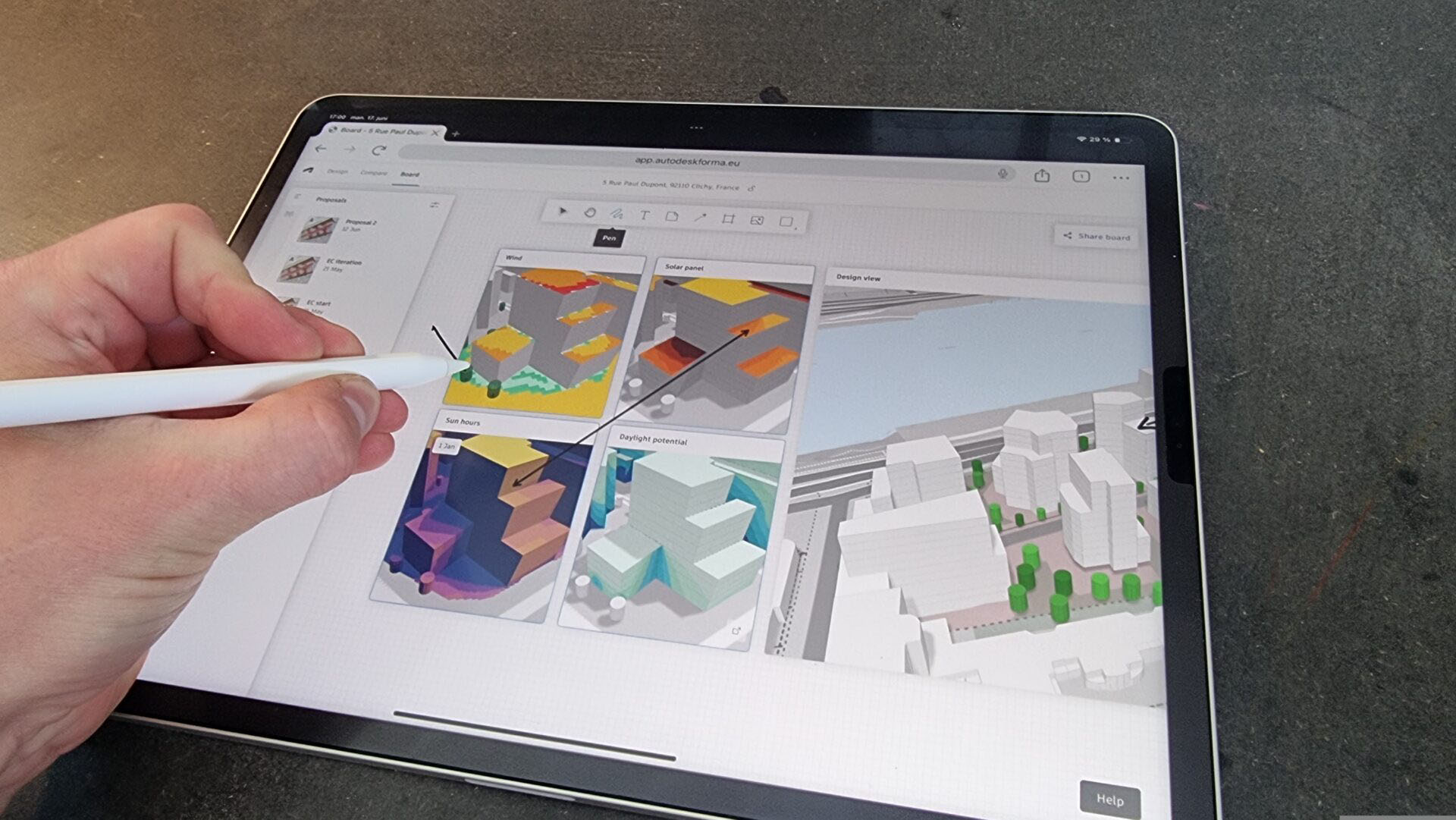

Autodesk Docs is also being used to feed data into Forma Board, a digital whiteboard and collaboration tool that allows project stakeholders to present and discuss concepts.

“Forma Board lets you pull in visuals from Forma and other Autodesk products through Docs, and now you can demonstrate the impact of sun or noise, ask for feedback on specific concepts, and much more,” said Bunszel.

Revit also got some airtime, but the news was a little underwhelming. Bunszel shared her favourite Revit 2025 update – the ability to export to PDF in the background without stopping your work. Meanwhile, manufacturing customers were being shown the future, with new features coming to Inventor 2026 such as associative assembly mirror and part simplification.

In the press conference Anagnost reiterated how Forma is different to Revit. “It is driven by outcomes,” he said. “We’re not trying to redo Revit in the cloud.”

Anagnost added that Forma is going to start moving downstream into things that Revit ‘classically does well’. “It doesn’t mean it has to swallow all of Revit, and you know that would take a long time, but it can certainly do things that Revit does today as it expands,” he said.

An iterative future

Autodesk is beginning to add clarity to its AI strategy. It is addressing AI from two angles: bottom up, bringing automation to repetitive and error-prone tasks, and top down, with technologies like Project Bernini that could in future fundamentally change the way designers and engineers work. The two will eventually meet in the middle.

Autodesk is keen to use AI to deliver practical solutions and the automation of drawings and constraints in Fusion should deliver real value to many firms right now, freeing up skilled engineers at a time when they are in short supply.

We expect automated drawings will find their way into Autodesk AEC products soon, but it’s hard to tell whether Autodesk has any concrete plans to use AI for modelling productivity in AEC.

As to pushing data into Autodesk Docs to get the maximum benefit out of AI, the fear that some customers have of getting trapped in the cloud is unlikely to go away any time soon.

Meanwhile, it’s clear there’s still a long way to go before the AI foundation models being explored in Project Bernini can deliver CAD geometry with ‘high accuracy and precision’.

While Bernini is starting to understand how to create basic geometry, the 3D models need more detail, and Autodesk must also work out how they can be of practical use inside CAD. With rapid advances in text-to-image AI, one also wonders what additional value text-to-CAD might bring to concept design. One could also ask whether product designers, architects or engineers would even want to use something like this to kickstart their design process. As the technology is still so embryonic it’s very hard to tell. It’s also important to remember that Bernini is a proof-of-concept, designed to explore what’s possible, rather than a practical application.

Meanwhile, as Autodesk continues to develop the complex AI training methods, there’s also the challenge of sourcing data for training. It will be interesting to see how Autodesk’s trust relationship with customers plays out.

While Autodesk’s long-term plan is to get multiple foundation models to talk together, this doesn’t mean we are heading for true design automation any time soon.

At AU Anagnost admitted that the day where AI can automatically deliver final outcomes from an initial specification is further away than one might think. “For those of you who are trying to produce an epic work of literature with ChatGPT, you know you have to do it iteratively,” he said. That same iterative process will apply to AI for design for some time to come.