Chaos is blending generative AI with traditional visualisation, rethinking how architects explore, present and refine ideas using tools like Veras, Enscape, and V-Ray, writes Greg Corke

From scanline rendering to photorealism, real-time viz to real-time ray tracing, architectural visualisation has always evolved hand in hand with technology.

Today, the sector is experiencing what is arguably its biggest shift yet: generative AI. Tools such as Midjourney, Stable Diffusion, Flux, and Nano Banana are attracting widespread attention for their ability to create compelling, photorealistic visuals in seconds — from nothing more than a simple prompt, sketch, or reference image.

The potential is enormous, yet many architectural practices are still figuring out how to properly embrace this technology, navigating practical, cultural, and workflow challenges along the way.

The disruption to architectural visualisation software as we know it could be huge. But generative AI also presents a major opportunity for software developers.

Find this article plus many more in the September / October 2025 Edition

Like some of its peers, Chaos has been gradually integrating AI-powered features into its traditional viz tools, including Enscape and V-Ray. Earlier this year, however, it went one step further by acquiring EvolveLAB and its dedicated AI rendering solution, Veras.

Veras allows architects to take a simple snapshot of a 3D model, or even a hand-drawn sketch, and quickly create ‘AI-rendered’ images with countless style variations. Importantly, the software is tightly integrated with CAD / BIM tools like SketchUp, Revit, Rhino, Archicad and Vectorworks, and gives users control over specific parts of the rendered image.

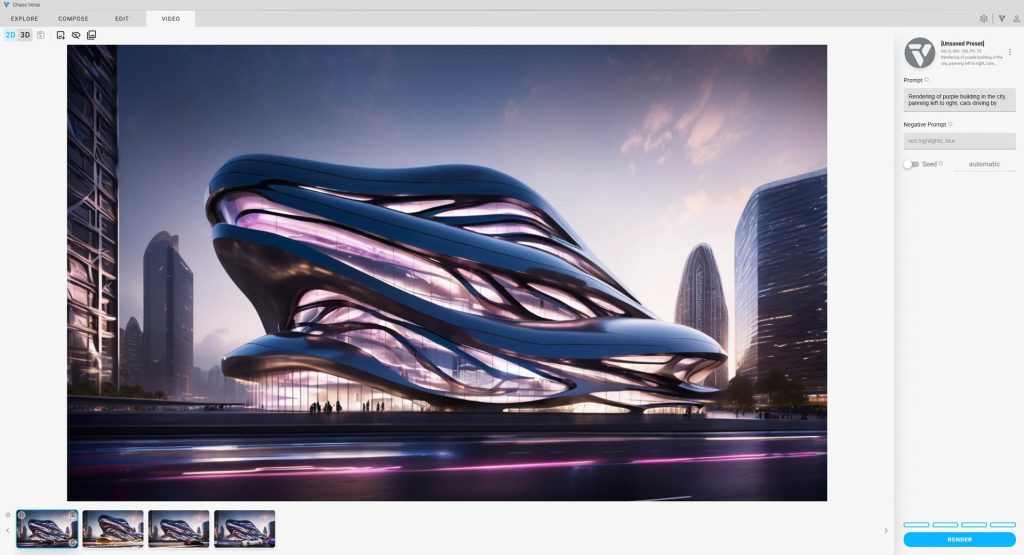

With the launch of Veras 3.0, the software’s capabilities now extend to video, allowing designers to generate short clips featuring dynamic pans and zooms, all at the push of a button.

“Basically, [it takes] an image input for your project, then generates a five-second video using generative AI,” explains Bill Allen, director of products, Chaos. “If it sees other things, like people or cars in the scene, it’ll animate those,” he says.

This approach can create compelling illusions of rotation or environmental activity. A sunset prompt might animate lighting changes, while a fireplace in the scene could be made to flicker. But there are limits. “In generative AI, it’s trying to figure out what might be around the corner [of a building], and if there’s no data there, it’s not going to be able to interpret it,” says Allen.

Chaos is already looking at ways to solve this challenge of showcasing buildings from multiple angles. “One of the things we think we could do is take multiple shots – one shot from one angle of the building and another one – and then you can interpolate,” says Allen.

Model behaviour

Veras uses Stable Diffusion as its core ‘render engine’. As the generative AI model has advanced, newer versions of Stable Diffusion have been integrated into Veras, improving both realism and render speed, and allowing users to achieve more detailed and sophisticated results.

“We’re on render engine number six right now,” says Allen. “We still have render engines four, five and six available for you to choose from in Veras.”

But Veras need not be tied to a specific generative AI model. In theory, it could evolve to support Flux, Nano Banana or whatever new or improved model comes along in future.

However, as Allen points out, the choice of model isn’t just down to the quality of the visuals it produces. “It depends on what you want to do,” he says. “One of the reasons that we’re using Stable Diffusion right now instead of Flux is because we’re getting better geometry retention.”

One thing that Veras doesn’t yet have out of the box is the ability for customers to train the model using their own data, although as Allen admits, “That’s something we would like to do.”

In the past, Chaos has used LoRAs (Low-Rank Adaptations) to fine-tune the AI model for certain customers, so that it can accurately represent specific materials or styles in their renderings.
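
Part of the appeal of LoRAs for per-customer tailoring is how little they change. A LoRA freezes a pretrained weight matrix of size d × k and trains only a low-rank update made of two smaller matrices (d × r and r × k), so it learns r(d + k) values instead of d × k. The back-of-envelope sketch below uses a hypothetical 768 × 768 layer and rank 8; these are illustrative assumptions, not figures from Chaos or Veras.

```python
def full_params(d: int, k: int) -> int:
    """Trainable values when fine-tuning an entire d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable values for a rank-r LoRA update: B (d x r) times A (r x k)."""
    return r * (d + k)


# Hypothetical layer size and rank, chosen only for illustration.
d, k, r = 768, 768, 8
full = full_params(d, k)
lora = lora_params(d, k, r)
print(f"full fine-tune: {full:,} values")
print(f"LoRA (rank {r}): {lora:,} values ({lora / full:.1%} of full)")
```

Because only the small adapter matrices are trained, one base model can in principle be paired with several lightweight, customer-specific adapters rather than a full copy of the model per customer.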

Roderick Bates, head of product operations, Chaos, expects demand for fine-tuning to grow over time, but says there may be other ways to achieve the desired outcome. “One of the things that Veras does well is that you can adjust prompts, you can use reference images and things like that to kind of hone in on style.”

Post-processing

While Veras experiments with generative creation, Chaos is also exploring how AI can be used to refine output from its established viz tools using a variety of AI post-processing techniques.

Chaos AI Upscaler, for example, enlarges render output by up to four times while preserving photorealistic quality. This means scenes can be rendered at lower resolutions (which is much quicker), then upscaled at the click of a button to add more detail.
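
The time saving comes from pixel count. Assuming ‘up to four times’ refers to each image dimension (the figure is not broken down here; if it means total pixels, the saving is smaller), a 4x upscale means rendering only one sixteenth of the final pixels. The sketch below does that arithmetic for a hypothetical 4K UHD target. Real render times do not scale perfectly with pixel count, so treat it as a rough guide.

```python
def render_resolution(target_w: int, target_h: int, scale: int) -> tuple[int, int]:
    """Resolution to render at so that upscaling each dimension by `scale` reaches the target."""
    if target_w % scale or target_h % scale:
        raise ValueError("target size must be divisible by the scale factor")
    return target_w // scale, target_h // scale


def pixel_fraction(scale: int) -> float:
    """Fraction of the final pixels actually rendered when each dimension is upscaled by `scale`."""
    return 1 / (scale * scale)


# Hypothetical 3840x2160 target with an assumed 4x per-dimension upscale.
w, h = render_resolution(3840, 2160, 4)
print(f"render at {w}x{h}, i.e. {pixel_fraction(4):.2%} of the final pixels")
```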

While AI upscaling technology is widely available, both online and in generic tools like Photoshop, Chaos AI Upscaler benefits from being accessible at the click of a button inside the viz tools, such as Enscape, that architects already use. Bates points out that if an architect uses another tool for this process, they must first download the rendered image, then upload it somewhere else, which fragments the workflow. “Here, it’s all part of an ecosystem,” he explains, adding that it also avoids the need for multiple software subscriptions.

Chaos is also applying AI in more intelligent ways, harnessing data from its core viz tools. Chaos AI Enhancer, for example, can improve rendered output by refining specific details in the image. This is currently limited to humans and vegetation, but Chaos is looking to extend this to building materials.

“You can select different genders, different moods, you can make a person go from happy to sad,” says Bates, adding that all of this can be done through a simple UI.

There are two major benefits: first, you don’t have to spend time searching for a custom asset that may or may not exist and then re-render; second, you don’t need highly detailed 3D assets, which would normally require significant computational power, or may not even be supported in a tool like Enscape.

The real innovation lies in how the software applies these enhancements. Instead of relying on the AI to interpret and mask off elements within an image, Chaos brings this information over from the viz tool directly. For example, output from Enscape isn’t just a dumb JPG — each pixel carries ‘voluminous metadata’, so the AI Enhancer automatically knows that a plant is a plant, or a human is a human. This makes selections both easy and accurate.
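
Per-pixel metadata turns a selection into a lookup rather than a guess. The sketch below assumes, purely for illustration, that the viz tool exports a buffer of per-pixel class labels alongside the colour image; the label values and the tiny 4 × 4 buffer are hypothetical and do not describe Enscape’s actual export format. Selecting ‘all plants’ is then an exact comparison, with no image-segmentation model involved.

```python
# Hypothetical per-pixel class labels; a real renderer's metadata format will differ.
BACKGROUND, BUILDING, PLANT, PERSON = 0, 1, 2, 3

# A tiny 4x4 label buffer standing in for the metadata exported with a render.
labels = [
    [BUILDING, BUILDING, PLANT, PLANT],
    [BUILDING, PERSON, PLANT, BACKGROUND],
    [BUILDING, PERSON, BACKGROUND, BACKGROUND],
    [BACKGROUND, BACKGROUND, BACKGROUND, PLANT],
]


def mask_for(label_buffer, target):
    """Return a boolean mask that is True wherever a pixel carries the target label."""
    return [[value == target for value in row] for row in label_buffer]


def pixel_count(mask):
    """Count the selected (True) pixels in a boolean mask."""
    return sum(sum(row) for row in mask)


plant_mask = mask_for(labels, PLANT)
person_mask = mask_for(labels, PERSON)
print(pixel_count(plant_mask), pixel_count(person_mask))
```

Because each mask is exact, an enhancement step can be confined to, say, the plant pixels without bleeding into the neighbouring facade.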

As it stands, the workflow is seamless: a button click in Enscape automatically sends the image to the cloud for enhancement.

But there’s still room for improvement. Currently, each person or plant must be adjusted individually, but Chaos is exploring ways to apply changes globally within the scene.

Chaos AI Enhancer was first introduced in Enscape in 2024 and is now available in Corona and V-Ray 7 for 3ds Max, with support for additional V-Ray integrations coming soon.

AI materials

Chaos is also extending its application of AI into materials, allowing users to generate render-ready materials from a simple image. “Maybe you have an image from an existing project, maybe you have a material sample you just took a picture of,” says Bates. “With the [AI Material Generator] you can generate a material that has all the appropriate maps.”

Initially available in V-Ray for 3ds Max, the AI Material Generator is now being rolled out to Enscape. In addition, a new AI Material Recommender can suggest assets from the Chaos Cosmos library, using text prompts or visual references to help make it faster and easier to find the right materials.

Cross pollination

Chaos is uniquely positioned within the design visualisation software landscape. Through Veras, it offers powerful one-click AI image and video generation, while tools like Enscape and V-Ray use AI to enhance classic visualisation outputs. This dual approach gives Chaos valuable insight into how AI can be applied across the many stages of the design process, and it will be fascinating to see how ideas and technologies start to cross-pollinate between these tools.

A deeper question, however, is whether 3D models will always be necessary. “We used to model to render, and now we render to model,” replies Bates, describing how some firms now start with AI images and only later build 3D geometry.

“Right now, there is a disconnect between those two workflows, between that pure AI render and modelling workflow – and those kind of disconnects are inefficiencies that bother us,” he says.

For now, 3D models remain indispensable. But the role of AI — whether in speeding up workflows, enhancing visuals, or enabling new storytelling techniques — is growing fast. The question is not if, but how quickly, AI will become a standard part of every architect’s viz toolkit.