3D design, collaboration and simulation platform gets new connectors, real time ray traced subsurface scattering shader and Microsoft 365 integration.

Nvidia has expanded the reach of its 3D design, collaboration and simulation platform, Nvidia Omniverse, by introducing several new connectors that link standard applications through the Universal Scene Description (USD) framework.

These include Blender, Cesium, Unity and Vectorworks. Omniverse Connectors for Azure Digital Twin, Blackshark.ai, and NavVis are coming soon, adding to the hundreds that are already available, such as Revit, SketchUp, Archicad, and 3ds Max.

Nvidia is also adding new features to the platform through the forthcoming Omniverse Kit 105 release. This includes the ‘first real time ray traced subsurface scattering shader’. Richard Kerris, VP Omniverse platform development at Nvidia, explains: “When light hits an object, depending on what that object is, a light can be refracted or split or shattered through the different types of surfaces.

“So when light hits marble or it hits something like skin, it doesn’t just bounce off of it, there’s actually parts where the light goes in, and it scatters around, but it’s very computationally hard to do.

“We were the first ones a few years ago to do real time photorealistic ray tracing and now adding to that the first real time ray traced subsurface scattering.”

Other forthcoming features include performance improvements, thanks to new runtime data transfer and scene optimisers for large ‘worlds’, and more ‘sim ready’ assets, which now number in the hundreds.

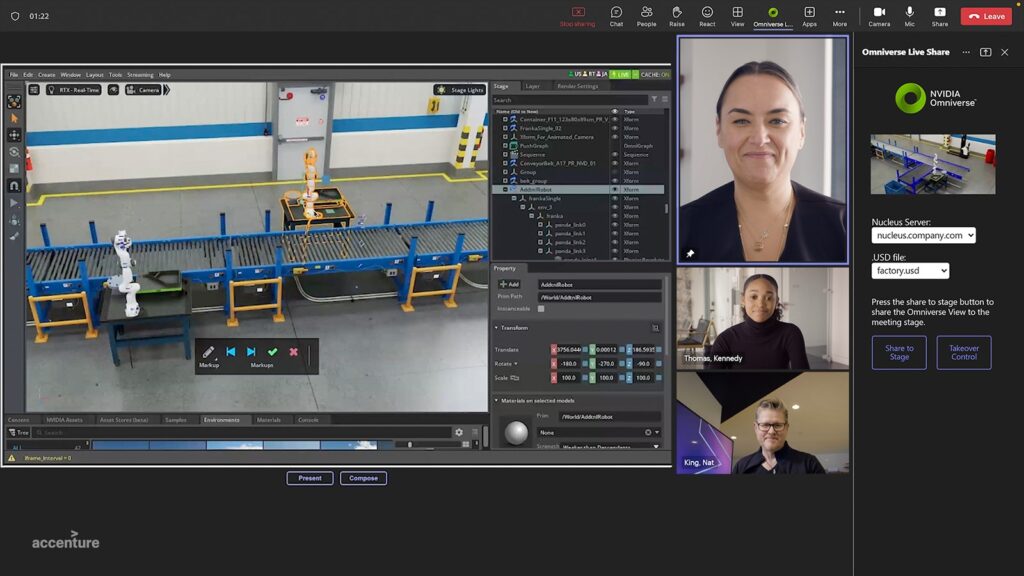

Nvidia has also been working closely with Microsoft to bring Omniverse Cloud to Microsoft Azure. The next stage is to bring this to Microsoft 365 to make Omniverse viewers available inside applications such as Teams. From a Teams call, for example, participants will be able to teleport into a 3D environment to work and better understand what’s taking place. “Each of them will have their own experience in that 3D environment, collaboratively,” says Kerris.

“Integrating this [Omniverse] into Teams is just a natural progression of how we communicate. It’s the manipulation of the 3D world in much the same way you can do today in the 2D paradigm of the web,” he adds.

Users won’t need local processing for this. “You’ll be streaming out, in much the same way that you access the cloud through a browser,” says Kerris.

Omniverse will also be connected to the Azure IoT ecosystem, delivering real world sensor inputs from Azure Digital Twin to Omniverse models.

Everyone can be a developer

Nvidia is working to harness the power of ChatGPT for Omniverse. Kerris explains that end users will be able to use ChatGPT and instruct it to write code which they can then drop into Omniverse.

“You’ll have an idea for something, and you’ll just be able to tell it to create something and a platform like Omniverse will allow you to realise it and see your vision come to life,” he says.

Developers can also use AI-generated inputs to provide data to Omniverse extensions like Camera Studio, which generates and customises cameras in Omniverse using data created in ChatGPT.
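To give a rough sense of what ‘AI-generated inputs’ for a camera could look like in practice, here is a minimal sketch in Python. It is not how Camera Studio works internally, which Nvidia has not detailed here. It simply assumes a chatbot has returned a small JSON description of a camera and turns that into a USD text (.usda) layer. The JSON field names (`name`, `focal_length`, `f_stop`, `translate`) and the helper `camera_to_usda` are hypothetical; only the USD camera attributes `focalLength` and `fStop` and the `xformOp:translate` transform come from the USD schema itself.

```python
import json
import re

# USD prim names must be plain identifiers (letters, digits, underscores).
_VALID_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def camera_to_usda(spec: dict) -> str:
    """Render a camera description as a minimal USD text (.usda) layer.

    `spec` is a hypothetical, illustrative format and not an Omniverse API.
    """
    name = spec["name"]
    if not _VALID_NAME.fullmatch(name):
        raise ValueError(f"not a valid USD prim name: {name!r}")

    # Fall back to neutral defaults when the generated data omits a field.
    tx, ty, tz = (float(v) for v in spec.get("translate", (0.0, 0.0, 0.0)))
    focal_length = float(spec.get("focal_length", 35.0))
    f_stop = float(spec.get("f_stop", 0.0))  # 0 means no depth-of-field blur

    return "\n".join([
        "#usda 1.0",
        "(",
        '    defaultPrim = "World"',
        ")",
        "",
        'def Xform "World"',
        "{",
        f'    def Camera "{name}"',
        "    {",
        f"        float focalLength = {focal_length}",
        f"        float fStop = {f_stop}",
        f"        double3 xformOp:translate = ({tx}, {ty}, {tz})",
        '        uniform token[] xformOpOrder = ["xformOp:translate"]',
        "    }",
        "}",
        "",
    ])


# Stand-in for text returned by a chatbot; the values are made up.
generated = '{"name": "HeroShot", "focal_length": 50, "translate": [0, 150, 400]}'
usda_text = camera_to_usda(json.loads(generated))
```

Saving `usda_text` to a file with a `.usda` extension should give a layer that USD-aware tools can open. Validating the prim name before writing matters because generated text is not guaranteed to follow USD naming rules.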

Nvidia has also announced Nvidia Picasso, a cloud service for AI-powered image, video and 3D applications, designed for software developers, not for end users. “Imagine just typing in what you need, and it creates a USD-based model that you can put into Omniverse and continue on,” explains Kerris.

Finally, Nvidia has introduced its third generation of OVX, a computing system for large-scale digital twins running within Nvidia Omniverse Enterprise, powered by Nvidia L40 GPUs and BlueField-3 DPUs.