A technology that can create millimetre-accurate ‘reality meshes’ from photographs looks set to give laser scanning some serious competition. By Greg Corke and Martyn Day

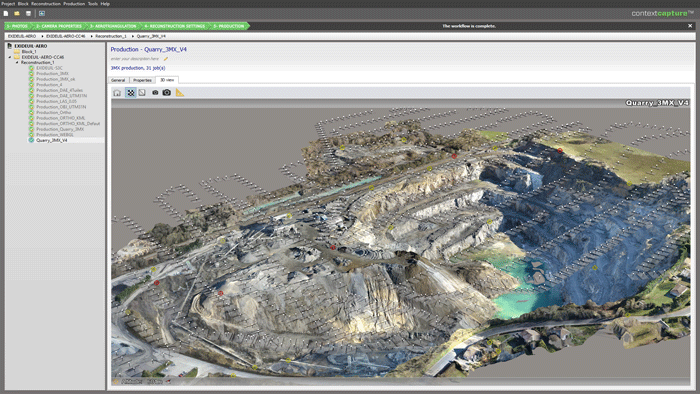

In early 2015 Bentley acquired a French developer called Acute3D, which created a product called ‘Smart3DCapture’. The software essentially converted high resolution photographs into accurate, bitmapped 3D meshed models through photogrammetry.

A similar technology was championed by Autodesk under the 123D Catch brand. This was actually the product of an earlier licence deal between Autodesk and Acute3D, so Bentley also appears to have pulled off a bit of a coup.

While laser scanners remain high-ticket items, it seems that lots of humble photographs, when combined with good algorithms, can produce meshed surveys of buildings, landscapes or cityscapes with up to 1mm accuracy, combining aerial photos from drones or helicopters with terrestrial photographs.

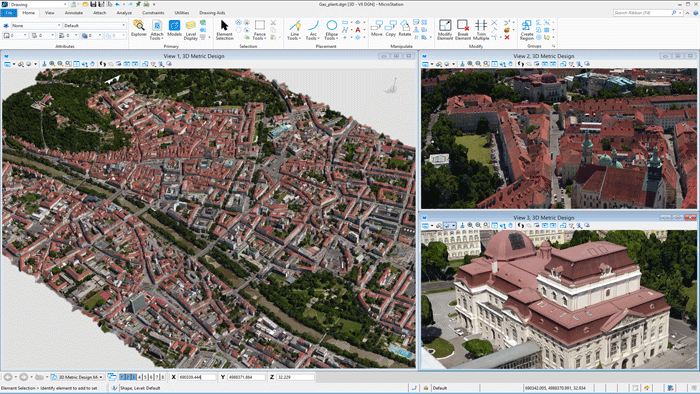

It is possible to rapidly create lifelike 3D models out of photographs. At Bentley’s Year in Infrastructure (YII) event in London we were shown real-world examples of Acute3D’s technology at work in civil, city, power and planning projects, all within a seamless Bentley ecosystem. It was incredibly impressive.

Greg Bentley explained that at the beginning of 2015 Bentley, while demonstrating MicroStation Connect Edition to an existing client, ESM Productions, also showed Acute3D’s technology.

It turns out ESM Productions had been tasked with planning the Pope’s visit to Philadelphia in September 2015. At 60 acres, the size of the public venue was huge so it was a Herculean task.

Between Bentley and ESM, a plan was hatched and Bentley took thousands of photos of Philadelphia from ground level and from helicopter. A reality model was then created for the design team to work off.

Considering Acute3D had only just been acquired by Bentley and had yet to be properly integrated into MicroStation, this also put some pressure on the Bentley development team.

The net result was 28,000 photographs (28GB of data) turned into one reality mesh, from which one square mile was refined.

ESM Logistics imported the mesh into MicroStation and the company’s designers set about adding the 56,400 temporary facilities in detail using MicroStation’s standard 3D and 2D commands. The resulting models can be easily shared via a browser or tablet.

The success of reality modelling on this project means that ESM Productions now wants to use it on all of its events, irrespective of size. Having sweated alongside its customer on this major job, Bentley has also speedily integrated the technology into MicroStation and produced a flavour called ContextCapture.

Scott Mirkin, co-founder and executive producer, ESM Productions, said of Bentley ContextCapture, “In the end, we experienced dramatic risk reduction, better decision making, exceptional timeliness, and greater efficiency. The goal we set with Bentley to test the applicability of reality modelling as a mission-critical event planning technology was completely validated, and we are now planning to offer this new value to our clients going forward. In fact, we were so impressed that we are creating a documentary highlighting our use and the outcomes we achieved.”

The photographs for this project were all taken in one day and processed overnight, which would have been impossible to achieve with traditional laser scanning methods.

It seems that laser scanning and point clouds have some serious competition.

There are a number of interesting applications, from as-built modelling to site progress monitoring (it is a whole lot easier to mount a consumer camera on a drone than a laser scanner). However, photogrammetry’s key requirement is light, so it is not ideal for overnight surveys or when down a mineshaft!

At the recent Bentley Year in Infrastructure event in London, Greg Bentley took the Philadelphia reality model one stage further, showing one of Foster + Partners’ designs for Comcast’s new HQ in context within an Acute3D-generated city model.

We were then taken inside the building where, using LumenRT, various lighting and shading options were demonstrated, showing how the interior would look throughout the year, with views out over Philadelphia. The potential for conceptual design and checking design options and impacts is truly mind-blowing.

ContextCapture uses GPUs to process the hundreds or thousands of photos needed to create a reality mesh. To speed processing time for very large models (typically involving more than 30 gigapixels of imagery) users can use the dedicated ContextCapture Center in the cloud, which is architected for grid computing with multiple GPUs.
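To put the 30 gigapixel figure in context, the short Python sketch below estimates the total imagery in a photo set. It is only back-of-envelope arithmetic, not part of ContextCapture: the function names are hypothetical, the 20 megapixel camera resolution is an assumption (the resolution of the Philadelphia photographs is not stated), and 30 gigapixels is treated as a rough guide rather than a hard limit.

```python
# Rough guide from the article: very large models typically involve
# more than 30 gigapixels of imagery.
LARGE_MODEL_GIGAPIXELS = 30.0


def total_gigapixels(photo_count: int, megapixels_per_photo: float) -> float:
    """Total imagery in gigapixels (1 gigapixel = 1,000 megapixels)."""
    return photo_count * megapixels_per_photo / 1000.0


def is_very_large_model(photo_count: int, megapixels_per_photo: float) -> bool:
    """True if the photo set exceeds the rough 30 gigapixel guide."""
    return total_gigapixels(photo_count, megapixels_per_photo) > LARGE_MODEL_GIGAPIXELS


if __name__ == "__main__":
    # 28,000 photos is the Philadelphia figure; 20 MP per photo is assumed.
    photos, assumed_megapixels = 28_000, 20.0
    print(total_gigapixels(photos, assumed_megapixels), "gigapixels")
    print("Very large model:", is_very_large_model(photos, assumed_megapixels))
```

On those assumptions the Philadelphia photo set would be well beyond the 30 gigapixel mark, whereas a survey of 1,000 photos from the same camera would fall below it.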

This article is part of an in-depth technology feature on Bentley Systems.

Read Bentley merges design with reality.
