Company introduces Project DIGITS, a tiny desktop AI supercomputer, and launches AI foundation models for RTX AI PCs and workstations

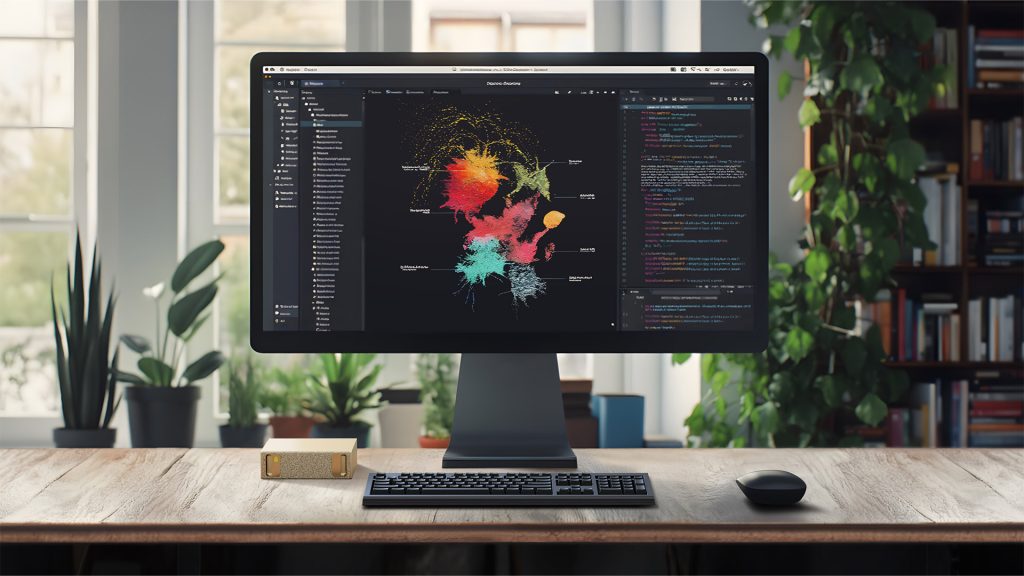

Nvidia has announced Project DIGITS, a tiny desktop system that is designed to allow AI developers and researchers to prototype, fine-tune and test large AI models on the desktop.

The ‘personal AI supercomputer’ is powered by the GB10 Grace-Blackwell superchip, a shrunk-down version of the Arm-based Grace CPU and Blackwell GPU system-on-a-chip (SoC) that is used in datacentre AI supercomputers.

This article is part of AEC Magazine’s 2025 Workstation Special report

Project DIGITS systems are equipped with 128 GB of memory, allowing developers to run up to 200-billion-parameter large language models (LLMs). Using Nvidia ConnectX networking, two Project DIGITS machines can be linked to run up to 405-billion-parameter models.
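To put those figures in context, the rough sketch below estimates how much memory a model's weights alone would occupy at different precisions. The article does not say which precision the 200-billion and 405-billion-parameter figures rely on, so the 4-bit case here is an assumption, and the helper names (`weights_gb`, `fits`) are purely illustrative. The estimate ignores the KV cache, activations and operating system overhead, so real-world headroom will be smaller.

```python
def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes).

    params_billion * 1e9 parameters * (bits / 8) bytes each, divided by 1e9.
    """
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit in the given memory (no runtime overhead)."""
    return weights_gb(params_billion, bits_per_param) <= memory_gb


ONE_UNIT_GB = 128              # memory per Project DIGITS system, per the article
TWO_UNITS_GB = 2 * ONE_UNIT_GB  # two linked systems

# Precisions are assumptions for illustration: 16-bit, 8-bit and 4-bit weights.
for params, memory in ((200, ONE_UNIT_GB), (405, TWO_UNITS_GB)):
    for bits in (16, 8, 4):
        size = weights_gb(params, bits)
        verdict = "fits" if fits(params, bits, memory) else "does not fit"
        print(f"{params}B parameters at {bits}-bit: {size:.1f} GB, {verdict} in {memory} GB")
```

Under these assumptions, only the 4-bit case fits in both scenarios: roughly 100 GB for 200 billion parameters on one system, and about 202.5 GB for 405 billion parameters across two.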

The idea is that users can develop and run inference on AI models using their own desktop system, then ‘seamlessly deploy’ the models on accelerated cloud or datacentre infrastructure.

“Any user doing AI development could use a Project DIGITS system on their desktop to complement their current AI datacentre or cloud resources, or perhaps offload their laptop or desktop system when they need to work with larger models or need more compute power,” said Allen Bourgoyne, Nvidia’s director of product marketing for enterprise platforms.

Project DIGITS uses Nvidia DGX OS, which is built on Ubuntu Linux, and runs the entire Nvidia AI software stack, including DGX Cloud. Project DIGITS will be available in May from Nvidia and top partners, starting at $3,000.

One alternative to Project DIGITS is to do AI development on a desktop workstation with one or more Nvidia RTX GPUs. High-end Nvidia RTX GPUs, such as the Nvidia RTX 6000 Ada, should offer more AI performance but max out at 48 GB of VRAM. To work with larger AI models, multiple GPUs can be used together – up to four in high-end desktop workstations like the HP Z8 Fury G5 – but as Bourgoyne explains, users will need to ensure their models and tools can deal with multi-GPU and multi-memory footprints.

Nvidia is also making it easier for developers and enthusiasts to build AI agents and creative workflows on workstations and PCs. The company has announced AI foundation models — neural networks trained on immense amounts of raw data — which are optimised for performance on Nvidia RTX and GeForce GPUs.

Nvidia has said it will also release a pipeline of NIM microservices for RTX AI PCs from top model developers such as Black Forest Labs, Meta, Mistral and Stability AI. Use cases span LLMs, vision language models, image generation, speech, embedding models for retrieval-augmented generation (RAG), PDF extraction and computer vision.

NIM microservices are also available to PC users through AI Blueprints — reference AI workflows that can run locally on RTX PCs. With these blueprints, Nvidia says developers can generate images guided by 3D scenes, create podcasts from PDF documents, and more.

The AI Blueprint for 3D-guided generative AI, for example, gives artists finer control over image generation. With this blueprint, simple 3D objects laid out in a 3D renderer like Blender can be used to guide AI image generation. The artist can create 3D assets by hand or generate them using AI, place them in the scene and set the 3D viewport camera. Then, a prepackaged workflow powered by the FLUX NIM microservice will use the current composition to generate high-quality images that match the 3D scene, as demonstrated in the video below.