Greg Corke reports on the latest developments in reality modelling at Bentley Systems, including streamable, scalable reality meshes and automatic mesh classification using deep learning.

Reality modelling at Bentley Systems has come a long way in a very short time. It was only a few years ago that the company was talking up point clouds as being the new fundamental data type, just like 2D vectors, 3D solids and 2D raster. Now, everything is about the reality mesh and using it to capture site conditions, as-built projects or even entire cities.

The beauty of the reality mesh is that you don’t need sophisticated surveying equipment. For a small project, simply take a hundred or so photographs from the ground, and from the air using a drone, and you can have an engineering-ready dataset in no time at all.

Reality meshes are everywhere and crop up in virtually every conversation you have with a Bentley exec, whether it’s about road and rail or buildings and design viz. There’s a genuine excitement about the potential of the technology, and equally about the delivery mechanism: the company’s Scalable Mesh technology, encapsulated in the 3SM format.

Scalable meshes allow you to stream multi-resolution meshes on demand, automatically, to desktop or mobile devices. With no theoretical limit on size, they can change the way designers, contractors and visualisers work with incredibly large reality modelling datasets.

3SM files can be exported from the new ContextCapture Connect Edition software. Data can be streamed on demand from ProjectWise ContextShare at a resolution appropriate to the scale at which the project is being viewed. Low-resolution data would be shown when viewing a road corridor in its entirety, but more detail would be automatically streamed in as you zoom into the model.

In a plant, for example, you could even read the small print on a safety notice, provided the data has been captured with a high-res camera. This could be extremely useful for inspection, or for asset management through the creation of a digital twin.
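Bentley hasn’t published how 3SM decides which resolution to stream, so the sketch below is only an illustration of the general idea, assuming the screen-space-error approach used by many level-of-detail streaming systems. Each finer level is assumed to halve the geometric error of the level above, and the viewer picks the coarsest level whose error, projected onto the screen, stays under a pixel budget. The function names, the 2-pixel budget and the error values are all hypothetical.

```python
import math

def screen_space_error(geometric_error_m, distance_m, screen_height_px, fov_y_rad):
    """Project a geometric error (metres) at a given distance onto the screen (pixels)."""
    return geometric_error_m * screen_height_px / (2.0 * distance_m * math.tan(fov_y_rad / 2.0))

def choose_level(base_error_m, max_level, distance_m, screen_height_px=1080,
                 fov_y_rad=math.radians(60), max_error_px=2.0):
    """Return the coarsest level (0 = coarsest) whose projected error is within budget.

    Hypothetical model: each finer level halves the geometric error of the one above.
    """
    for level in range(max_level + 1):
        error_m = base_error_m / 2 ** level
        if screen_space_error(error_m, distance_m, screen_height_px, fov_y_rad) <= max_error_px:
            return level
    return max_level  # nothing coarser is good enough, so stream the finest data

# Viewing a whole road corridor from 10 km away versus a detail from 10 m away
print(choose_level(64.0, 12, 10_000))  # 2  (coarse data is enough)
print(choose_level(64.0, 12, 10))      # 12 (finest level streamed in)
```

Zooming in shrinks the distance, which inflates the projected error and pushes the viewer towards finer levels, which is the behaviour described above for a road corridor.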

Bentley describes 3SM as a more complete solution that solves many of the problems of other formats commonly used to provide context for engineering projects. For example, DTM and TIN are nowhere near as scalable, while STM and 3MX are not considered ‘engineering-ready’, so they can’t be used to calculate accurate quantities.

3SM can also handle different data types: a single scalable mesh file can contain terrain data from many sources, such as DTM, point clouds, raster elevation and more.

Reality capture

The data for reality meshes can be acquired from many different sources. On a road project, for example, photographs could be captured with an Unmanned Aircraft System (UAS). For smaller scale projects, an Unmanned Aerial Vehicle (UAV), commonly known as a drone, might be equipped with multiple data capture devices, including an SLR camera, a laser scanner or a thermographic imaging device. All of this data can be fed into ContextCapture to create the mesh.

For interiors, Bentley recently partnered with GeoSlam to streamline the workflow between ContextCapture and GeoSlam’s handheld devices, which capture laser scans and photos in real time as you walk through a building. This is a great alternative to static laser scanners, which can be time-consuming to set up in each room, and to photographs alone, as walls with no texture and reflective surfaces can cause problems for photogrammetry.

However, as Bentley’s Francois Valois admits, GeoSlam’s technology is not suited to all workflows. “It won’t necessarily give you engineering grade data, but it will definitely give you speed and it is perfect for asset management.”

Data quality

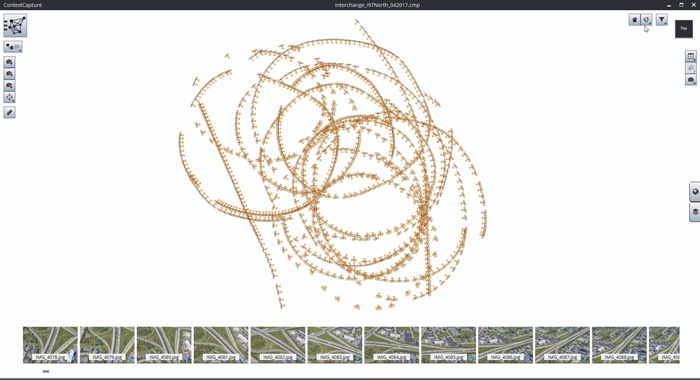

One of the key challenges with ContextCapture is ensuring that the photographs you feed into the software have the quality and coverage needed to create a good model. With drones, particularly when working in confined spaces, the challenges are even greater, so Bentley has partnered with Drone Harmony, a company that offers an Android app to help users plan flight paths easily.

The software automatically sets the right overlap between photos and can fly around complex buildings while maintaining a constant distance. This is important, because the distance from the subject defines the resolution of the mesh.
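Why distance matters can be shown with the standard pinhole-camera arithmetic for ground sampling distance (GSD), the width of surface covered by one pixel, and for the spacing between shots needed to reach a given overlap. This is a rough sketch, not Drone Harmony’s planning logic; the camera values are illustrative rather than those of any particular drone, and the function names are hypothetical.

```python
def ground_sampling_distance_m(distance_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Width of surface covered by one image pixel, in metres (pinhole camera model)."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return pixel_pitch_mm * distance_m / focal_length_mm

def shot_spacing_m(distance_m, focal_length_mm, sensor_width_mm, overlap):
    """Distance to move between shots so consecutive images overlap by `overlap` (0 to 1)."""
    footprint_m = sensor_width_mm * distance_m / focal_length_mm
    return footprint_m * (1.0 - overlap)

# Illustrative camera values: 13.2 mm wide sensor, 8.8 mm lens, 5472 px wide images
for d in (20, 40):
    gsd_mm = ground_sampling_distance_m(d, 8.8, 13.2, 5472) * 1000
    step = shot_spacing_m(d, 8.8, 13.2, overlap=0.8)
    print(f"{d} m away: {gsd_mm:.1f} mm/pixel, one shot every {step:.1f} m")
```

Doubling the distance from the building doubles the size of each pixel on its surface (roughly 5.5 mm to 11 mm here), which is why holding a constant distance gives a mesh of consistent resolution.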

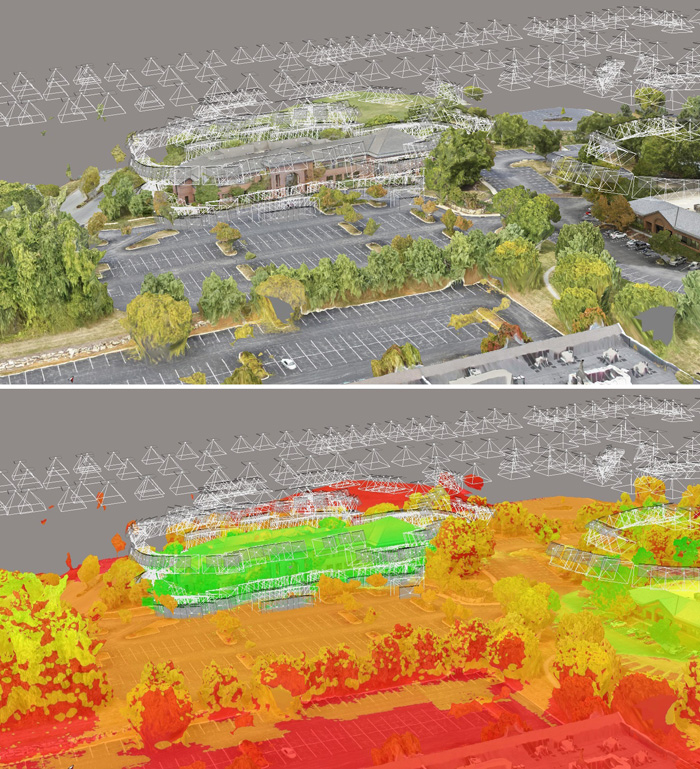

Bentley has also developed a 3D resolution tool in ContextCapture that allows users to assess the quality of the mesh data easily. In simple terms, areas of the mesh coloured green are ‘good’, meaning the data was derived from more photos or was captured close to the laser scanner, whereas areas coloured red are ‘bad’. If you are using the reality mesh to make engineering decisions, this can help you judge what the data can be used for.
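The colour coding can be pictured as a simple ramp from a per-point resolution estimate to green or red. The sketch below assumes such an estimate (in metres, smaller is better) is already available; the thresholds, function names and the fit-for-purpose check are hypothetical and are not how ContextCapture computes or displays its 3D resolution.

```python
def resolution_to_rgb(resolution_m, good_m=0.01, bad_m=0.10):
    """Map a local resolution estimate (metres; smaller is better) to a green-to-red colour.

    Values at or below `good_m` are pure green, at or above `bad_m` pure red,
    with a linear blend in between. Both thresholds are hypothetical.
    """
    t = (resolution_m - good_m) / (bad_m - good_m)
    t = min(max(t, 0.0), 1.0)  # clamp to the ends of the ramp
    return (round(255 * t), round(255 * (1 - t)), 0)

def fit_for_purpose(resolution_m, tolerance_m):
    """Hypothetical check: is the data fine enough for a task with this tolerance?"""
    return resolution_m <= tolerance_m

print(resolution_to_rgb(0.005))  # (0, 255, 0): well covered, green
print(resolution_to_rgb(0.25))   # (255, 0, 0): poorly covered, red
```

Pairing the colour with a tolerance check mirrors the point above: the same mesh may be fine for visualisation but too coarse for a measurement with a tight tolerance.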

In ContextCapture Update 8, due out in 2018, it will be possible to use photos only for texturing the mesh and not for creating the 3D model, which is useful when combining photos and laser scans. A smart algorithm will decide whether the photos or the laser scan is better, but the user will still be able to override its choice.

Engineering and construction

Reality meshes can be used for design, engineering, construction, maintenance, inspection, visualisation and more. You can accurately measure coordinates, distances, areas and volumes with ease.

Bentley is also developing task-specific tools, such as one that can analyse volume differences between two reality meshes (or a reality mesh and a design model) and view the cut or fill quantities.

Practical applications include tracking construction progress, quality control, or even checking the accuracy of contractor billing. Bentley also plans to extend this type of verification technology to building design and BIM models.
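Bentley hasn’t said how its volume tool works internally. One common simplification, assumed here, is to sample both surfaces (say, the site survey mesh and the design model) as heights on the same regular XY grid and sum the height differences cell by cell. The function name and the sample grids are hypothetical.

```python
def cut_fill_volumes(existing, design, cell_size_m):
    """Cut and fill volumes (m^3) between two elevation grids sampled on the same cells.

    `existing` and `design` are lists of rows of elevations in metres; None marks
    a cell with no data in that surface and is skipped.
    Cut: existing surface above design (material to remove).
    Fill: existing surface below design (material to add).
    """
    cell_area = cell_size_m ** 2
    cut = fill = 0.0
    for row_existing, row_design in zip(existing, design):
        for z_existing, z_design in zip(row_existing, row_design):
            if z_existing is None or z_design is None:
                continue
            dz = z_existing - z_design
            if dz > 0:
                cut += dz * cell_area
            else:
                fill -= dz * cell_area
    return cut, fill

existing = [[10.0, 10.0], [10.0, None]]
design = [[9.0, 10.0], [10.5, 10.0]]
print(cut_fill_volumes(existing, design, cell_size_m=2.0))  # (4.0, 2.0)
```

Running the same comparison between weekly captures of a site, rather than against a design, would give the kind of progress tracking described above.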

Mesh production

Creating mesh data from photographs in ContextCapture takes a lot of compute power. Bentley is well aware of this and dedicates significant development resources to optimising the engine and reducing processing time. ContextCapture Update 7 is out later this year, and Bentley execs reckon it is more than 30% faster than Update 5, with some projects seeing a 50% speed-up.

The processing is done using Nvidia CUDA GPUs (not CPUs) and can be spread across multiple GPUs and multiple machines.

However, for firms that don’t want to invest in powerful GPU hardware, Bentley also offers the ContextCapture Cloud processing service, which allows organisations to process images and point clouds and create a number of deliverables including reality meshes, orthophotos, and digital surface models.

The service is scalable on demand, meaning more engines can be applied to urgent jobs in order to get results back quicker. Simply define quality and speed and the cloud processing service does the rest.

Job submission can be done through the ContextCapture Console client, a desktop client that lets you connect to the cloud and upload your photos.

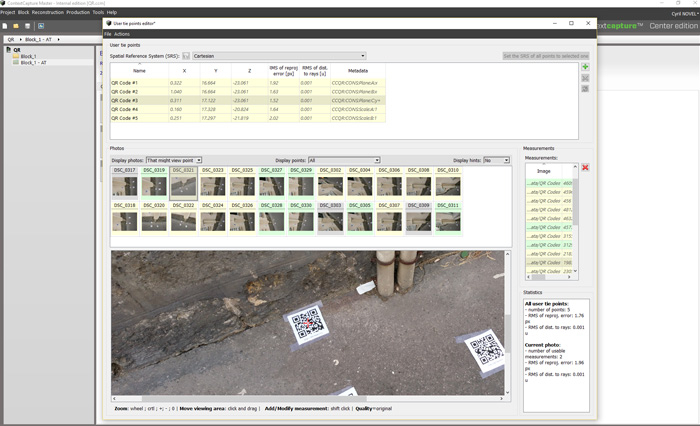

Georeferencing can be done by importing ground control points as a text file, then manually picking the points in the photos. ContextCapture also now offers a QR code framework to capture ground control points automatically. Simply place printed QR codes at known locations on site, prior to capturing the images, and the software will automatically find them in the scene.

Bentley also offers a ContextCapture Mobile app that lets you create 3D models from images taken with your phone or tablet. As mobile compute power is limited, all of the processing is done in the cloud. Simply upload your photos, then once the mesh has been processed it can be displayed on your device. This application is more appropriate for creating meshes of smaller objects and is well suited to inspection, or even to assess cracks.

Mesh distribution

The cloud isn’t only good for brute-force processing; it’s also great for managing and sharing reality modelling data.

The new ProjectWise ContextShare cloud service can stream reality mesh data to desktop tools like MicroStation or Descartes, mobile tools like Navigator or even to a simple web browser. It means everyone instantly has access to the latest data and there’s no need to distribute giant files. Moving forward, ProjectWise ContextShare will also be able to handle point clouds and images.

In the future, Bentley will link reality modelling data more tightly to the iModel Hub, a new technology that maintains a ‘timeline of changes’ or, if you prefer, an accountable record of who did what, and when.

Deep learning

Automation is the future and Bentley is currently exploring different ways of using Artificial Intelligence (AI) to get more out of reality mesh data.

At YII in Singapore last month, Bentley Fellow Zheng Yi Wu shared details of two R&D projects that use the AI technique known as deep learning. He explained that, in the last two years, computers have become better than humans at recognising images, beating the human error rate of around 5%, and that this has led to big leaps in AI development.

The first project is designed to automatically detect and quantify cracks on infrastructure projects. Following image acquisition, the process involves post-processing, feature extraction, edge detection and then quantification of the crack: not just identifying that there is a crack, but also measuring how long and how wide it is. Zheng showed the crack detection tool working on several use cases, including pavements, buildings and bridges. By automating both the detection process and the drone survey, this technology should make it much quicker and easier to prioritise maintenance and, of course, to identify issues before it is too late.
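The final quantification step can be sketched in a few lines, assuming the earlier stages (feature extraction and edge detection) have already produced an ordered centreline for the crack and a set of width measurements across it, both in pixels. The image scale converts pixels to millimetres. This is a hypothetical illustration of the arithmetic, not the deep learning pipeline Zheng’s team has built; the function and field names are invented for the example.

```python
import math

def quantify_crack(centreline_px, widths_px, mm_per_px):
    """Turn a detected crack into physical measurements.

    centreline_px: ordered (x, y) pixel coordinates along the crack's centreline
    widths_px: crack widths in pixels measured across the centreline
    mm_per_px: image scale, e.g. from the ground sampling distance
    """
    # Length is the sum of the straight segments between consecutive centreline points
    length_px = sum(math.dist(a, b) for a, b in zip(centreline_px, centreline_px[1:]))
    return {
        "length_mm": length_px * mm_per_px,
        "mean_width_mm": sum(widths_px) / len(widths_px) * mm_per_px,
        "max_width_mm": max(widths_px) * mm_per_px,
    }

print(quantify_crack([(0, 0), (3, 4), (3, 10)], [2, 4, 3], mm_per_px=0.5))
# {'length_mm': 5.5, 'mean_width_mm': 1.5, 'max_width_mm': 2.0}
```

Because the result depends directly on `mm_per_px`, the accuracy of the measurements is only as good as the capture resolution, which ties back to flying the drone at a controlled distance.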

The second project is exploring the automatic classification of reality mesh data. Currently, in MicroStation or ContextCapture Editor, you can select an area within the mesh and classify it as a specific type of object. Zheng Yi Wu’s team has been using deep learning to train a system to recognise objects automatically, starting with trees and roads, and then to apply those classifications to the rest of the reality mesh.

Conclusion

It feels like there is an unstoppable momentum behind reality modelling at the moment. The technology was credited in almost twice as many nominated projects at the Bentley Be Inspired awards this year, compared to 2016, and 21 of the 55 finalists used reality modelling to base their engineering work on digital context, said Greg Bentley in his keynote.

The beauty of ContextCapture is that it isn’t fussy about where it gets its source data: reality meshes can be crafted from point clouds as well as photographs. However, what makes the technology so compelling is the ease with which drones can capture photographs, and then re-capture them on a weekly, if not daily, basis. And when it comes to distribution, with colossal scalable meshes able to be streamed on demand into Bentley’s own design, asset management and visualisation tools, Bentley is leading the charge.

If you enjoyed this article, subscribe to AEC Magazine for FREE