The BHoM computational development project allows AEC teams to improve project collaboration, foster standardisation and develop advanced computational workflows, as Buro Happold’s Giorgio Albieri and Christopher Short explain

In Buro Happold’s structural engineering team, we’re constantly working on unique and challenging projects, from towering skyscrapers to expansive stadiums, intricate museums to impressive bridges.

Our approach is all about exploring multiple options, conducting detailed analyses, and generating 3D and BIM models to bring these projects to life. But this process comes with the major challenge of interoperability – the ability of different systems to exchange information.

Since we collaborate with multiple disciplines and design teams from all over the world, we regularly deal with data from various sources and formats, which can be a real challenge to manage.

The AEC industry often deals with this by creating ad-hoc tools as and when the need arises (such as complex spreadsheets or macros). But these tools often end up being one-offs, used by only a small group, so we end up reinventing the wheel again and again.

This is where the BHoM (Buildings and Habitats object Model) comes into play, a powerful open-source collaborative computational development project for the built environment supported by Buro Happold.

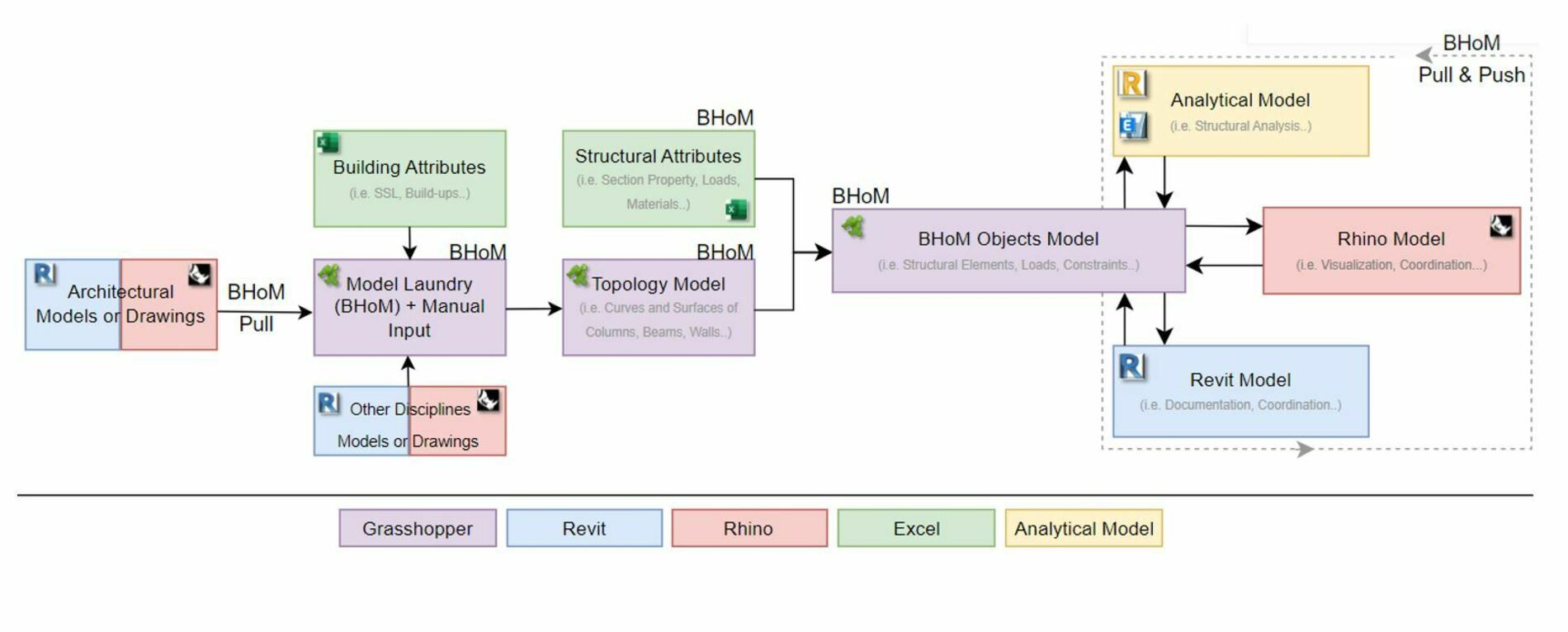

BHoM helps improve collaboration, foster standardisation and develop advanced computational workflows. Thanks to its central common language, it makes it possible to interoperate between many different programs.

Instead of creating translators between every possible pair of software applications, we only need to write a single translator between the BHoM and each target software, which then connects that software to all the others.
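To put rough numbers on this, the short sketch below counts the translators needed under each approach. It is an illustrative calculation only and not part of the BHoM; the function names are hypothetical, and it assumes one two-way translator per pair of tools in the direct case and one adapter per tool in the common-model case.

```python
def pairwise_translators(n_tools: int) -> int:
    """Two-way translators needed if every pair of tools is linked directly."""
    return n_tools * (n_tools - 1) // 2


def hub_translators(n_tools: int) -> int:
    """Adapters needed if every tool only talks to one central common model."""
    return n_tools
```

For 30 software packages, `pairwise_translators(30)` gives 435 direct translators, against 30 adapters for `hub_translators(30)`. Adding a further package costs one new adapter rather than 30 new translators.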

The solution: The BHoM

The BHoM consists of a collection of schemas, functionalities and conversions with the following three main characteristics:

• It attempts to unify the “shape” of the data

• It is crafted as software-agnostic

• It is open source so that everyone can contribute and use it

Currently, the BHoM has over 1,200 object models with an extendable data dictionary and adapters to over 30 different software packages.

With the BHoM, we’ve refined and enhanced our approach to structural design.

Once the architectural model is received, using the BHoM we can quickly and precisely build several Finite Element Analysis (FEA) structural models for conducting structural analyses.

It’s possible to clean and rationalise the original geometries for specific purposes and assign/update attributes to all objects based on the results of both design and coordination with other disciplines.

Finally, the BIM model of the structure can be generated in an algorithmic manner.

BHoM in practice

It’s often thought that computational and parametric design is only applicable to the very early stage of a project that relies on very complex geometry.

The reality is, computational design is greatly beneficial at every stage: from the conceptual feasibility study to the detailed design of steel connections.

At Buro Happold, we use the BHoM to help us address multiple stages throughout a project, as demonstrated in the following case study examples which focus on the re-development of a desalination plant in Saudi Arabia into a huge museum.

Find this article plus many more in the Nov / Dec 2024 Edition of AEC Magazine


Modelling the existing and the new

Let’s see how a computational workflow applies to the modelling and analysis of existing structures making use of the BHoM.

For the Saudi Arabian project, all we had was a set of scanned PDF drawings of the existing structures.

Within a couple of months, we had to build accurate Finite Element Models for each of them and run several feasibility studies against the new proposed loadings.

A parametric approach was vital. Therefore, we developed a computational workflow that allowed us to create the geometric models of all the built assets in Rhino via Grasshopper by tracing the PDF drawings, assigning them with metadata and pushing them via BHoM into Robot to carry out preliminary analyses and design checks.

This approach saved us a great deal of time and effort compared with a more traditional workflow.

Moving on to the next stage of the project, we needed to test very quickly many different options for the proposed structures, by modifying grids, floor heights, beams and column arrangements, as well as playing with the geometry of arched trusses and trussed mega-portals.

Again, going for a computational approach was the only way to face the challenge and we developed a large-scale algorithm in Grasshopper.

By pulling data from a live database in Excel and making use of an in-house library of clusters and textual scripts, this algorithm was able to leverage the capabilities of the BHoM to model the building parametrically in Rhino, push it to Robot for the FEA and finally generate the BIM model in Revit – all in a single parametric workflow.

Managing data flow: BIM – FEA

The further we move into the later stages of the project, the more we can see that computational workflows benefit not only geometry generation but also data management and design calculations.

At Stage 03 and 04, we needed to transfer and modify very quickly the huge sets of metadata assigned to every asset within our BIM models, while also being able to test them from a design perspective in Finite Element software.

Again, we developed an algorithm in Grasshopper leveraging the BHoM to allow for this circular data flow from BIM to analysis software – Revit and ETABS in this instance.

This made it possible to test and update all our models quickly and precisely, notwithstanding the sheer amount of data involved.
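The core of such a round trip can be sketched in a few lines: attributes coming back from the analysis side are merged into the BIM-side elements by a shared identifier, and only the elements that actually changed are flagged for pushing back. This is a minimal sketch in plain Python, not the BHoM API or our Grasshopper definition; the `Element` class, the `merge_attributes` function and the attribute names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Element:
    """Hypothetical stand-in for an element shared by the BIM and FE models."""
    element_id: str
    attributes: dict = field(default_factory=dict)


def merge_attributes(bim: Dict[str, Element], updates: Dict[str, dict]) -> List[str]:
    """Apply attribute updates (keyed by element id) to the BIM-side elements.

    Returns the ids of elements whose attributes actually changed, so that
    only those need to be pushed back to the authoring tool.
    """
    changed = []
    for element_id, new_values in updates.items():
        element = bim.get(element_id)
        if element is None:
            continue  # element exists only on the analysis side; skip it here
        before = dict(element.attributes)
        element.attributes.update(new_values)
        if element.attributes != before:
            changed.append(element_id)
    return changed
```

Matching on a stable identifier, rather than on geometry, is the assumption that keeps a loop like this repeatable each time either model is updated.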

Interdisciplinary coordination

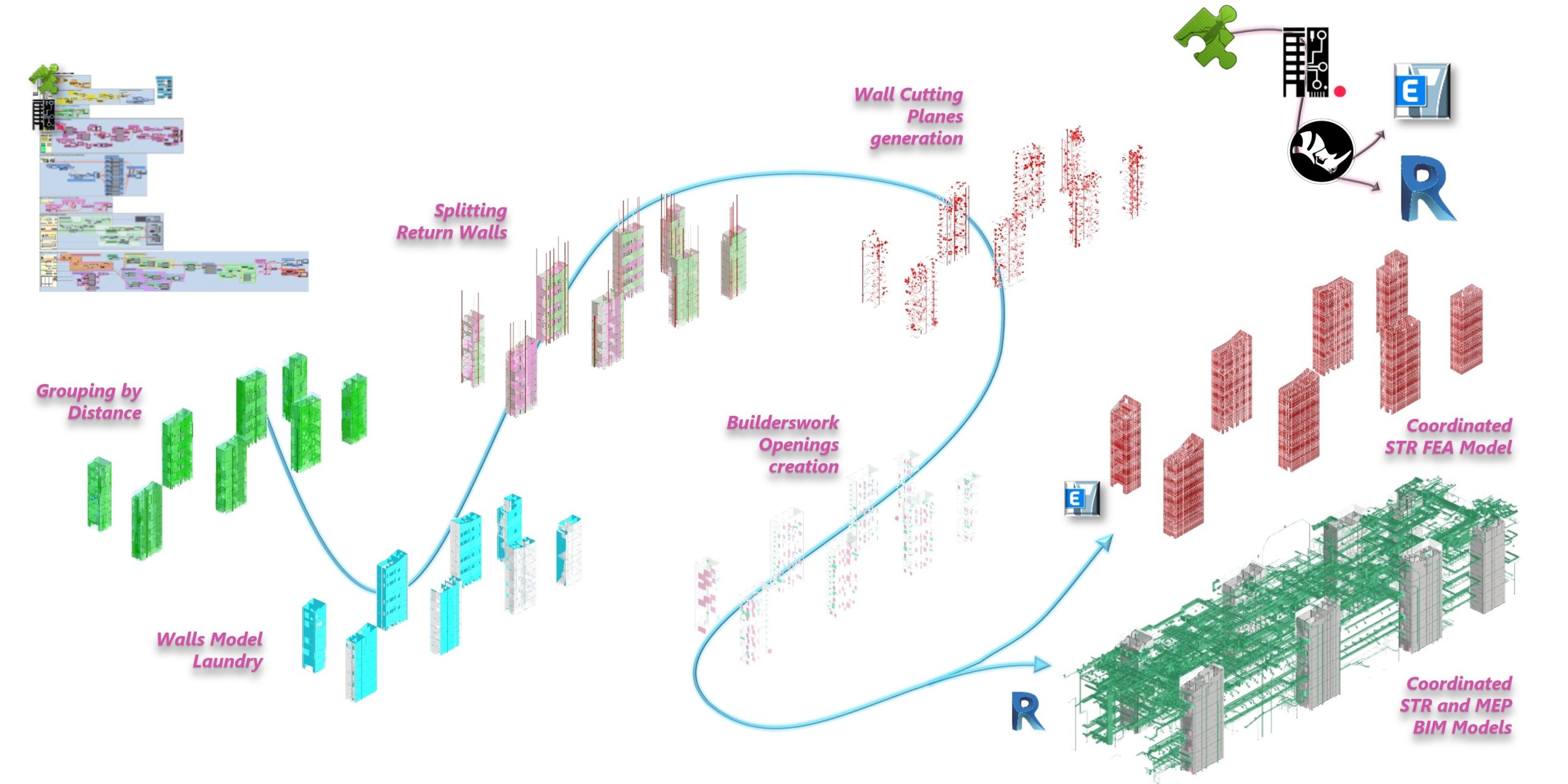

As usual, as the project moves forward, coordination with the Mechanical, Electrical and Plumbing (MEP) engineers starts to ramp up, and when structures are big and complex it becomes even more difficult. The challenge we had to face was intimidating. We had eight concrete cores, 45m tall, more than 9,000 MEP assets for the building and around 1,500 builderswork openings to be provided in the core walls to allow them to pass through.

On top of this, we had the need to specify openings of different sizes depending on different requirements based on the type of MEP asset, as well as the need to group and cluster openings based on their relative distance and other design criteria.

Again, a high level of complexity and a huge amount of data to deal with. Indeed, a computational approach was needed.

Using Grasshopper, BHoM and Rhino.Inside.Revit, we developed an algorithm, graphically represented below.

Through grouping operations, model laundry algorithms and the parametric modelling of the builderswork openings, we were able to generate parametrically the BIM model of the cores provided with the required builderswork penetrations.

In parallel with this, the algorithm also generated the corresponding FE model of the core walls, so the structural feasibility of the penetrations could be checked before incorporating them in Revit.

The algorithm detected the intersections between pipes and walls, then generated openings around each intersection of different size and colour depending on different input criteria. Then, using a fine-tuned grouping algorithm, it clustered and rationalised them into bigger openings, wrapping all of them together based on user-input criteria.
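The sizing and grouping steps described above can be sketched roughly as follows. This is a simplified illustration in plain Python, not the Grasshopper algorithm used on the project: openings are treated as axis-aligned rectangles in the wall's local 2D plane, the clearance values per asset type are invented for the example, and the grouping rule (merge any two openings closer than a given gap into their bounding rectangle) is only one assumed criterion among those a real workflow would apply.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Opening:
    """Axis-aligned rectangular opening in a wall's local 2D plane (metres)."""
    u_min: float
    v_min: float
    u_max: float
    v_max: float


# Hypothetical clearances per MEP asset type, in metres (illustrative values only).
CLEARANCE = {"pipe": 0.05, "duct": 0.10, "cable_tray": 0.075}


def opening_for(asset_type: str, u: float, v: float, width: float, height: float) -> Opening:
    """Size an opening around an intersection centred at (u, v)."""
    c = CLEARANCE[asset_type]
    return Opening(u - width / 2 - c, v - height / 2 - c,
                   u + width / 2 + c, v + height / 2 + c)


def gap(a: Opening, b: Opening) -> float:
    """Clear distance between two rectangles (0 if they touch or overlap)."""
    du = max(a.u_min - b.u_max, b.u_min - a.u_max, 0.0)
    dv = max(a.v_min - b.v_max, b.v_min - a.v_max, 0.0)
    return (du ** 2 + dv ** 2) ** 0.5


def merge(a: Opening, b: Opening) -> Opening:
    """Bounding rectangle wrapping both openings."""
    return Opening(min(a.u_min, b.u_min), min(a.v_min, b.v_min),
                   max(a.u_max, b.u_max), max(a.v_max, b.v_max))


def cluster(openings: List[Opening], max_gap: float) -> List[Opening]:
    """Repeatedly merge any two openings closer than max_gap until none remain."""
    result = list(openings)
    merged = True
    while merged:
        merged = False
        for i in range(len(result)):
            for j in range(i + 1, len(result)):
                if gap(result[i], result[j]) < max_gap:
                    combined = merge(result[i], result[j])
                    result = [o for k, o in enumerate(result) if k not in (i, j)]
                    result.append(combined)
                    merged = True
                    break
            if merged:
                break
    return result
```

With two pipes 0.3m apart and a duct several metres away, a `max_gap` of 0.3m would wrap the two pipe openings into a single larger opening and leave the duct opening on its own. Re-checking after every merge matters, because a merged opening can come within range of a neighbour that neither of its parts was close to.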

Finally, after testing the openings in the Finite Element software, the algorithm pushed them into Revit as Wall Hosted Families and a live connection between the Rhino and the Revit environment streamlined any update process in parallel.

Producing large data sets

Moving even further into detailed design, the amount of data to deal with on a project of such scale becomes more and more overwhelming.

This is what we had to face when dealing with the design of the connections. Although the design was subcontracted to another office, we faced the challenge of providing all the connection design forces in a consistent and comprehensive format, in both textual and graphical form.

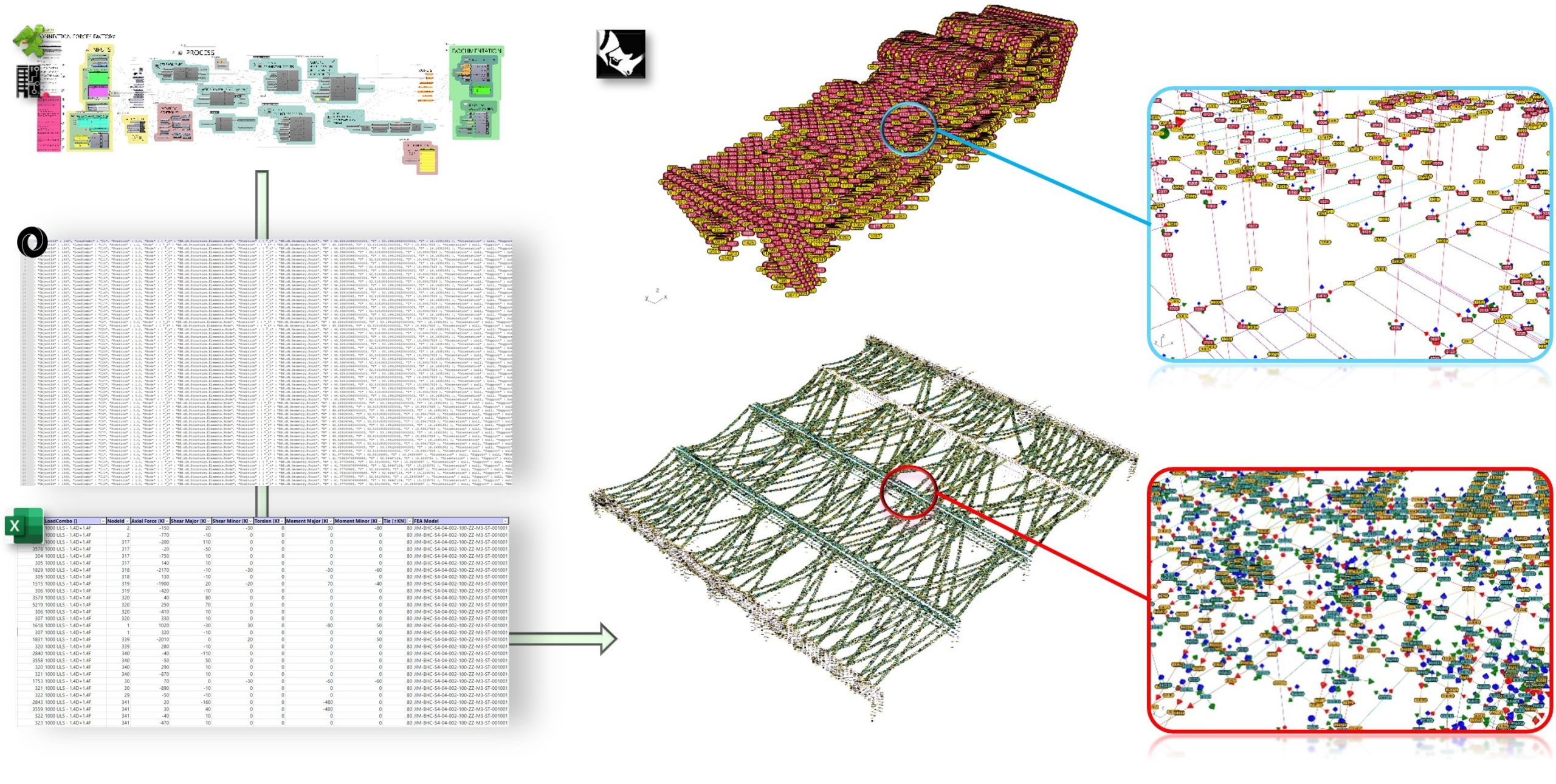

Indeed, this is not an easy task, especially when dealing with around 35,000 connections, 60 load combinations, 2,000 different frame inclinations and six design forces per connection, with models spanning three different finite element software packages (ETABS, Robot, and Oasys GSA).

We had to deal with 12.6 million pieces of data and we had to do it very quickly, being able to update them on the fly. Again, a computational workflow was required.
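The 12.6 million figure follows directly from the counts quoted above, as this short check shows. The variable names are ours for illustration; the frame inclinations and the number of software packages do not enter the product.

```python
connections = 35_000
load_combinations = 60
forces_per_connection = 6

# One value per connection, per load combination, per force component.
total_values = connections * load_combinations * forces_per_connection
print(f"{total_values:,}")  # 12,600,000
```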

Via Grasshopper and the BHoM, we developed an algorithm to extract, post-process and format the connection forces from the Finite Element models of all the assets of the project, serialise them in JSON, save them in properly formatted Excel files and show them graphically in corresponding Rhino 3D models via tagging and attributes assignment.

All this information was sent out for the design to be carried out by other parties.
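The serialisation step can be illustrated with a minimal sketch using only the Python standard library. It is not the BHoM serialiser or the project's actual schema: the `ConnectionForces` record, its field names and the choice of three forces plus three moments as the six components are assumptions made for the example, and the Excel export and Rhino tagging are left out.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class ConnectionForces:
    """Hypothetical record: six force components for one connection and load combination."""
    connection_id: str
    load_combination: str
    source_software: str  # e.g. "ETABS", "Robot" or "GSA"
    fx: float  # forces, assumed here to be in kN
    fy: float
    fz: float
    mx: float  # moments, assumed here to be in kNm
    my: float
    mz: float


def to_json(records: List[ConnectionForces]) -> str:
    """Serialise records to a JSON array, sorted for stable, diff-friendly output."""
    ordered = sorted(records, key=lambda r: (r.connection_id, r.load_combination))
    return json.dumps([asdict(r) for r in ordered], indent=2)


def from_json(text: str) -> List[ConnectionForces]:
    """Rebuild records from the JSON produced by to_json."""
    return [ConnectionForces(**item) for item in json.loads(text)]
```

Keeping one flat record per connection and load combination, whichever package the forces came from, is what would let results from all three analysis tools be issued in a single consistent format.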

Conclusions

Applying a specialised approach that relies on algorithmic methodology and leverages state-of-the-art computational tools, such as the BHoM, enables us at Buro Happold to deliver comprehensive and advanced structural solutions, ensuring efficiency, sustainability and optimal performance across all stages of the project.
