In the run-up to Autodesk University 2020, Greg Corke caught up with Cobus Bothma, director of applied research at KPF, to find out why he’s so excited by Nvidia’s new virtual collaboration platform, Nvidia Omniverse.

The future of architectural design will rely on the collective accessibility of all design data and geometries, in one accurate visualisation and simulation application. Those are the words of Cobus Bothma, director of applied research at global architectural firm Kohn Pedersen Fox (KPF), who has been exploring Nvidia Omniverse, the new virtual collaboration platform that places a big emphasis on real-time, physically accurate visualisation.

Bothma is excited by how the platform could help bring together KPF’s global offices to work simultaneously on projects. “It shows great potential to allow multiple contributors, from across the entire design team using an array of applications, to collaborate effectively – wherever they’re currently working.”

Nvidia Omniverse is built around Pixar’s Universal Scene Description (USD), an open source file format with origins in visual effects and animation. With the ability to include models, animations, materials, lights, and cameras, it can be used to share a variety of viz-focused data seamlessly between 3D applications.

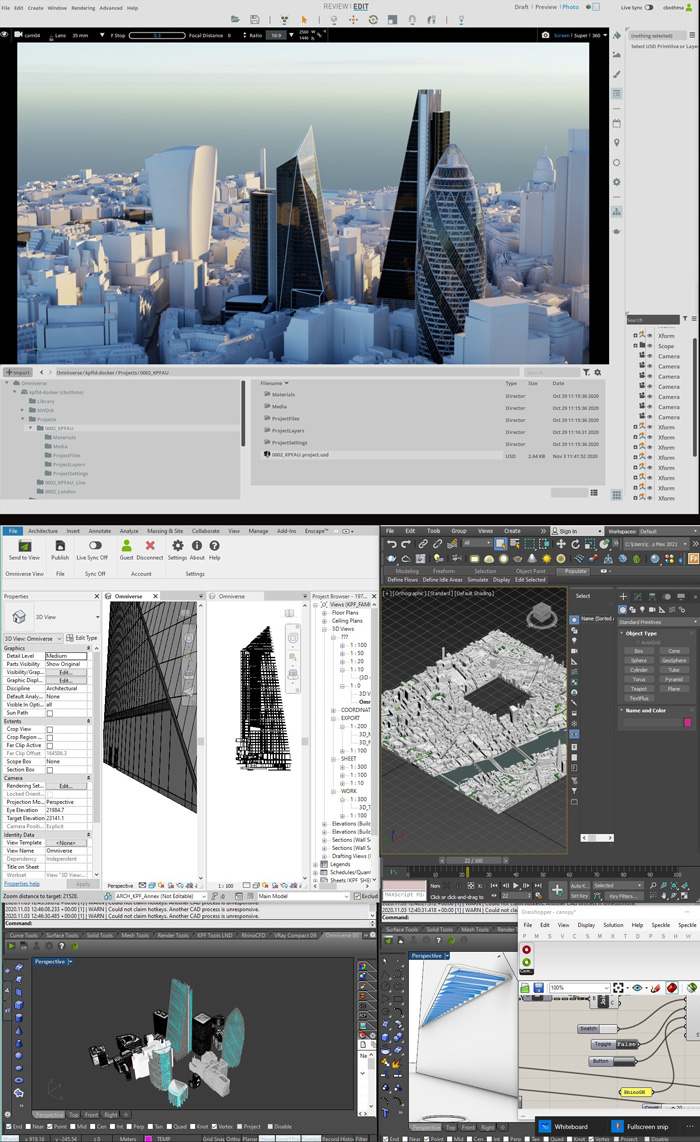

In the AEC space, Nvidia Omniverse works with several 3D design tools, including SketchUp, Revit, and Rhino, as well as viz tools 3ds Max, Unity, Maya, Unreal Engine, Houdini and (soon) Blender. Importantly, it replaces traditional file-based import/export workflows with data flowing freely from each 3D application via a plug-in ‘connector’, which creates a live link to the Omniverse ‘nucleus’.

Once the link is established, and the initial model is synced, the connectors only transmit what has changed in the scene, allowing everything to be ‘real time and dynamic’. Move a wall in Revit, for example, and it will update live in Omniverse, along with any other connected application. It means teams can use whichever tool best suits the design or modelling task at hand and switch seamlessly between them.
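The article doesn’t say how the connectors detect and package changes, so the following is only a hypothetical Python sketch of the general delta-sync idea described above: compare a previous and a current snapshot of a scene and send just the differences. The flat dictionary scene model and the `compute_delta` / `apply_delta` functions are illustrative assumptions, not part of the Omniverse or USD APIs.

```python
from typing import Any, Dict, List

# Hypothetical, simplified scene model: prim path -> attribute name -> value.
# Real USD stages are far richer (layers, composition, time samples).
Scene = Dict[str, Dict[str, Any]]
Delta = Dict[str, Any]


def compute_delta(previous: Scene, current: Scene) -> Delta:
    """Describe only the changes needed to turn `previous` into `current`."""
    added = {path: dict(attrs) for path, attrs in current.items() if path not in previous}
    removed: List[str] = [path for path in previous if path not in current]
    modified: Dict[str, Dict[str, Any]] = {}
    for path, attrs in current.items():
        old = previous.get(path)
        if old is None or old == attrs:
            continue  # new prims are covered by `added`; unchanged prims send nothing
        modified[path] = {
            # Attributes that are new on this prim or whose value changed
            "set": {k: v for k, v in attrs.items() if k not in old or old[k] != v},
            # Attributes that no longer exist on this prim
            "unset": [k for k in old if k not in attrs],
        }
    return {"added": added, "removed": removed, "modified": modified}


def apply_delta(scene: Scene, delta: Delta) -> Scene:
    """Apply a delta to a copy of a scene, as a receiving application might."""
    updated = {path: dict(attrs) for path, attrs in scene.items()}
    for path in delta["removed"]:
        updated.pop(path, None)
    for path, attrs in delta["added"].items():
        updated[path] = dict(attrs)
    for path, change in delta["modified"].items():
        prim = updated.setdefault(path, {})
        prim.update(change["set"])
        for key in change["unset"]:
            prim.pop(key, None)
    return updated


if __name__ == "__main__":
    before = {
        "/Building/Wall_01": {"position": (0.0, 0.0, 0.0), "height": 3.2},
        "/Building/Door_01": {"position": (1.0, 0.0, 0.0)},
    }
    # Moving one wall changes a single attribute on a single prim
    after = {
        "/Building/Wall_01": {"position": (0.5, 0.0, 0.0), "height": 3.2},
        "/Building/Door_01": {"position": (1.0, 0.0, 0.0)},
    }
    delta = compute_delta(before, after)
    print(delta["modified"])
    assert apply_delta(before, delta) == after
```

In the demo, moving one wall produces a delta containing a single changed attribute on a single prim, which is what keeps live syncing cheap once the initial model has been transferred. A production connector might instead hook into the authoring tool’s own change events rather than diffing whole snapshots; that too is an assumption here.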

“With this sort of technology, and the way we are connecting the tools together, it changes our view of the workflows,” says Nicolas Fonta, Sr. Product Manager for AR/VR/MR at Autodesk, who sees Omniverse as a way of breaking down the barriers between Autodesk products and others. “It’s no longer a waterfall file conversion from one tool to another down the line.

“All of those tools are sharing this common representation of the project. Omniverse is one view of it, which is beautiful, but as you’re tweaking things in Revit, it’s one common project that’s sitting on this USD foundation that is exchanged between the different tools.

“It’s no longer about ‘I need to take my Revit file, send it to Omniverse, send it to [3ds] Max’, they’re all just the common representation.”

Aggregated models can be viewed in Omniverse View, an Omniverse App with a toolkit designed specifically for visualising architectural and engineering projects using physically-based real-time rendering with global illumination, reflections and refractions.

View includes libraries of materials, skies, trees and furniture, and painting tools to scatter large amounts of assets like trees and grass. Dynamic clouds and animated sun studies are also included, along with section tools. More functionality will be added as the platform evolves, and it’s also possible to create custom features using C++ or Python extensions.

Importantly, to help speed up decision-making at any phase of the design process, Omniverse View allows all project participants, and not just users of 3D authoring tools, to navigate the model, as well as modify and render content.

“Things happen much quicker and rapidly in the boardrooms, in the meeting rooms, at design phases, especially now,” says Bothma. “We get to a point where things have to happen within a click. And this is kind of one of those things that does allow that to happen.”

Kohn Pedersen Fox

KPF is one of Nvidia’s ‘lighthouse’ accounts and has been exploring the potential of Omniverse for several months. However, as the technology is currently in beta and, as Bothma explains, undergoing a ‘rapid rate of development’, it’s not yet being used on live projects.

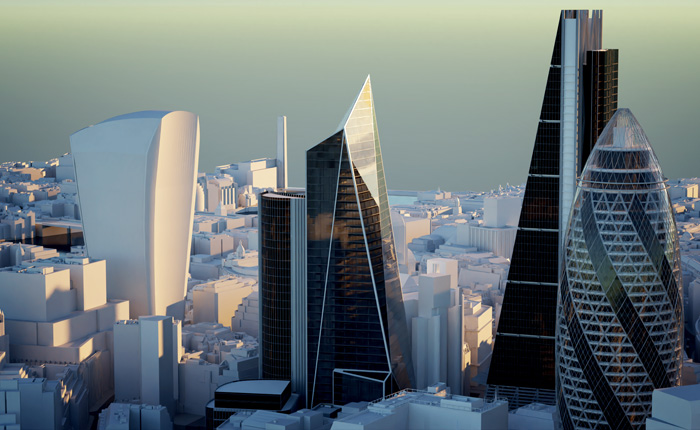

To demonstrate how it could help optimise KPF’s collaborative workflows, Bothma showed AEC Magazine an example from its 52 Lime Street project, also known as ‘The Scalpel’, a striking new office tower in the heart of the City of London.

Rhino (with Grasshopper) was used for the design of the canopy and immediate landscaping; 3ds Max to provide some context using a section of KPF’s London model; and Revit for the construction document model, including the façade and full internals of the 42-storey building. “The Revit file could be sitting in a London office and the Rhino model could be in the New York office,” says Bothma.

“When you publish, it starts collating automatically on the server side and it does it quite cleverly where it actually names the file names and the application, and then the .USD, so you always know where the source was coming from, and you can keep this up to date.”

Visual intelligence

Nvidia Omniverse isn’t just about optimising the flow of design data to improve interoperability and team collaboration. Bothma believes there’s huge value in giving teams much earlier access to models rendered using an accurate physical representation of light. “It’s getting to see exactly what the project is going to look like much quicker, from probably applications that didn’t always play nicely together.”

Scenes can be viewed in Omniverse ‘View’, which includes a real-time ray tracing mode as well as an RTX path-traced renderer, which can be accelerated by multiple Nvidia RTX GPUs and coupled with ‘physically accurate’ MDL materials and lighting.

The path-traced stills that Bothma produced for his presentation are quite simple but were rendered in seconds using a workstation with a single Nvidia Quadro RTX 6000 GPU. “We didn’t do much on materials, we didn’t do much on lighting, I literally opened up the sun, dragged the slider a little bit, and then screen grabbed it, and that’s what you kind of get straight out of the box as a designer’s working model.”

But Bothma says there is potential to take things much higher, describing the render quality from Omniverse as ‘top of the line’, also noting that it works with Substance Designer for advanced materials. “We can now actually use Substance Designer with Revit and Max and Rhino at the same time, in Omniverse environments.”

Bothma envisions Omniverse scenes could become dynamic assets used throughout the entire design process. “This would be where I’d do my final renders, this would be where I do design discussions, possibly streaming in the future, testing variations, looking at shadow studies, whatever the case may be – even running my animations and so forth, that’s kind of where you want to go.”

“We don’t like hopping around too much, so the idea is really like what some of the CG and VFX companies are doing; they’re running into one pipeline where all the data and the geometry is aggregated and that’s the pipeline that then will produce the images – at whatever level you want in whatever format you kind of want.”

Streaming to any device with Nvidia Omniverse

Omniverse View can run on an Nvidia RTX powered desktop or mobile workstation, allowing users to get an interactive viewport into the shared scene. However, the real power of the platform comes into play when using Nvidia RTX Server, a reference design available from a range of OEMs with multiple Nvidia RTX GPUs.

RTX Server can perform multiple roles. It not only provides buckets of processing power for path tracing, but with Nvidia Quadro Virtual Data Center Workstation (Quadro vDWS) software, users can access the Omniverse platform using GPU-accelerated virtual machines. It means all collaborators can view projects in full interactive ray traced quality on low-powered hardware – be it a laptop, tablet or phone.

For Bothma this is one of the most exciting developments, and he’s looking forward to being able to stream Omniverse projects live to any lightweight device. “We can’t put a big [GPU-accelerated] machine in everybody’s face, the whole time,” he says.

One might presume that Bothma sees this as an opportunity to improve communication with clients, but this isn’t the priority. “When I look at technology and the use of it at KPF I always look at team collaboration first, team communication, and the second thing is clients,” he says.

“We don’t have a top down design necessarily, we enable everybody to give their feedback and design input, and so forth,” he says, adding that Omniverse streaming will allow principals, directors or project managers who are not au fait with 3D applications to take a look for themselves. “It’ll give them the ability to go and say ‘well if a designer says the sun is going to shine on this, for example, and the glass is not going to be too blue’, they’re going to be able to say ‘well let me have a look quickly and open my iPad and go in, adjust the sun go look at it.’”

“Democratisation of technology in the industry is one of the big things we’re driving right now, how to get more people to use the technology without having to be coders and scripters or visualisation experts. And we’re seeing it happen,” he says.

Bothma has several options for where to host Omniverse. To date, most of the testing has been done using on-premises hardware, but AWS [Amazon Web Services] is also an option. “I know some other companies – Woods Bagot, for example – have been doing some testing on the [Omniverse] View side on AWS with quite good results,” he says.

Whichever route KPF ends up taking, the Omniverse nucleus will certainly be centralised and maybe imaged in one or two locations. “That’s the real benefit to us, for someone in Shanghai to be able to view this model that’s been published by three of our other offices and be able to see it the same as we see it,” says Bothma. “And they don’t have to wait for large files and [wonder] ‘do we have the right version of whatever software was used in the past.’”

Nvidia Omniverse – beyond viz

Always on the front foot, Bothma is already looking beyond core AEC workflows and thinking about where Omniverse could take KPF in the future.

“It has the capabilities to do a lot more than just architectural collation and so forth – robotics, and a whole bunch of other things,” he says. “And those things are going to be very appealing to me because we’re going to start looking at not just how we look at a building from its visual aspect, we might want to start looking at how we construct a building, and that might be even with robotics, and the use of machine learning and so forth. And, and, and…”