If there’s anything to learn from living in the 21st century, it’s that change is inevitable. Technology evolutions are now planned in by the quarter, but occasionally they can come from left field. The technology to design and fabricate high-quality engineered buildings is entering a disruptive phase. How will large AEC firms cope?

It may come as a surprise, but in the late 1970s and early 1980s there were many architectural and construction firms in London using 3D computer modelling systems to generate plans, sections, elevations and drawings of their buildings.

Software such as BDS, RUCAPS, Sonata and GABLE, running on mainframe and minicomputers, were the first BIM solutions. Compared to the tools of today they may well appear rudimentary, and they were certainly very expensive, but they were actually very advanced for the time. It goes against the stereotype that the industry is not very progressive in adopting new technologies and applying them in practice.

Then came the relatively low-cost IBM PCs in the 1980s and, together with cheap drafting software, such as AutoCAD, most of these mainframe dinosaurs were relegated to the scrapheap, and the architectural design world went ‘a little flatter’. However, none of the original concepts died, living on in ArchiCAD, AutoCAD AEC, Architectural Desktop and then culminating in the arrival of Revit in 2000.

In those early days of Computer Aided Design (CAD), technology changed at a snail’s pace. We would literally have years in between software releases and meeting someone with a graphics card capable of a massive 256 colours was once a truly rare event! Today’s AEC firms are facing a very different landscape: evolution is built in, software upgrades and updates can come every six months or less, hardware can now mean VR headsets and software can be online as a service.

Design IT directors of large AEC firms have to stay up to speed with what’s coming, while managing what they have and preparing for what they will have in the future in order to remain competitive.

Of course, all this CAD stuff is not just technology for technology’s sake; like in any business today, technology is a weapon. For instance, in 2003, Foster + Partners’ Swiss Re (aka the Gherkin) was a grand display of Sir Norman Foster’s trademark diagrid glass façade, adding to London’s and the world’s architectural vocabulary. The building was designed and modelled in MicroStation, with Arup modelling the steel.

Foster + Partners’ in-house team of geometry experts, the infamous ‘specialist modelling group’, wrote a program to produce take-offs from the model to automate the creation of panelisation drawings. At the time, I knew of several firms which begged Bentley Systems to write a similar script for them, so they too could compete in the new vernacular with automated panelisation take-offs, which Bentley duly did. It’s now not unusual to find programmers in design practice and, to this day, Foster + Partners has the biggest in-house specialist team (but still less than 1% of total staff), producing tools for its designers, solutions for geometrical problems and developing in-house applications.

Technology stacks

Today’s design IT directors live in a complex world. For most, budgets are tight, but they still have to develop their BIM technology stacks for multi-CAD environments, connecting and managing distributed teams and data, in different countries, with varying skill sets, complying with common standards and conforming to local standards. All this while working on projects and collaborating with other firms who might take a completely different approach to just about everything.

Drilling down further, there are decisions to make on which platforms to use for design, document management, document distribution, common data environment (CDE), collaboration hub, security, visualisation, virtual reality (VR), augmented reality (AR), 3D printing and not to forget the workstation, mobile hardware and cloud services. With so many choices and so many moving parts, the day-to-day job involves the spinning of a lot of plates.

With the increased update cycles, new software needs to be checked. All too often the technology stack can be undermined by just one or two components updating automatically and breaking essential links. Like painting the Forth Bridge, it takes so long to build a stack that you need to start again when you have finished. It’s the pain that comes with building these systems which leads to the stickiness of solutions that ‘just work’ and is, in some ways, the attraction of SaaS offerings, which run off-site and take some of that trouble away.
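To make the ‘one component updates and breaks a link’ problem concrete, here is a minimal sketch of the kind of check a design IT team might run before rolling out an update. It compares the versions installed on a workstation against the combinations that have actually been tested together. The component names and version numbers are hypothetical and do not refer to any real product or vendor tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """A tested integration between two components of the stack."""
    source: str
    target: str
    tested_pairs: frozenset  # (source_version, target_version) pairs known to work


def broken_links(installed, links):
    """Return the links whose installed version pair has not been tested."""
    problems = []
    for link in links:
        pair = (installed.get(link.source), installed.get(link.target))
        if pair not in link.tested_pairs:
            problems.append((link.source, link.target, pair))
    return problems


# Hypothetical component names and version numbers, for illustration only.
links = [
    Link("modeller", "viz_plugin", frozenset({("2020.1", "2.6"), ("2020.2", "2.6")})),
    Link("modeller", "cde_connector", frozenset({("2020.1", "1.4")})),
]
installed = {"modeller": "2020.2", "viz_plugin": "2.6", "cde_connector": "1.4"}

for source, target, pair in broken_links(installed, links):
    print(f"untested combination: {source} -> {target} at versions {pair}")
```

In this made-up stack, the modeller has moved to a point release that was validated against the visualisation plug-in but never against the CDE connector, so only that link is flagged.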

However, with change being inevitable, today’s generation of design IT directors are constantly scanning the market for technology to solve some of their current problems, improve workflows or prepare for any possible dynamic technology shift, such as 2D to BIM or desktop to cloud.

Some of the larger firms might have a hand-picked R&D team, but most do not, and the task might fall to one individual. In times of significant change that responsibility can hang heavily. Process change can come at a productivity loss and an increase in risk in project delivery – the full buy-in of the management team is essential. It’s another reason why the industry has tended to change slowly. But there are some changes which simply cannot be ignored.

Having a Fab time

From talking to many AEC firms over the last year, it’s clear that a number of changes are coming together, which could have a fundamental impact on the way buildings are designed and fabricated. Whatever you want to call it, Digital fabrication, Design for Manufacture and Assembly (DfMA), Prefab, Modular or Precision Manufactured Housing, there is a general consensus that architectural design systems need to be able to better integrate with automated off-site construction machines.

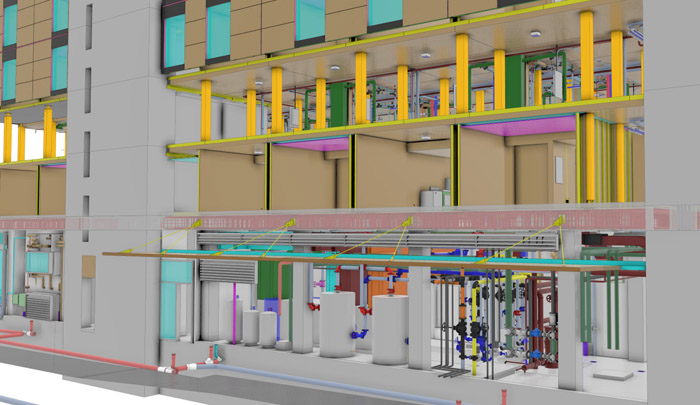

The bad news is that the BIM modellers which we have all standardised on, even though they produce 3D models, were never intended to produce accurate geometry to drive CNC (Computer Numerical Control) machines. They would also likely struggle to handle the size of models required for the level of detail that is needed to generate assemblies with detailed bills of materials (BOMs).
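For readers less familiar with manufacturing practice, a BOM is essentially a roll-up of every part in a nested assembly. The sketch below shows the idea in a few lines of Python, assuming a made-up assembly tree; the part names and quantities are purely illustrative and are not taken from any real factory or product.

```python
from collections import Counter

# Hypothetical assembly tree: each assembly maps to (child, quantity) pairs.
# Names that do not appear as keys are treated as individual parts.
ASSEMBLIES = {
    "wall_panel": [("stud", 6), ("sheathing_board", 2), ("screw", 48)],
    "floor_cassette": [("joist", 8), ("deck_board", 3), ("screw", 64)],
    "module": [("wall_panel", 4), ("floor_cassette", 1)],
}


def bill_of_materials(item, quantity=1):
    """Recursively flatten an assembly into total part counts."""
    if item not in ASSEMBLIES:  # leaf part: nothing further to expand
        return Counter({item: quantity})
    totals = Counter()
    for child, child_qty in ASSEMBLIES[item]:
        totals += bill_of_materials(child, quantity * child_qty)
    return totals


bom = bill_of_materials("module")
for part, count in sorted(bom.items()):
    print(f"{part}: {count}")
```

Even this toy module resolves to hundreds of fixings, which hints at why model size becomes an issue once every stud and screw has to exist in the design model.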

All BIM modellers currently available were designed to automate the production of co-ordinated 2D drawing sets to be handed to builders to make. This isn’t the future. The UK already has a number of building factories being constructed, or up and working – Urban Splash, Legal & General and Berkeley Homes, to name but a few.

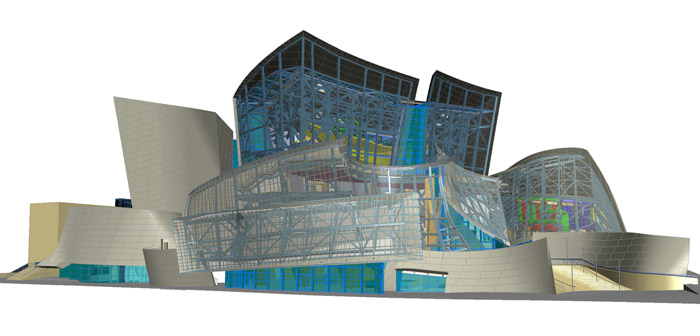

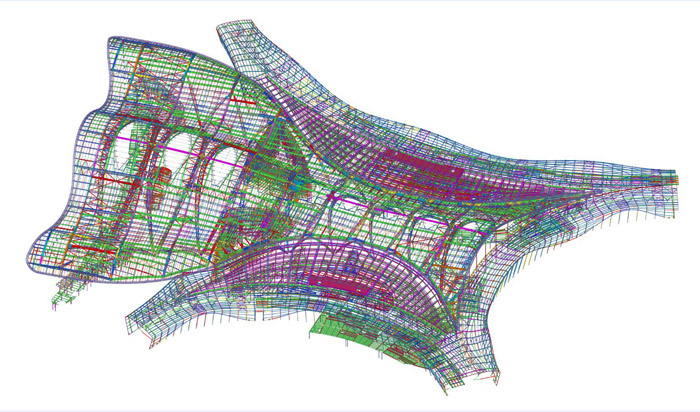

Digital fabrication is coming at a time when market-dominating products like Revit are 20 years old and are only receiving minor updates each year. Revit still mainly runs on one CPU core, hardly makes use of GPU acceleration, and gets very unwieldy for large models, often requiring them to be cut up. The net result is we are seeing a lot more activity in firms testing out alternative BIM products and even looking to software solutions which are popular in aerospace and automotive, such as Dassault Systèmes’ 3D Experience platform (previously better known as Catia), which is predominantly used by Frank Gehry and Zaha Hadid Architects (ZHA). While Catia is not used on every project at ZHA, it is used to send explicit 3D models to fabricators, bypassing 2D drawings, for feedback on manufacturability during the design phase. It’s something that other firms are also buying into, such as SHoP Architects in New York, which has worked on a number of digital fabrication projects.

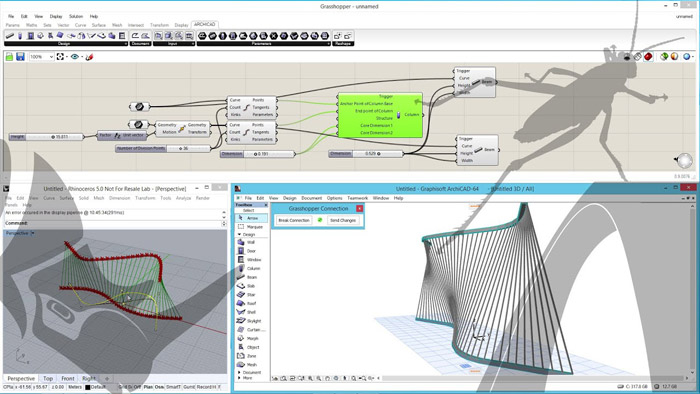

Many other ‘signature’ architectural firms are looking to re-evaluate everything from conceptual design to fabrication. McNeel Rhino and Grasshopper seem to be the weapon of choice for firms that design freeform architecture and Rhino now works in SketchUp, AutoCAD, ArchiCAD, Unity, BricsCAD and Revit. This new development is called Rhino Inside (which we review here).

Rhino Inside could potentially be seen as a liberating technology, as conceptual geometry created in Rhino with Grasshopper could be pulled out or injected into pretty much any of the main design and documentation tools. It would mean that Rhino becomes a common data environment of sorts, but the geometry is essentially ‘dumb’, maintaining little of the ‘I’ in BIM data.

However, when it comes to ‘dumb geometry’, machine learning advances mean companies like Bricsys have tools that could bring order out of the chaos. Its AEC modeller, BricsCAD BIM, offers a unique post-rationalisation capability, called ‘BIMify’, which can parse a dumb mesh model and intelligently work out what the BIM objects are, applying the correct IFC tags to elements it recognises, such as doors, walls, floors, slabs etc. Dumb geometry need not stay dumb for very long. This could be game changing as you could pull models of cities out of Unity and set BIMify loose in BricsCAD! I’m calling this ‘Réchauffé BIM’ as it’s possible to heat up any geometry, back to BIM.

Computational design or generative design has become a core design tool for many large firms, so if the base geometry stayed in Rhino, G-code could be easily generated from it and it could also feed the model and drawing creation, a role to which BIM is slowly being relegated.
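To give a flavour of what ‘generating G-code from geometry’ means at its very simplest, the sketch below turns a closed 2D outline into a short program of standard G-code moves. It is an illustration only, not how Rhino or any CAM package does it: the function name, cutting depth, safe height and feed rate are all assumptions, and a real toolpath would also need to deal with tool diameter, multiple passes and the dialect of the specific machine controller.

```python
def outline_to_gcode(points, cut_depth=-3.0, safe_z=5.0, feed=600):
    """Emit G-code that traces a closed 2D outline at a single cutting depth.

    points: sequence of (x, y) tuples in millimetres. cut_depth, safe_z and
    feed are illustrative defaults, not recommendations for a real machine.
    """
    points = list(points)
    if len(points) < 3:
        raise ValueError("a closed outline needs at least three points")
    x0, y0 = points[0]
    lines = [
        "G21",                           # units: millimetres
        "G90",                           # absolute coordinates
        f"G0 Z{safe_z:.3f}",             # retract to safe height
        f"G0 X{x0:.3f} Y{y0:.3f}",       # rapid move above the start point
        f"G1 Z{cut_depth:.3f} F{feed}",  # plunge to cutting depth
    ]
    # Cut to each remaining vertex, then back to the start to close the loop.
    for x, y in points[1:] + [points[0]]:
        lines.append(f"G1 X{x:.3f} Y{y:.3f} F{feed}")
    lines.append(f"G0 Z{safe_z:.3f}")    # retract clear of the material
    lines.append("M2")                   # end of program
    return "\n".join(lines)


# Hypothetical 1200 x 600 mm rectangular panel.
panel = [(0, 0), (1200, 0), (1200, 600), (0, 600)]
print(outline_to_gcode(panel))
```

The point is less the code than the dependency it exposes: the output is only as good as the coordinates going in, which is why geometry accurate enough for drawings is not necessarily accurate enough for a machine.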

Products like ArchiCAD have built-in links to Grasshopper to drive BIM objects from scripts. This workflow is being evaluated by many architects wishing to automate the building of BIM models from geometry definitions. Rhino Inside also brings that possibility to Revit (and any other 64-bit Windows application, which would include Unity and Blender).

Foster + Partners is a huge Rhino and Grasshopper customer. Francis Aish, Head of Applied Research and Development and a partner at Foster + Partners, explained how they keep the geometry in Rhino for as long as possible to enable dynamic changes to the building definition before handing it over to Revit for detailed documentation.

The company uses scripting to develop tools for its architects, as well as providing automation for laborious processes. Recently in Denmark, Aish demonstrated a real-time design evaluation VR program which his team had created on top of Unity. In our discussion Aish mused on the fact that many AEC firms were developing code to do the same things, reinventing the wheel, and that perhaps there should be a more open approach to sharing some of the tools developed, especially when it comes to assisting collaborative working.

While Rhino is a surface-only modeller, HOK has just announced it will be looking at BricsCAD BIM as an interesting Revit alternative and has become a BIM Alliance Partner of Bricsys. BricsCAD started life as an AutoCAD clone but now has a full ACIS solids-based BIM tool on top of it and the interesting BIMify machine learning capability.

Rise of programming

There are many ways to start a concept model but, to be honest, it’s probably the weakest part of the architectural software market. Common tools are Grasshopper, Dynamo, Rhino, SketchUp, Maya, massing in Revit or another BIM tool.

Some firms want to jump straight into BIM, some sketch and folks like Gehry crumple up paper. CAD does not like imprecision and conceptual design needs freedom, unless you know you are going to build a bunch of rectangles – in which case go straight to massing. BIM tools really need to improve at this phase, to capture artistic input.

The leading firms typically use scripting and programming early on to allow iterative form finding and the easy creation of complex geometry.

The popularity of Rhino, Grasshopper, Dynamo and Python programming has really created a different type of architect within practices. The computational designer can either develop tools for the rest of the team, or be the key driver in defining complex geometrical forms.

The visual programming style of wiring up mathematical ‘effects’ and ‘generators’ is an easy route into geometry-generating code and is frequently used at the core of project teams.

Before computational generators, 3D models would have to have been hand crafted and were complex and slow to edit. With the computer in control of generating forms and managing the complexity, the architectural vocabulary has again been expanded.

It has also created a divide between scripted geometry definitions and traditional BIM tools, which operate on higher-level components, such as walls, doors and windows. In geometry-led practices, the Rhino definition drives the BIM, which essentially becomes a documentation tool, not a design tool. For many, this documentation and 2D drawing phase is seen as a necessary evil, mainly for contract compliance and for communication with clients, but advanced fabricators and construction firms would sooner have the 3D model to check for manufacturability and accurate fabrication quotes.

Not all architectural firms need Rhino or complex NURBS curves, but still employ programming teams. Rob Charlton, chief executive of Space Group, and serial BIM entrepreneur (BIM Show Live, BIM Store, BIM Technologies) is based in Newcastle and has a team of four dedicated programmers in his practice.

Charlton explained that in the North East fees are lower and customers are more conservative and are focussed on value. He might not produce buildings with too many curves, but he uses his programming team to deliver on the opportunities he sees in extending the BIM data he creates or captures for clients by developing services.

His current focus is on developing a digital twin platform for facilities management (FM), built on Autodesk Forge tools, to enable the BIM model to drive efficiencies post build and get the data out of Revit and into a tool that non-CAD folks can use. In short, Space uses programmers to drive additional services revenues and occasionally to fill in gaps for functionality that Revit doesn’t provide.

Some large firms have no dedicated programming or scripting resources at all and rely on the skills of their talented design teams. They usually teach themselves or are active in communities.

Visualisation revolution

Another case in point, for a generational change which currently faces design IT directors, comes in the shape of our need for beautiful visuals. In this area we see possibly the most regular yearly changes. GPU and CPU development continues at breakneck speed. Every year it seems the previous chips are being bettered, making workstation choice critical to match software with machines of appropriate specifications. Do you invest in multi-core CPUs for traditional CPU rendering, or high-performance GPUs that can now be used for many different tasks, including real-time visualisation, ray tracing and VR? While the established base applications, 3ds Max and Maya, are again on low velocity development, we are seeing rapid advances from the key game engine players, Unity and Unreal. Promising instant visualisation and deep integrations into today’s BIM modellers, the future of viz seems to be in different applications.

With a product like Enscape, the real-time visuals you can get, essentially as a by-product of the design system, are quite stunning. Cobus Bothma, director of applied research at Kohn Pedersen Fox (KPF) Associates, explained how viz was moving from just being the presentation tool to becoming a valuable asset in analysing designs, for feedback into the project teams. Currently the KPF product stack means every designer has a copy of Enscape and some have Lumion. Bothma is also looking at deploying Twinmotion for producing images with really rich environments. Ultimately these Twinmotion models will directly feed into Unreal for the full viz and VR treatment. KPF then uses remote workstation technology to stream pixels to iPads for in-house design meetings.

VR and AR are set to be extremely important in future design workflows. While a lot of the current use cases are around customer presentations, Unreal and Unity, as well as a whole host of other AEC-focused real-time viz and VR tools, are all about expanding their appeal to designers.

BIM models, especially in Revit, are heavy, so game engines could also offer parallel workflows. By extracting the geometry as a lightweight mesh with multiple Levels of Detail (LoD), together with the metadata, they could be used for all sorts of downstream functions – clash detection, collaboration, design review, sections, elevations, quality assurance, and all types of VR and AR experiences, even on site. Importantly, as Unity and Unreal keep up with the very latest hardware, BIM data could instantly be streamed out to multiple devices – mobile, desktop, web, VR or AR. This clearly doesn’t leave a lot for the original BIM model, and even less if that BIM definition can’t drive digital fabrication. Food for thought.

In my conversations with Aish from Foster + Partners, he explained how architects really provide experiences and sell these to clients. In many respects, BIM asks architects to make deep structure-based decisions in the concept phase, when all this could be worked out later. Perhaps returning to a time where sketches and artist renditions were enough to get the client’s buy-in; the digital equivalent will be placing the customer in an augmented environment.

Financial drivers and locks

With increasing costs for enterprise licences from Autodesk which, let’s face it, has the lion’s share of UK and US markets, the cost of software is also becoming a major driver. Firms are unable to leave subscription without losing access to all licences, but are very aware of how much they are paying and how little development their core BIM tool, Revit, has received. When one software vendor can dictate tough contract terms, AEC firms are made painfully aware, every three years, of how little leverage they have. This is another reason for the current interest in evaluating what else is on the market.

Autodesk is busy moving to the cloud and has decided the next generation of BIM modeller will reside there. Project Plasma is the start of this core technology but has suffered delays and is probably years away. This is happening at the same time that digital fabrication is becoming a near-future consideration. This is probably not a good time to have your development ‘gap’.

The industry is looking to liberate itself from proprietary file formats, which came with BIM modellers. In a federated working environment, poor interoperability leads to data wrangling, a potential to lose valuable information, and it impedes collaboration. Architectural practices have consciously expanded their toolsets to use best in class, so the search for industry standard CDEs goes on.

Do It Yourself

We find ourselves at a crossroads for AEC development. We have an old generation of desktop applications, with increasing costs and a lack of interoperability, while looking at the oncoming requirements and opportunities of digital fabrication, artificial intelligence (AI), generative design, and defining new workflows.

The large AEC firms have always tended to use commonly available platforms in which to do their work, but some have put forward the notion that the needs of the top 20% will rarely get a look in when a software vendor is aiming to deliver features for the 80%. Some have even mused that with BIM being a drawing process and manufacturing CAD systems being overkill, they should collectively design their own concept to fabrication system. There are enough components and technologies available to get a good head start, but the fundamental question would remain; should they be architects or software developers?

It’s not lost on me, the irony that the first new BIM tool on the market in 20 years, BricsCAD, started off life as an AutoCAD clone. I wonder if any software house is brave enough to throw everything away and start from scratch, looking at the future needs, based on the inputs and outputs? But with Revit so entrenched, and multi-disciplinary workflows relying on its ability to hold all this data, will the benefits of digital fabrication drive a fundamental change in process?

Conclusion

Looking at the technology landscape and the commercial pressures to drive efficiencies, compress timelines, reduce cost and reduce carbon, the combination of digital fabrication with off-site factories seems inevitable. We are already seeing significant investment in the residential sector in the UK. In America and Japan this is being combined with an adoption of mass timber. This will scale to office blocks and high rises as processes are refined.

Changes in materials, fabrication and automation will mean fundamental changes to design workflows and ultimately key deliverables. While in automotive and aerospace 2D is still commonly used, the model definition is the key driver throughout its lifecycle. AEC has much to learn from lean manufacturing methodologies.

We should all also be aware that there are giants of the tech world looking to disrupt this space. Ikea, Google and Amazon have their eyes focussed on designing, fabricating and delivering manufactured buildings. Even Elon Musk is trying to redefine construction, and looking to automate the creation of his Giga factories. Firms like Katerra have already started the process in the States.

It is time to re-evaluate our tools, our processes and deliverables to encompass a different future, where many buildings are designed for automated manufacture and assembly and an integrated approach to disciplines becomes essential. However, the barriers are not just technical. People and culture are often mentioned when talking about the challenges of changing the AEC industry’s workflows and practices for the better. There are feedback mechanisms from all aspects of the industry, which also make it difficult to move forward.

For me, one of the biggest barriers to the AEC industry progressing is its tool makers, the software developers, who approach solving every new problem or customer requirement with the same old software they have been ‘evolving’ for years to replace 2D CAD. If BIM won’t get us to manufacturing, it could be seen as a convoluted route to just creating 2D DWGs. In many respects, the core concept of BIM has failed, with multiple BIM models being created to suit the needs of each part of the design and build process; a construction BIM model is different to an architectural one.

At some point soon there will be a dramatic change in workflow or output, that software developed decades ago can no longer address just by adding some new features. Catering to legacy systems impinges on innovation. Sometimes it takes new eyes to analyse the needs and trajectory of the market. With a focus on factory-based manufacture, they would likely come up with a very different solution to BIM as we know it.

After a summer of talking to some of the biggest AEC firms it’s clear to me that some of the leading BIM tools have failed as concept design tools, but succeeded as design checking and documentation loops – although there are still some challenges surrounding large models, complicated geometry and the fact they don’t naturally benefit automated off-site construction.

While AEC firms will carry on refining their existing BIM processes and participating in the status quo, there is a common understanding in the top 20% of firms, at least, that things are changing rapidly and that new design tools are needed to define directly manufacturable definitions.

This fact is not lost on the major software companies; Autodesk, for example, is scrambling to develop a new system, but it seems to have been caught out by the industry’s rapid shift in focus towards digital fabrication, and existing software will not easily evolve to bridge the chasm.

The net result is we are seeing CAD systems that were designed for automotive and aerospace being trialled within some architectural practices and construction firms, trying to bridge the gap, connecting designers with the factory through 1:1 accurate assembly models, BOMs and G-code.

CAD software companies rarely look forward to generational changes of the core products. It’s a time when they might get it wrong and open themselves up to the competition as effectively the playing field gets levelled. The big play of the last five years has been the race to get existing apps and data to the cloud; digital fabrication was not on the radar. And here, I fear, instead of taking a fresh look and starting from scratch, developers will hamstring themselves by working within self-imposed constraints of catering to their legacy system and users, when there are already manufacturing-centric modelling systems like 3DX Catia to bridge the chasm.

We are at the start of a generational change and design IT directors of leading firms have started to rethink their technology stacks, processes, costs and deliverables, and are much more likely to evaluate alternative and new technologies and with that the market is opening up.
