Nvidia’s new Quadro GPU is a phenomenal proposition for real time 3D graphics and VR. With the potential it has for GPU rendering, it would be such a waste not to harness its power for ray tracing as well.

Ever since Nvidia unveiled its Quadro RTX ray tracing GPUs last year, the design viz community has been hotly anticipating their arrival. And that’s hardly surprising. The Unreal Engine demos, which featured shiny stormtroopers and a Porsche 911 Speedster concept car, were quite breath-taking.

Producing a single ray traced, photoreal image used to take seconds or even minutes, but this was now being done in a fraction of a second on a desktop workstation, albeit one with two very powerful GPUs. Nvidia had shown it could make photorealistic visualisation completely interactive and, while there was almost certainly some smoke and mirrors, it was a massive advancement.

Fast forward six months and design viz artists can now start to see what all the fuss was about… well, kind of. Quadro RTX might finally be shipping but as it is a completely new type of GPU technology, it also needs special software to take full advantage of its ray trace rendering capabilities. And these applications simply aren’t commercially available yet.

But before we get ahead of ourselves, it’s worth taking a step back to look at what makes Quadro RTX different to all GPUs that have come before.

The ray tracing GPU

Quadro RTX is based on Nvidia’s new Turing architecture, which has been designed from the ground up for ray tracing and deep learning, a subset of Artificial Intelligence (AI). Previous generation Quadro GPUs, based on the Pascal (Quadro P2000, P4000 etc.) and Maxwell (Quadro M2000, M4000 etc.) architectures, featured thousands of general purpose Nvidia CUDA cores, which could be used for 3D graphics or other parallel processing tasks such as ray trace rendering or simulation.

Quadro RTX still has thousands of CUDA cores, so it can do all the things that Pascal and Maxwell could do (albeit faster) but it also features two additional sets of cores — RT Cores, which are optimised for ray tracing, and Tensor Cores, which are optimised for deep learning.

In order for software to take advantage of these optimised cores, it has to be specifically written to do so. And while there is widespread commitment from the industry, including Chaos Group (V-Ray), Solidworks (Visualize) and Luxion (KeyShot) to name but a few, it will take time for commercial software to be released.

So where does this leave us? At the moment, anyone investing in a Quadro RTX GPU can only use it in the same way they have used previous Quadro GPUs – just using its CUDA cores.

But the good news is, because the new Quadro RTX GPUs are a significant improvement over previous generations, this will be reason enough for many. Then, when Quadro RTX-enabled software finally starts to ship, design viz folks will be able to generate photorealistic output even faster.

The Quadro RTX family

Nvidia has launched four Quadro RTX GPUs, from the mid-range to the high-end. We imagine Nvidia will launch lower-end Quadro RTX GPUs later this year but we don’t know this for sure.

The Quadro RTX 4000 (8GB GDDR6), RTX 5000 (16GB GDDR6) and RTX 6000 (24GB GDDR6) are essentially replacements for the Pascal-based Quadro P4000 (8GB GDDR5), P5000 (16GB GDDR5X) and P6000 (24GB GDDR5X). There’s also a new ultra high-end model, the Quadro RTX 8000 (48GB GDDR6), which is essentially the RTX 6000 with double the memory. It’s designed specifically to overcome the challenges of using the GPU for really high-end rendering where the datasets can be incredibly complex.

Users can also double the addressable memory by using two GPUs in the same workstation. This is done through NVLink, a proprietary Nvidia technology that is supported on the RTX 5000 and above. Those who can afford two Quadro RTX 8000s, for example, can effectively have a GPU with a colossal 96GB. With this amount of memory Nvidia really can start to compete with the massively scalable CPU architecture.

As you can imagine, the higher up the range you go, the more cores you get (CUDA, tensor & RT). All GPUs are rated by FP32 performance, a measure of their single precision compute capabilities (TFLOPs) based on their CUDA cores alone. This figure can be compared directly to previous generation Quadro products.
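The FP32 figure is a theoretical peak rather than a measured score. A common way to derive it, assuming each CUDA core completes one fused multiply-add (counted as two floating point operations) per clock, is cores × 2 × boost clock. The sketch below plugs in the RTX 4000's commonly quoted 2,304 CUDA cores and roughly 1.545GHz boost clock; treat both inputs as assumptions and check Nvidia's spec sheet for exact values.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical single precision peak, assuming one FMA (2 FLOPs) per core per clock."""
    flops_per_clock = 2  # a fused multiply-add counts as two operations
    # cores x FLOPs/clock x GHz gives GFLOPs; divide by 1,000 for TFLOPs
    return cuda_cores * flops_per_clock * boost_clock_ghz / 1000.0


# Assumed inputs for the Quadro RTX 4000 (verify against Nvidia's spec sheet)
print(f"{peak_fp32_tflops(2304, 1.545):.1f} TFLOPs")  # prints "7.1 TFLOPs"
```

Because the figure ignores RT and Tensor Cores entirely, it says nothing about how much faster RTX-enabled software will be, which is why Nvidia added the metrics below.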

Nvidia has also introduced two new metrics to help users differentiate between its new GPUs. The first is RTX-OPS, which takes into account general processing power as well as the GPU’s ray tracing and deep learning capabilities. The second is Rays Cast, a specific ray tracing metric measured in Giga Rays/Sec.

It’s important to note that the Quadro RTX GPUs are more power hungry than the Pascal Quadros they replace. The max power consumption for the RTX 4000, for example is 160W, compared to the 105W of the Quadro P4000. The top-end RTX 8000 can go all the way up to 295W.

DisplayPort 1.4 is standard across all four Quadro RTX GPUs. Also included is VirtualLink, a new open standard that can deliver power, display and data for next generation VR headsets through a single USB Type-C connector.

Quadro RTX 4000

The Quadro RTX 4000 is probably the best fit for AEC Magazine’s key audience of engineers and architects, and is the focus for this review. It costs under £1,000 but offers incredible performance for the price.

It’s a single slot card, so it will be available in a wide range of single CPU and dual CPU workstations. If you’re thinking of upgrading your current machine, you’ll need to make sure your PSU can handle its 160W power requirement. You’ll also need an 8-pin power connector.

On test

To test the Quadro RTX 4000 we compared it to the Quadro M4000, P4000 and P5000, as well as the AMD Radeon Pro WX 8200, which has a similar price point.

Our test machine is a typical mid-range workstation with the following specifications.

• Intel Xeon W-2125 (4.0GHz, 4.5GHz Turbo) (4 Cores) CPU

• 16GB 2666MHz DDR4 ECC memory

• 512GB M.2 NVMe SSD

• Windows 10 Pro for Workstations

For Nvidia GPUs we used the 416.78 driver, so it could be compared directly to existing results. For the AMD Radeon Pro WX 8200 we used the 18.Q4 driver.

As touched on earlier, there are no commercially available applications that fully support Nvidia RTX, so for this review we tested with a range of professional applications for 3D CAD, real time visualisation, VR and ray trace rendering. Wherever possible we used real world design and engineering datasets.

Interactive 3D

For real time visualisation, frame rates were recorded with FRAPS using a 3DConnexion SpaceMouse to ensure the model moved in a consistent way every time. We tested at both FHD (1,920 x 1,080) and 4K (3,840 x 2,160) resolution.

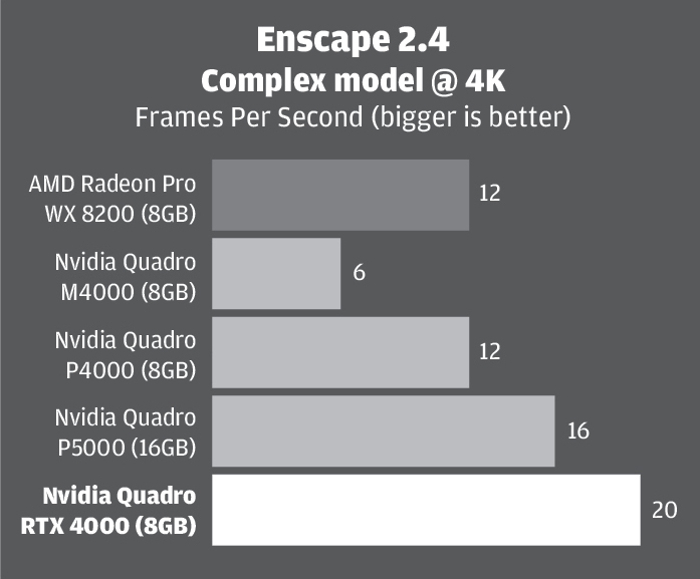

Enscape is a real-time viz and VR tool for architects that uses OpenGL 4.2. It delivers very high-quality graphics in the viewport and uses elements of ray-tracing for real time global illumination.

Enscape provided two real world datasets for our tests – a large residential building and a colossal commercial development. The GPU memory requirements for these models are quite substantial. The residential building uses 2.8GB @ FHD and 4.5GB @ 4K, while the commercial development uses 5.5GB @ FHD and 6.9GB @ 4K. This was fine for our tests, as all five GPUs feature 8GB or more.

The Quadro RTX 4000 beat all of the other GPUs by quite some way. The biggest lead came at 4K resolution when it delivered almost double the frame rates of the Quadro P4000 and the AMD Radeon Pro WX 8200. It was also significantly faster than the Quadro P5000.

At this point it’s important to note the relevance of Frames Per Second (FPS). Generally speaking, for interactive design visualisation you want more than 24 FPS for a fluid experience. While all the GPUs achieved this at FHD resolution, only the RTX 4000 came close at 4K.

The 20 FPS it delivered was impressive but, at times, the model stuttered, particularly when transitioning from the interior to the exterior of the commercial development. However, as with most applications, you can dial down the visual quality in Enscape to increase performance. For example, when set to draft, which still gives very good visual results, we achieved 36 FPS and everything was silky smooth.
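Frame rates are easier to reason about as a time budget per frame: at 24 FPS the GPU has about 41.7 milliseconds to draw each frame. The short sketch below, with helper names of our own, converts the Enscape 4K results above into frame times and checks them against that 24 FPS target.

```python
FLUID_FPS = 24  # rough minimum for fluid interactive design viz


def frame_time_ms(fps: float) -> float:
    """Time budget per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def is_fluid(fps: float, target: float = FLUID_FPS) -> bool:
    """True if the measured frame rate meets the fluidity target."""
    return fps >= target


# Enscape at 4K on the Quadro RTX 4000: our test setting vs 'draft'
for label, fps in [("test setting", 20), ("draft", 36)]:
    print(f"{label}: {fps} FPS -> {frame_time_ms(fps):.1f} ms/frame, fluid={is_fluid(fps)}")
```

This prints 50.0 ms per frame (not fluid) at 20 FPS and 27.8 ms per frame (fluid) at 36 FPS, which matches what we saw: dropping to draft brought each frame comfortably inside the 41.7 ms budget.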

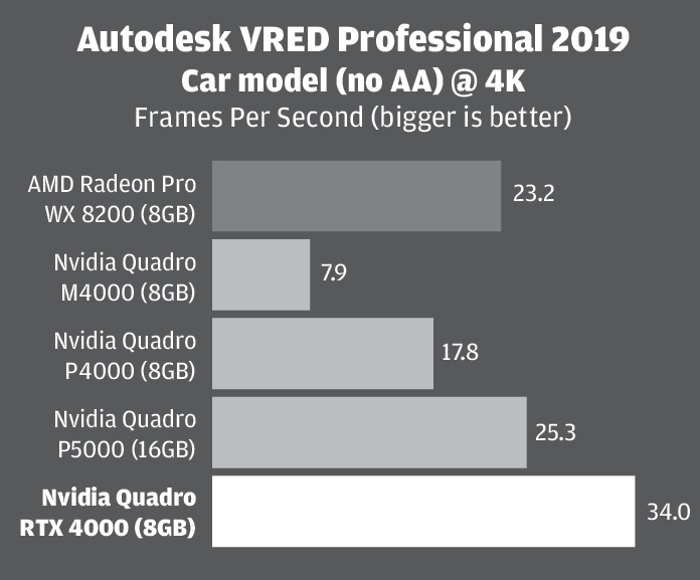

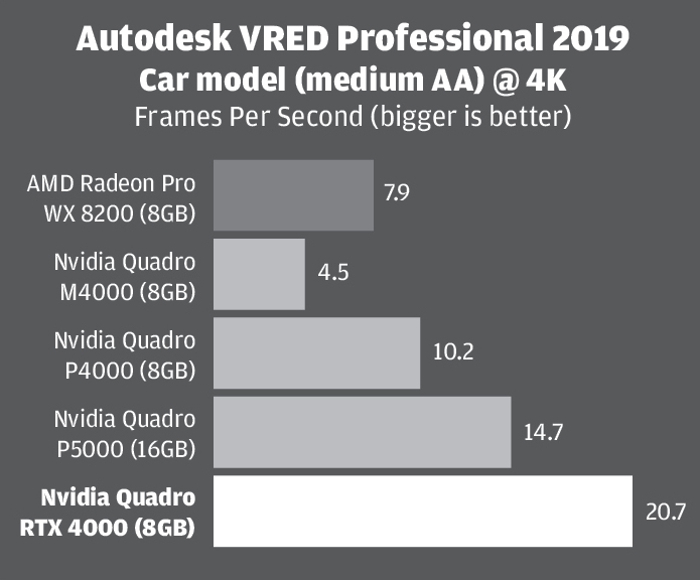

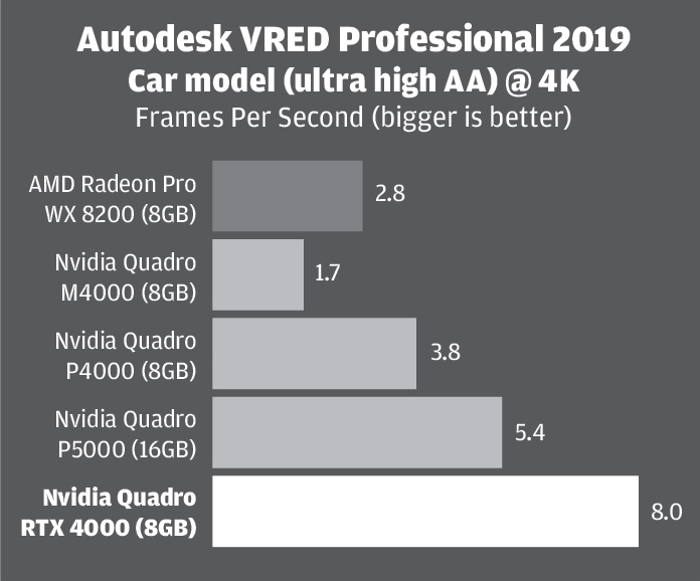

Autodesk VRED Professional 2019 is an automotive-focused 3D visualisation, virtual prototyping and VR tool. It uses OpenGL 4.3 and delivers very high-quality visuals in the viewport. It offers several levels of real time anti-aliasing (AA), which is important for automotive styling, as it smooths the edges of body panels, but AA calculations use a lot of GPU resources, both in terms of cores and memory. We tested our automotive model with AA set to ‘off’, ‘medium’ and ‘ultra-high’.

The Quadro RTX 4000 was significantly faster than all of the other GPUs, but its advantage over the AMD Radeon Pro WX 8200 became even greater when real time AA was enabled.

At 4K, with AA set to medium, the RTX 4000 was the only GPU to give what we would describe as a fluid experience, only a touch below the ideal minimum of 24 FPS. However, when AA was set to ultra-high, even the RTX 4000 struggled, and the model was quite choppy in the viewport. In these types of automotive styling workflows, where visual quality is of paramount importance, you’d traditionally need to look at a multi-GPU solution, although we wonder whether the Quadro RTX 6000 could deliver this on its own.

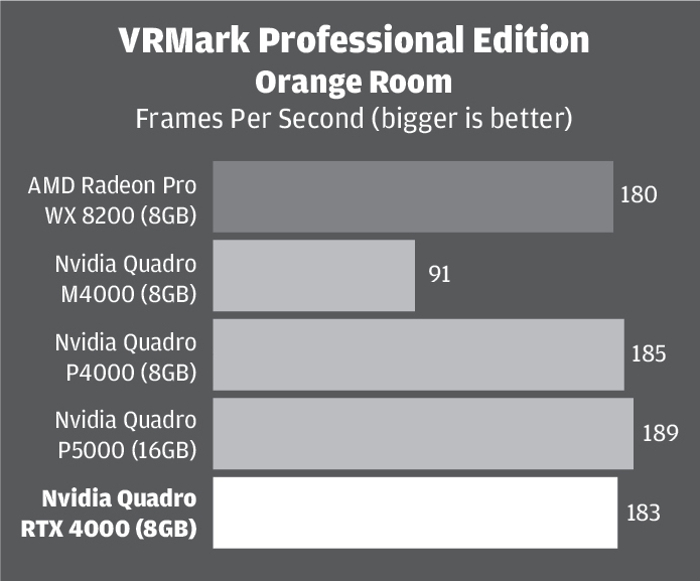

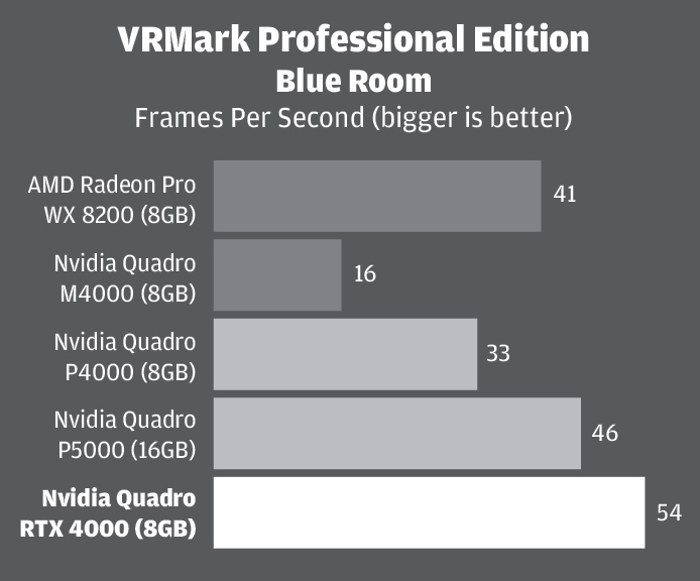

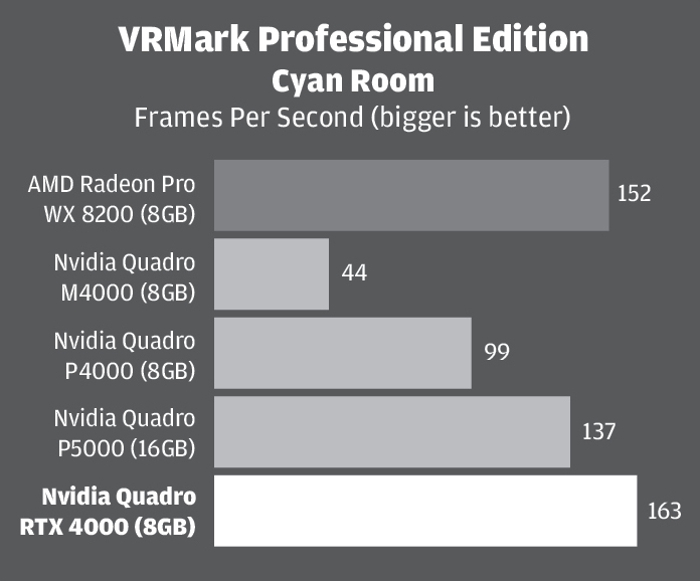

We also tested with VRMark, a dedicated Virtual Reality benchmark that uses both DirectX 11 and DirectX 12. It’s biased towards 3D games, so not perfect for our needs, but should give a good indication of the performance one might expect in ‘game engine’ design viz tools, such as Unity and Unreal, which are increasingly being used alongside 3D design tools.

In the DX 11-based Orange Room test the RTX 4000 was actually slower than the other GPUs, but we wouldn’t place too much importance on these results as the scores were way above what one generally needs for VR. In the more demanding Blue Room test, which is designed for next generation VR headsets, it had a small but significant lead.

It even managed to beat the AMD Radeon Pro WX 8200 in the DX 12-based Cyan Room, a test that AMD usually does well in because AMD’s Vega architecture is designed to perform well with low-level APIs like DirectX 12 and Vulkan.

We didn’t test the RTX 4000 in VR itself but from what we have observed previously with the P4000 and P5000, and taking into account its comparative performance in VRMark, we would imagine it will be a very good card for pro VR.

The Quadro RTX 4000 is complete overkill for most 3D CAD applications including Solidworks, which tend to be CPU limited and often work just as well with an entry-level or mid-range GPU like the Quadro P1000 or P2000.

However, CAD is one of the main reasons one would choose a professional GPU over a consumer GPU as they are certified and optimised for a range of CAD tools. This means there can be stability and performance benefits and access to pro features such as RealView in Solidworks and OIT (Order Independent Transparency) in Solidworks and Creo, which increase performance and visual quality of transparent objects in the viewport.

In testing with Solidworks 2019 SP1 we went straight for our most demanding dataset, the MaunaKea Spectroscopic Explorer telescope, an assembly with 8,000+ components and 59 million triangles. Using the standard Solidworks graphics engine, performance was quite poor – between 3 and 4 FPS. But this has nothing to do with the capabilities of the RTX 4000. You’ll get a similar experience with any half decent pro graphics card and will need to rely on ‘Level Of Detail’ optimisations to increase frame rates and stop the viewport being choppy.

However, with the new OpenGL 4.5 beta graphics engine, which should make its way into Solidworks proper by the 2020 release, the Quadro RTX 4000 delivered a phenomenal 58 FPS at 4K resolution with RealView, shadows and Ambient Occlusion enabled.

In short, the RTX 4000 should be more than adequate for any CAD or BIM application. If that application is CPU limited, then you almost certainly won’t find any GPU that will give you better performance. On the other hand, if it isn’t CPU limited, then it should be able to handle anything you throw at it.

GPU rendering

GPU rendering is nothing new; we’ve been writing about it in this magazine for ten years now. But 2019 could be the year that it really comes of age. While most of the renderers built into CAD applications still rely on the CPU, there are a growing number of impressive GPU renderers. These include Solidworks Visualize, V-Ray NEXT GPU, Siemens Lightworks, Lumiscaphe, Catia Live Rendering or any renderer that uses Nvidia Iray. Even Luxion KeyShot, a die hard CPU renderer and one that is particularly popular with product designers, was recently demonstrated running on Nvidia GPUs.

Solidworks Visualize

For our tests we focused predominantly on Solidworks Visualize. The name of this GPU-accelerated physically-based renderer is a bit misleading as it works with many more applications than the CAD application of the same name. It can import Creo, Solid Edge, Catia and Inventor, as well as several neutral formats.

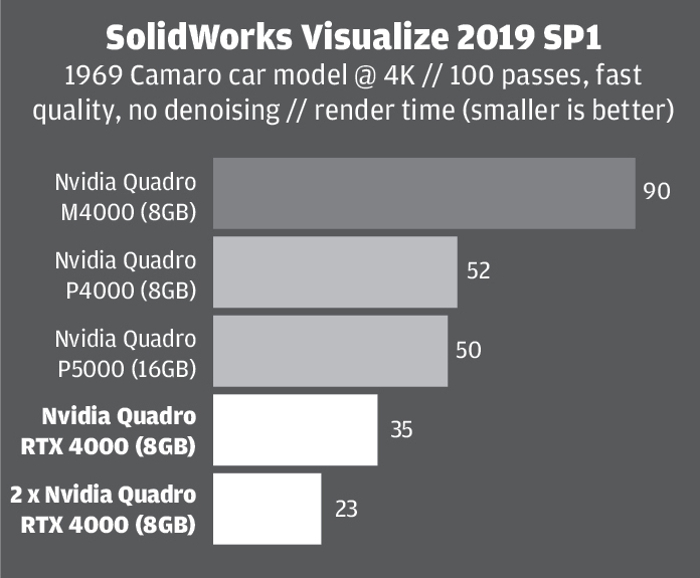

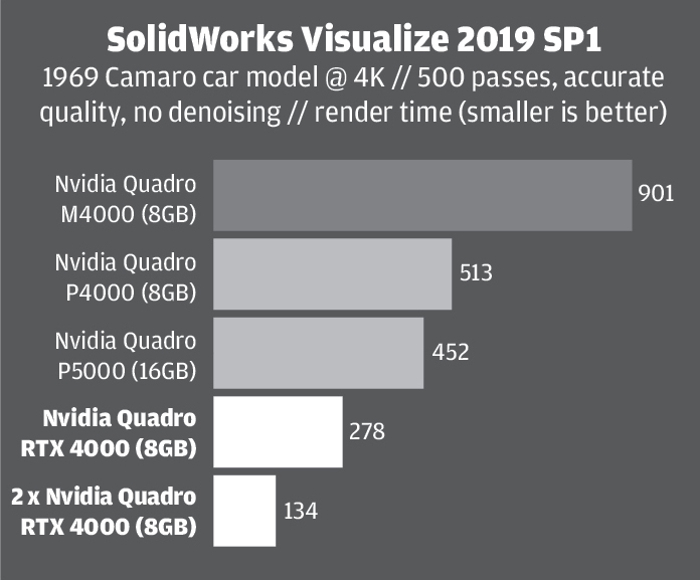

At Solidworks World this month, there was a demo of a Tech Preview of Solidworks Visualize 2020 which will have full support for Nvidia RT Cores to improve rendering performance when using Nvidia RTX GPUs. But this is not yet publicly available, so for our tests we used SolidWorks Visualize 2019 SP1 instead. This version doesn’t support Nvidia RT cores and can only utilise the GPU’s Nvidia CUDA cores.

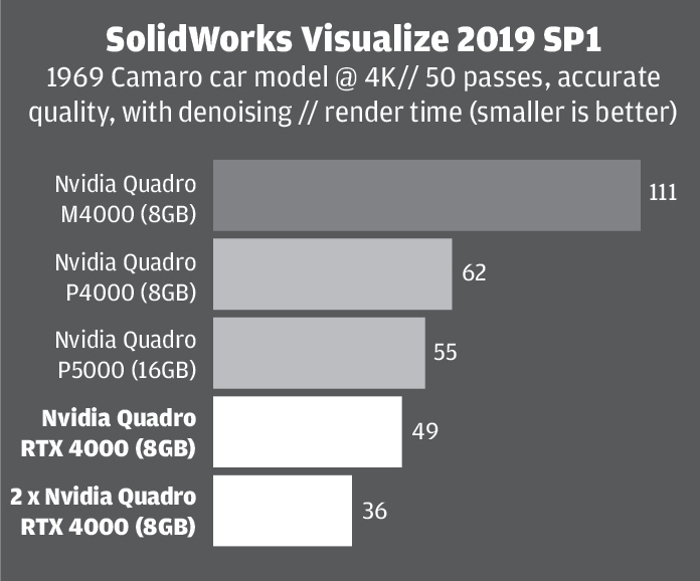

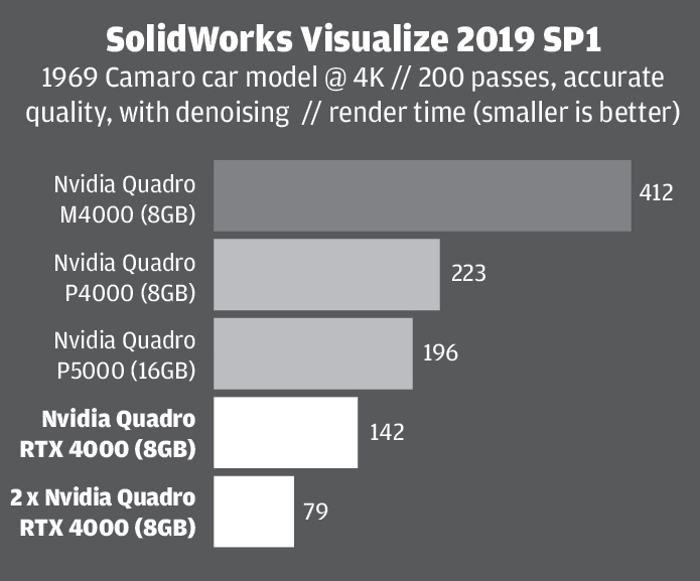

Even though the Quadro RTX 4000 was not being used to its full potential, it was still incredibly fast at rendering. We started with the stock 1969 Camaro car model at 4K resolution with two different quality settings. First, at fast quality with 100 passes, enough to get a good test render, then at accurate quality with 500 passes, which delivered significantly better results.

The RTX 4000 delivered its 100 pass render in a mere 35 seconds. But it took 500 passes to really see its true potential over the previous generation GPUs. It completed that job in just over half the time it took the Quadro P4000 and just under a third of the time it took the Quadro M4000. Adding a second Quadro RTX 4000 to the same workstation also cut the render time in half. We didn’t test the AMD Radeon Pro WX 8200 as it does not currently work with Solidworks Visualize. However, this could change in the future, as AMD recently demonstrated a technology preview of Solidworks Visualize accelerated on Radeon Pro GPUs.

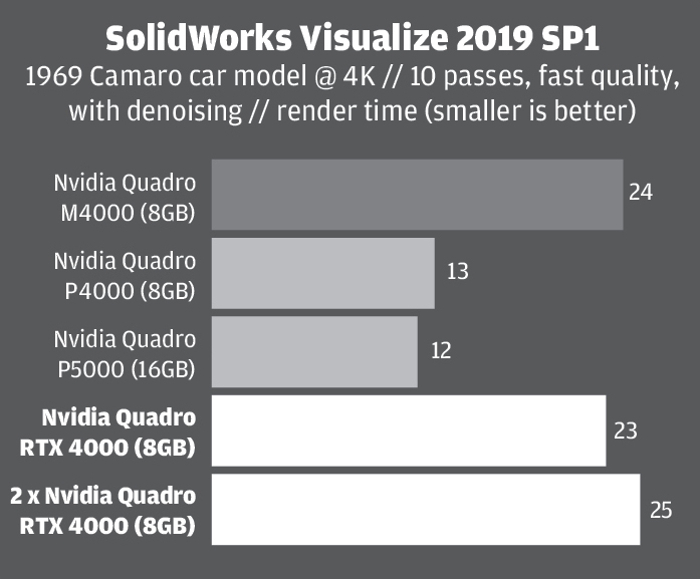

Solidworks Visualize 2019 may not be able to harness the full capabilities of the RTX 4000 GPU quite yet, but it does have some tricks up its sleeve. The GPU can be used for Artificial Intelligence (AI)-denoising to significantly reduce the time it takes to produce rendering output.

AI denoising works by rendering a scene with fewer passes (fewer light paths traced per pixel), resulting in a grainy image, then using deep learning to filter out the noise. Having learnt from thousands of image pairs (one noisy, one fully rendered), it essentially predicts what the final image would have looked like if it had been left to render for longer.

DS Solidworks reckons that if a scene routinely needs 500 passes without the denoiser, then you may be able to achieve the same rendering quality with 50 passes if you have the denoiser enabled.
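Why do fewer passes give a grainier image? Each pass adds another random light sample to every pixel, and for this kind of Monte Carlo estimate the noise (standard deviation) falls roughly in proportion to 1/√passes. The toy simulation below is a sketch under a big simplifying assumption: each ‘pass’ contributes a uniform random value rather than a real path-traced sample. It shows that a 50-pass image is roughly √10, or about 3.2 times, noisier than a 500-pass one, which is the gap the denoiser has to close.

```python
import random
import statistics


def pixel_estimate(passes: int, rng: random.Random) -> float:
    """Average `passes` random samples, as a progressive renderer averages passes.

    Toy assumption: each sample is uniform on [0, 1), so the true pixel value is 0.5.
    """
    return sum(rng.random() for _ in range(passes)) / passes


def pixel_noise(passes: int, pixels: int = 2000, seed: int = 1) -> float:
    """Standard deviation of the pixel estimate across many independent pixels."""
    rng = random.Random(seed)
    estimates = [pixel_estimate(passes, rng) for _ in range(pixels)]
    return statistics.pstdev(estimates)


noise_50 = pixel_noise(50)
noise_500 = pixel_noise(500)
print(f"50 passes: {noise_50:.4f}, 500 passes: {noise_500:.4f}, ratio: {noise_50 / noise_500:.2f}")
```

Cutting passes by ten only raises noise by about 3.2 times rather than ten, which helps explain why a trained denoiser can plausibly recover a clean image from a tenth of the passes.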

To see how this impacted performance we turned on the denoiser then rendered the same scene with 10 passes at fast quality and 50 & 200 at accurate quality. This threw up some interesting results. When the scene was rendered with 10 passes, the RTX 4000 took nearly twice as long as the P4000 (23 secs compared to 12 secs).

With 50 passes it was faster than the older GPUs and that lead got bigger with 200 passes, but we never found it delivered the same percentage performance advantage over the other GPUs as it did when denoising was off.

We put this to Nvidia and are still awaiting a suitable answer, but it would appear that the denoising calculations in Solidworks Visualize 2019 simply take longer on Nvidia’s Turing architecture than they do on Maxwell and Pascal. How this would impact your productivity will depend entirely on how you set up your renders in terms of passes and resolution, but it’s an important consideration as it could mean the RTX 4000 doesn’t give you as big a performance increase as you thought it might. Of course, this is a moot point if you don’t intend to use AI denoising. What’s more, everything may change in Solidworks Visualize 2020 anyway, when we imagine the software will be able to harness the Quadro RTX’s Tensor Cores for deep learning.
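One way to see why the RTX 4000’s lead shrinks at low pass counts is to treat the denoiser as a roughly fixed cost per render on top of the per-pass work. The sketch below is a back-of-envelope model, not a description of how Visualize actually schedules denoising. It assumes render time scales linearly with passes, takes the 0.35 seconds per pass implied by the RTX 4000’s 35-second, 100-pass fast quality render, and attributes whatever is left of the 23-second, 10-pass denoised render to the denoiser. The function names are our own.

```python
# Assumed model (not Visualize's documented behaviour):
#   total_time = passes * seconds_per_pass + denoise_overhead


def implied_overhead(total_s: float, passes: int, per_pass_s: float) -> float:
    """Time left over once the path tracing passes are accounted for."""
    return total_s - passes * per_pass_s


def overhead_share(passes: int, per_pass_s: float, overhead_s: float) -> float:
    """Fraction of the total render time spent on the fixed overhead."""
    total = passes * per_pass_s + overhead_s
    return overhead_s / total


# RTX 4000, fast quality: 100 passes in 35 s without the denoiser
per_pass = 35 / 100                              # ~0.35 s per pass
overhead = implied_overhead(23, 10, per_pass)    # 10 passes + denoiser took 23 s

print(f"Implied denoiser cost: {overhead:.1f} s")
print(f"Share of a 10-pass render: {overhead_share(10, per_pass, overhead):.0%}")
```

Under these assumptions roughly 19.5 of the 23 seconds (about 85%) goes on denoising, so a GPU that denoises slowly loses out at 10 passes even if it traces rays much faster. As pass counts rise, the fixed cost becomes a smaller share, consistent with the RTX 4000 pulling ahead again at 50 and 200 passes.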

Visualize with RTX

Solidworks Visualize 2020 should offer full support for Nvidia RT Cores, but what kind of rendering performance could we expect from the RTX 4000 once it is enabled? It’s certainly not going to be real time. In fact, we don’t even imagine a Quadro RTX 6000 will be able to deliver anywhere near that promise for typical viz workflows. But it should be notably quicker.

Nvidia has published some preliminary benchmark figures, comparing the results from a Quadro RTX 4000 (with RTX acceleration in SolidWorks Visualize 2020) and a Quadro M4000 (in SolidWorks Visualize 2019). What it hasn’t given is a direct comparison between the Quadro RTX 4000 in Visualize 2019 and Visualize 2020.

To get an idea of what this might be, we first replicated Nvidia’s render test on our own machine, obtained very similar render times, then adjusted the figures slightly.

It’s a little rough, of course, and important to note that Nvidia’s testing was done on a tech preview so performance is likely to change by the time the software ships, but we reckon enabling the RT cores in SolidWorks Visualize 2020 should cut render times by about 35%. And that performance increase will be exclusive to the RTX GPUs. You won’t get that with Pascal or Maxwell.
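A 35% cut in render time is not the same as a 35% increase in speed. If the time falls to 65% of the original, throughput rises by 1/0.65, or about 1.54 times. The helper names below are our own, and applying the cut to the earlier 35-second Camaro render is purely illustrative, since our estimate was derived from Nvidia’s test scene.

```python
def speedup_from_time_cut(cut: float) -> float:
    """Throughput multiplier implied by cutting render time by `cut` (0.35 = 35%)."""
    return 1.0 / (1.0 - cut)


def time_after_cut(baseline_s: float, cut: float) -> float:
    """Render time once a fractional time cut is applied."""
    return baseline_s * (1.0 - cut)


estimated_cut = 0.35  # our rough estimate for RT Cores in Visualize 2020
print(f"Speed-up: {speedup_from_time_cut(estimated_cut):.2f}x")  # 1.54x
# Illustrative only: the same cut applied to the 35 s, 100-pass render
print(f"35 s -> {time_after_cut(35, estimated_cut):.2f} s")       # 22.75 s
```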

GPU memory

For real time visualisation, the Quadro RTX 4000’s 8GB is probably plenty for most mainstream workflows. However, when it comes to GPU rendering, it can be restrictive. Memory requirements increase with the size of the model, the HDR images and the textures, as well as with output resolution. In SolidWorks Visualize, if you run out of GPU memory it simply won’t run on the GPU and all the calculations will fall back on the CPU, which is significantly slower even with a big multi-core CPU. To test what we could and could not do with the RTX 4000 we swapped to a workstation with more system memory, the Scan 3XS WI4000 Viz, which we review in full separately. Its full spec is listed at the end of this article.

The RTX 4000 was able to handle the 1 million polygon 1969 Camaro car model with ease, maxing out at 5.1GB when rendering at 10k resolution. We then imported the MaunaKea Spectroscopic Explorer telescope assembly, hit render, and it immediately fell back to CPU. In fact, this model is so big — it has 53 million polygons — that we couldn’t even get it to render on the 16GB Quadro P5000.

There are no hard and fast rules here as to what the RTX 4000 will be able to handle in SolidWorks Visualize, but if you’re currently pushing the limits of other 8GB GPUs, such as the Quadro M4000 or P4000, then you’ll probably need to look at the 16GB Quadro RTX 5000 or even higher. Alternatively, get smarter about how you optimise your scene.
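The fit-or-fall-back behaviour described above can be sketched as a simple rule. Everything here is hypothetical: the class and function names are ours, not Visualize’s, and the sketch ignores memory used by the display and the OS. It also assumes, as described earlier, that a matched NVLink pair (RTX 5000 and above) pools its memory for one scene, whereas two unlinked cards each need their own copy of the scene.

```python
from dataclasses import dataclass

# Per the article, NVLink is supported on the Quadro RTX 5000 and above.
NVLINK_MODELS = {"Quadro RTX 5000", "Quadro RTX 6000", "Quadro RTX 8000"}


@dataclass
class Gpu:
    model: str
    memory_gb: float


def addressable_memory_gb(gpus: list[Gpu]) -> float:
    """Memory one scene can use: pooled across a matched NVLink pair, otherwise
    limited to a single card, because each GPU must hold its own copy of the scene."""
    pair = len(gpus) == 2 and gpus[0].model == gpus[1].model
    if pair and gpus[0].model in NVLINK_MODELS:
        return gpus[0].memory_gb + gpus[1].memory_gb
    return min(g.memory_gb for g in gpus)


def render_device(scene_gb: float, gpus: list[Gpu]) -> str:
    """Hypothetical fallback rule: render on the GPU only if the scene fits."""
    return "GPU" if scene_gb <= addressable_memory_gb(gpus) else "CPU"


rtx4000 = Gpu("Quadro RTX 4000", 8)
rtx8000 = Gpu("Quadro RTX 8000", 48)
print(render_device(5.1, [rtx4000]))              # Camaro at 10k: GPU
print(addressable_memory_gb([rtx8000, rtx8000]))  # 96 with NVLink
```

Note that adding a second, unlinked RTX 4000 speeds up rendering but does not raise the 8GB ceiling under this model, which is why a bigger card or a leaner scene is the usual answer.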

Multitasking

One of the big challenges of giving the GPU more responsibility, and the ability to perform graphics and compute tasks, is that different applications will then start to fight for finite resources. If you’re running a GPU render in the background, for example, but also need to spin a model in the CAD viewport, something has to give. Compared to AMD, Nvidia hasn’t historically fared so well in multi-tasking workflows like this, but has this changed with the RTX 4000?

To find out, we set a render going in SolidWorks Visualize, then loaded up the colossal MaunaKea Spectroscopic Explorer telescope assembly in SolidWorks 2019 using the new OpenGL 4.5 beta graphics engine. When panning, zooming and rotating, everything felt really responsive, which wasn’t always the case with the Quadro P4000. Viewport performance did drop – from 41 FPS to 25 FPS but this didn’t impact our experience in any way. We upped the ante with Autodesk VRED, which demands even more from the GPU, but again everything felt fine. With AA set to ‘off’ it dropped from 35 FPS to 22 FPS and with medium AA from 21 FPS down to 14 FPS.

It was only when we set AA to ultra-high that the RTX 4000 struggled, going down from 9 FPS to 3 FPS. GPU memory possibly had an influence here, as the two processes (rendering in SolidWorks Visualize and real time viz in Autodesk VRED) would together have pushed GPU memory usage to well over 8GB.

In short, it looks like the RTX 4000 should be able to handle multi-tasking workflows with relative ease.
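To put the multitasking numbers on a common footing, the short sketch below (helper name our own) converts each before and after frame rate from our tests into a percentage drop.

```python
def percent_drop(before: float, after: float) -> float:
    """Percentage fall in frame rate once a background render is running."""
    return 100.0 * (before - after) / before


# Viewport FPS without -> with a SolidWorks Visualize render in the background
measurements = [
    ("SolidWorks 2019 (OpenGL 4.5 beta)", 41, 25),
    ("VRED, AA off", 35, 22),
    ("VRED, AA medium", 21, 14),
    ("VRED, AA ultra-high", 9, 3),
]

for name, before, after in measurements:
    print(f"{name}: {before} -> {after} FPS ({percent_drop(before, after):.0f}% drop)")
```

The first three cases all lose roughly a third of their frame rate (39%, 37% and 33%), while ultra-high AA loses 67%, which fits our suspicion that GPU memory, rather than raw compute, was the limiting factor there.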

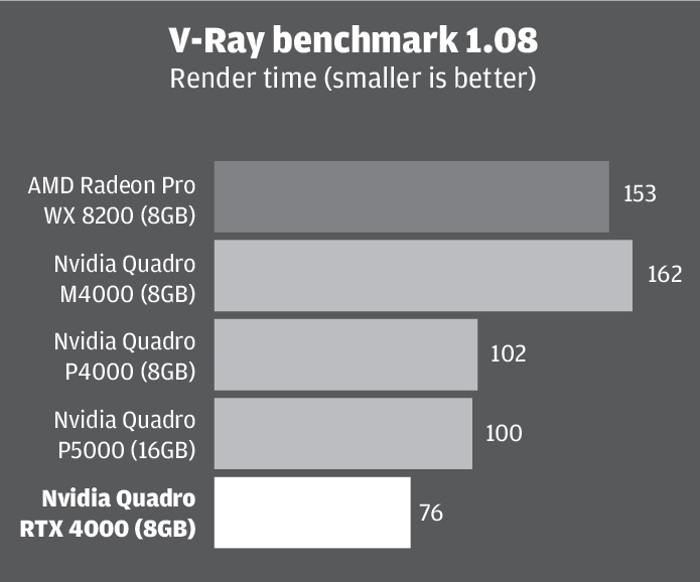

V-Ray / Radeon ProRender

We also tested the RTX 4000 with two other GPU rendering applications. In the V-Ray benchmark it was an impressive 25% faster than the P5000, although this is all about raw ray tracing performance as the benchmark doesn’t take advantage of the AI denoising capabilities of V-Ray NEXT.

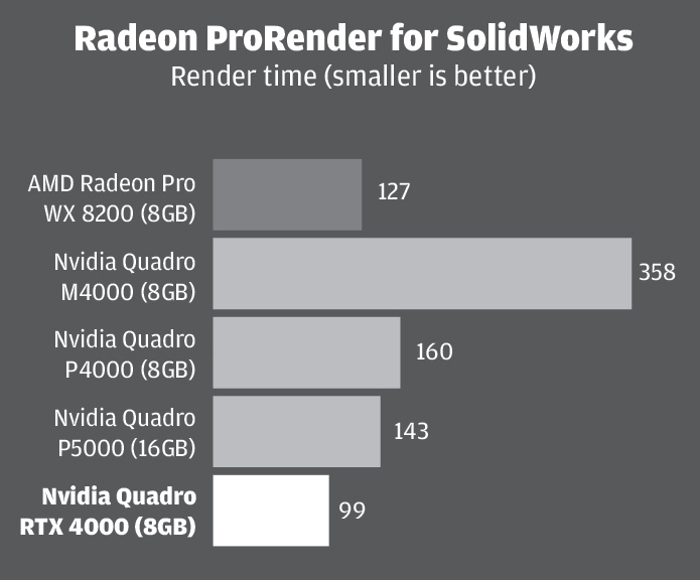

In Radeon ProRender for SolidWorks, an application whose benchmark scores are usually dominated by AMD, who develops the software, the RTX 4000 was about 20% faster than the AMD Radeon Pro WX 8200.

Conclusion

As it currently stands, the Quadro RTX 4000 should be considered a GPU that can do all the same things as its Quadro P4000 predecessor, but much faster. In fact, in almost all of our tests – pro viz, game engine viz, VR and GPU rendering, it also beat the Quadro P5000, which is a class above and still retails for well over £1,000.

Its lead in SolidWorks Visualize was quite outstanding, delivering rendering output nearly twice as fast as the Quadro P5000. And things only look set to get faster. If our estimate of a roughly 35% cut in render times holds once its RT cores are put to work in SolidWorks Visualize 2020, that will be a very significant boost for rendering workflows.

But the RTX 4000 isn’t flawless. It doesn’t maintain such a commanding lead when AI denoising is enabled, especially when rendering with few passes, and in some cases it’s even slower. Considering how much of the RTX 4000’s design is geared towards deep learning, we found this very surprising. As far as AI denoising is concerned we can only presume the GPU will come into its own when the Tensor cores are put to full use in RTX optimised software. It’s certainly too early to judge it on this.

Overall, Nvidia has done an excellent job with its next generation pro GPU. Even without the future promise of RTX acceleration, it’s hugely impressive for the price and firmly cements the role of the GPU as a multi-functional processor and not just a graphics card for interactive 3D. It looks like it will still take Nvidia some time to truly deliver on its real time ray tracing vision, but it’s certainly on the right path.

Price: £808

Test machine #2 // Scan 3XS WI4000 Viz

Specifications

» Intel Core i9 9900K (overclocked to 4.9GHz) (8 cores) CPU

» Nvidia Quadro RTX 4000 (8GB GDDR6 memory) GPU

» 32GB Corsair Vengeance LPX DDR4 3000MHz memory

» 500GB Samsung 970 Evo Plus NVMe M.2 PCIe SSD

» 2TB Seagate Barracuda Pro HDD

» Microsoft Windows 10 Professional 64-bit

» £1,962 + VAT
