In an exclusive interview, Martyn Day speaks to Autodesk chief software architect Jim Awe about the company’s vision of the next generation of BIM tools.

Revit turns 17 years old this year. Its heritage dates back still further, to an older system called Sonata. This makes it a senior citizen in the software world. On the plus side, it enjoys a significant pedigree. On the negative side, most software companies feel that most code has, at best, a ten-year lifespan.

So it’s no surprise that, for years, there have been hints that Autodesk was working on a successor to Revit, perhaps cloud-based, to match the company’s vision of software as a service and the web delivery of all its products.

With the arrival of Autodesk Fusion in 2012, the company took a fresh approach to product design for the manufacturing market – cloud-based with a new user interface, powerful new constraints-solving and, importantly, a platform-independent approach, in a sharp break from delivering only Windows-based applications. The aim was to replace Inventor, and more specifically, to take aim at the market-leading SolidWorks application owned by Dassault Systèmes, a company that was by then also hinting at a next-generation solution.

When software companies move to a new generation of applications, there are in general two ways to go. First, they can start afresh and not burden themselves with the constraints of supporting previous methodologies (see, for example, Autodesk Fusion). The benefit of this approach is that the vendor is liberated from older applications and can freely begin introducing cutting-edge tools and processes. Customers of earlier products, however, may not be so happy.

Second, they can maintain the front end and rework all aspects of the code in the background (as seen with Bentley MicroStation). This is like changing a tyre at 90 miles an hour and means replacing components as and when possible, while cloning operations and processes.

But when it comes to the next generation of BIM tools, it seems that there are new options open to software developers with the deployment of web infrastructure – a ‘third way’ to go in this challenge.

Looking at the development of Fusion, we initially wondered if Revit would get the same treatment as Fusion, albeit in very different market conditions. In manufacturing, where Fusion is targeted, Autodesk was the underdog. In AEC, Revit has been in full flow, with mass adoption across all of Autodesk’s core geographies.

Looking for answers, I spoke with Autodesk CEO Carl Bass back in 2015. At that time, he told me that work was underway to renew the Revit code and that it would probably be Fusion-like – but with all the work the company had done to componentise core software services (DWG, document management, rendering, point cloud and so on) in the cloud, this would take a lot less time than we might guess. In other words, much of the work had already been done.

Fast-forward to 2016

Then came further clues, during an Autodesk University 2016 keynote given by Autodesk’s senior vice president of products Amar Hanspal. On stage, he started to talk about an exciting project, admitting that the question of whether he should discuss the early-stage project on stage at all had been the topic of hot debate internally at the company.

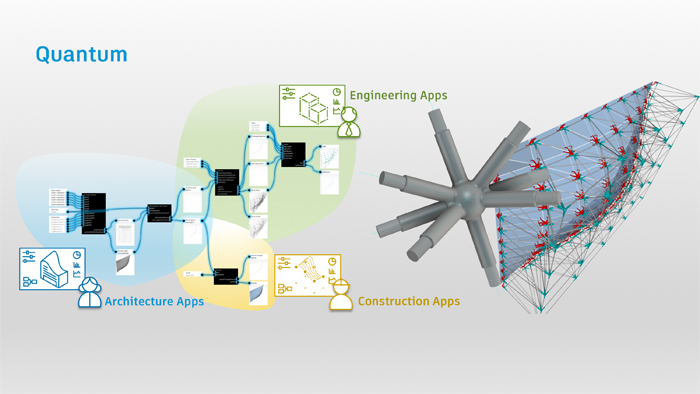

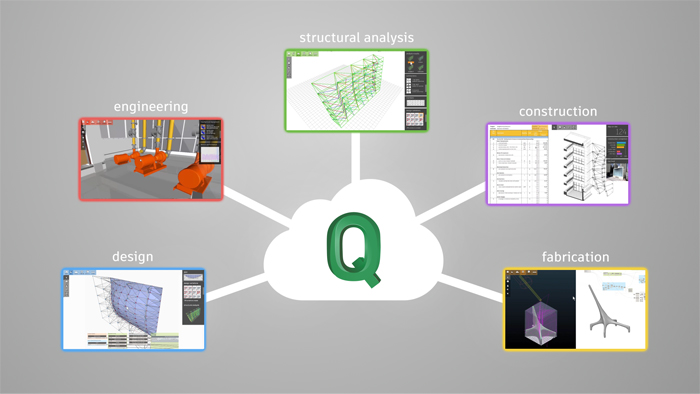

Its code name, he revealed, was Project Quantum. According to Hanspal, it was about “evolving the way BIM works, in the era of the cloud, by providing a common data environment.” Its aim, he continued, was to tackle issues that arise because AEC is a federated process, with data stored in many silos, often unconnected and often unavailable when needed.

Project Quantum, he said, would connect ‘workspaces’, by breaking down the monolithic nature of typical AEC solutions, enabling data and logic to be anywhere on the network and available, on demand, in the right application for the task at hand. These workspaces would be based on professional definitions, enabling architects, structural engineers, MEP professionals, fabricators and contractors access to the tools they need.

In this respect, Project Quantum represents a fundamental shift of mindset at Autodesk in developing products for AEC and was not what we were expecting to hear. On the face of it, it’s not about rewriting or regenerating Revit, but is a much broader vision that aims to tackle collaboration and workflow.

On stage at Autodesk University 2016, Hanspal presented, as an example, the modification of a curtain wall. He demonstrated an architect updating their model in a workspace, while showing how an engineer had a different workspace with more relevant tools for their role in the task and a fabricator had yet another workspace, containing design drawings of the curtain wall. In other words, three different professionals, with three different workspaces, containing different views and tools — but all relating to the same project.

In this way, Quantum isn’t really a design tool as such, but an enabling platform, a common data environment, almost a cloud-based Babel fish.

For those of you who, like me, were originally hoping for a long-overdue, next-generation replacement of Revit, capable of modelling larger models, faster, all this may come as something of a disappointment.

However, the Quantum approach is the result of a completely fresh look, not just at underlying point tools, but also at the process of joining up digital design-to-fabrication. Revit will benefit from this approach and it’s one we believe will enable rapid future development.

To get a bigger picture of the implications of Quantum, AEC Magazine talked with Jim Awe, chief software architect at Autodesk, and with Jim Lynch, vice president of the company’s building products group.

So what is Quantum?

Jim Awe, an Autodesk veteran, first set out to explain the methodology behind Quantum. “The simplest way to try and understand what we’re trying to accomplish is an analogy with Uber. We’re trying to have a data-centric approach to a process. Uber has its data displayed simultaneously in many different places: the customer has their mobile app to call the driver; the driver also has an app which displays their own view of the world; while Uber has a central system displaying a bird’s-eye view of its control and communications network. The beauty is that nobody explicitly sends data around the system,” he said.

Awe added that, by delivering specialised, targeted applications, instead of huge, monolithic programmes, Autodesk can avoid trying to serve too many people with applications overloaded with functionality. In the BIM world, collaborative workflows and sharing are still way too cumbersome.

“We want apps that offer the right level of knowledge for the task and can share that information seamlessly in the system,” he said. “Today, there’s a lot of manual effort and a lot of noise. There is a lot of oversharing of information that no one really needs or cares about!”

To get to this view of the process, Autodesk has chosen to ponder high-level strategies. Awe commented, “The key consideration is how to get data to flow smoothly in two directions throughout the ecosystem. In one direction, you have the continuum of design / make / use as you consider a system from concept to fabrication. In the other direction, you have all the major systems of the building that have to coordinate with each other (Structure, Facade, Site, MEP, etc.). We don’t need one giant database for all the data if we have interconnectedness between databases. If you look at Google Maps for instance, it presents the data as if it’s all in one place, but it isn’t, it’s from different services from all over the network.”

A holistic approach to managing projects is perhaps not new, as demonstrated by extranet products such as Primavera and many others. However, as Autodesk also creates the core authoring applications, the company feels it is well-placed to offer levels of integration and connectivity not seen before, if it can break free from its own product silos.

Awe explained, “We have this incredible portfolio of products that Autodesk has built or acquired over the years, and we haven’t been able to utilise all the IP as much as we would have liked, because functionality is isolated in apps which don’t talk to each other in a file-based, desktop world. The cloud changes that dramatically. Project Quantum is a heavy research effort to try and figure out how we can implement some of these new concepts, given the change in the technology landscape.”

The implications for Revit

While high-level strategising to solve some of the AEC industry’s horrific data jams sounds great in principle, Awe’s words still left me pondering what all this means for Revit, given its status as probably the biggest data monolith in the BIM process.

While Revit has always been developed as one application, for most of its history, three versions have been sold, for architecture, structural and MEP. The functions from these three disciplines have since been rolled into one product. Now, it seems as if the Quantum vision of the future might lead back, once again, to different tools and views for different disciplines.

So what did Awe have to say about Revit? “Revit is still a major player in the ecosystem. It’s just we have trouble getting Revit to cover the entire landscape. Consider site design: we are never going to put all the Infraworks features into Revit or vice versa. There is just a limit as to what one application can do within an ecosystem.

“Revit will still be a major player, although it will morph a little bit to work within that ecosystem. Revit may give up the capabilities of certain building elements to another app, but Revit will still be a major player in a Quantum world. This is not a replacement for Revit and it’s not Revit in a browser.”

One of the reasons Revit has suffered is down to the architecture of its database and its tendency to bloat. In my past conversations with Autodesk, a source has admitted that, since Revit was acquired and not developed in-house, this has been somewhat out of Autodesk’s control. Had the company had a choice, Revit’s architecture might have looked very different — and that’s just the case for desktops. When it comes to a cloud-based, next-generation world, it’s just not fit for purpose.

“That’s true,” Awe acknowledged. He then showed me a demo of one of Quantum’s core capabilities. In a single screen, there were four distinct application views displayed, one of which was Revit. Every time model data was added into Revit, it appeared instantly in the three other applications. So Quantum enables information-sharing with other environments in real time. This is not a case, by the way, of translating the Revit file and then propagating it. Instead, Revit constantly ‘transmits’ geometry and property set data, via Quantum, to other applications that are tuned in, without laborious file-based data exchange.
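Autodesk did not show how this ‘transmission’ is implemented, but the behaviour described resembles a publish/subscribe pattern. As a rough illustration only, the Python sketch below uses hypothetical names throughout (`Hub`, `ElementUpdate`, the `"model"` topic); none of them come from Autodesk. An authoring tool publishes each change once, and every application that has ‘tuned in’ updates its own store, with no file exported along the way.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ElementUpdate:
    """Hypothetical message: one element's geometry plus its property set."""
    element_id: str
    geometry: tuple                      # placeholder for real geometry
    properties: dict = field(default_factory=dict)


class Hub:
    """Hypothetical in-memory stand-in for a shared data service."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        # An application "tunes in" by registering a callback for a topic.
        self._subscribers[topic].append(handler)

    def publish(self, topic, update):
        # The authoring tool sends the change once; each listener gets it.
        for handler in self._subscribers[topic]:
            handler(update)


# Two listening applications, each keeping its own view of the data.
viewer_scene = {}     # a viewer only cares about geometry
schedule_rows = []    # a scheduling tool only cares about properties

hub = Hub()
hub.subscribe("model", lambda u: viewer_scene.update({u.element_id: u.geometry}))
hub.subscribe("model", lambda u: schedule_rows.append(
    (u.element_id, u.properties.get("material"))))

# The authoring tool adds a wall; both listeners update immediately.
hub.publish("model", ElementUpdate(
    "wall-1",
    ((0, 0, 0), (4, 0, 0), (4, 0, 3), (0, 0, 3)),
    {"material": "glass"},
))
```

Each listener keeps only the part of the update it cares about, which mirrors the idea of separate applications with separate databases watching the same stream of changes.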

“The opportunity here is for Revit to handle large chunks of the modelling still, but communicate in real-time with other applications, and users who may be watching or participating in the design process,” said Awe. “As we now have multiple applications coordinated, we could have for instance, FormIt which has a different database and a different way of working, but it can watch out for Revit’s property set data, which is part of Project Quantum.

“The beauty of the system is that we don’t have to take the data away and translate it into some other format. Export a file, move the file somewhere else: none of that is needed. It really does knock down the interoperability barriers of collaboration.”

Revit survives intact

In other words, with Quantum, Revit survives intact and evolves at the dawn of Autodesk’s next-generation BIM solution. However, there are indicators as to how Revit will morph in the future with Quantum capabilities.

One of Revit’s Achilles’ heels is the size to which models grow and the performance issues that this growth can trigger. In many ways, this is caused by customers building ever-larger and more detailed models — plus a fair bit of bad practice when it comes to failing to break models down. However, it’s also true that Revit requires the most RAM, fastest SSDs and processors of any of the BIM systems that AEC Magazine reviews.

Quantum is set to bring new life to Revit by taking the load off the local database. Awe explained that, by using mixed geometric representation, combining hybrid full-detail and dumb components, Autodesk has achieved pretty large reductions in local file sizes. Sending whole models anywhere, meanwhile, will no longer be required.

“If you’re in an application that requires a high level of detail — for instance, if the data is for fabricating the panels and components — what actually gets sent back to Revit is not at the same level of detail. Revit would receive a display mesh that’s the right size and looks about right, which can be displayed in context for the architect to see how it looks.

“If the architect did want to see the panel in all its manufactured glory, then they could double-click the panel and see the manufacturer’s information. If you try to model every single part in Revit to a fabrication level of detail, you will undoubtedly slow it down as it’s overwhelmed with data.”
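To make the idea concrete, here is a minimal Python sketch of a lightweight stand-in for a detailed component. It is an assumption-laden illustration, not Autodesk’s method: a simple bounding box takes the place of the ‘display mesh’ Awe describes, and the names `Part`, `Proxy`, `make_proxy`, `open_detail` and `detail_store` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Part:
    """One fabrication-level part, described by its bounding corners."""
    name: str
    min_corner: tuple   # (x, y, z) in metres
    max_corner: tuple


@dataclass
class Proxy:
    """Lightweight stand-in sent to the design tool."""
    source_id: str      # key for fetching full detail on demand
    min_corner: tuple
    max_corner: tuple


def make_proxy(source_id, parts):
    """Collapse many detailed parts into a single bounding box."""
    mins = tuple(min(p.min_corner[i] for p in parts) for i in range(3))
    maxs = tuple(max(p.max_corner[i] for p in parts) for i in range(3))
    return Proxy(source_id, mins, maxs)


# Full detail stays with the fabrication application (hypothetical data).
detail_store = {
    "panel-7": [
        Part("mullion", (0.0, 0.0, 0.0), (0.05, 0.1, 3.0)),
        Part("glazing", (0.05, 0.02, 0.0), (1.45, 0.06, 3.0)),
        Part("bracket", (1.45, 0.0, 2.8), (1.5, 0.15, 3.0)),
    ]
}

# The design tool receives only the proxy.
proxy = make_proxy("panel-7", detail_store["panel-7"])


def open_detail(p):
    """What a double-click might do: look up the full part list by reference."""
    return detail_store[p.source_id]
```

The design tool holds one small object per panel, however many parts the fabricator models, and the full detail is fetched only when someone asks for it.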

Taking the load off Revit will be a major boost for all users and, on its own, may well be a driving force for firms that build big, multi-storey buildings. The Quantum idea is that there would be a small number of large applications generating design content, with a large number of web-based applications taking these outputs and performing tasks, such as rendering, analysis, takeoffs and so on.

At the moment, a whole model needs to be loaded and then filtered in a subtractive and protracted process; through Quantum, the filtering can happen before anything is loaded. And should you want to see everything, you can load it all in a coordinated view, like Navisworks.

One of the benefits of writing Autodesk Fusion from scratch was that Autodesk could finally offer applications outside of Windows. As Fusion can run purely in a browser, it can run on anything, even an iPad — but Autodesk has also delivered a Mac-native version, a long-held wish for Revit customers.

Unfortunately, Revit is staying as it is, a desktop Windows application — and that means only available on Windows. However, all new Quantum applications developed have been web-based, so should work on any system.

Looking into the distance, I assume that, as Quantum grows, Revit will have more capabilities taken from it and rewritten for the cloud, lightening the local load and democratising Revit’s capabilities to extend to all parties in a project. This is probably the smartest way to create a new generation of Revit, while maintaining a popular application. Suddenly, Revit has a development path that actually promises more than the incremental updates that we have seen released each year.

Despite Awe’s vision of task-specific views of data, Revit will not be going back to being three separate products for architecture, structural and MEP. It also means that while Revit will be updated, it will not evolve to own more direct manufacturing capabilities or to have more civils / topology capabilities. These will now appear in Quantum and data will be available, on demand, in a variety of granular options.

Where a number of Revit users are connected via Quantum, other designers’ edits will obviously be seen in real time. Here, Autodesk is looking at ways for participants to lock geometry and set private workspaces so the process of collaborative design does not become too chaotic. Awe suggests a comparison to the way GitHub works: sometimes it may be appropriate to lock to a version of someone else’s work and update later.

Rapid development

It will also be much easier for Autodesk’s AEC team to add new functionality into Quantum than it would be to a traditional monolithic application, said Jim Lynch. For example, Autodesk developers took an open source analysis package and had it working within a day. Adding the equivalent application to a large codebase, such as Revit or AutoCAD, by contrast, would have taken months, as it would need to be integrated to the database and then the graphics system. With Quantum, this is a trivial issue. And in the future, it suggests that Autodesk can acquire applications and point solutions and rapidly deploy them to users of the Quantum ecosystem.

Awe added, “We had a group of developers experiment with the system, without much of an introduction, and they created a Minecraft-style modelling application, where multiple people were collaborating simultaneously to produce the designs. Each user had an independent view of the information, while a central screen offered an aggregated view, like Navisworks. This gives us enormous flexibility in how we can build applications, move the data around, what kind of independent specialist views we can create, versus what we can do now, which is give everybody the same tool, with the same model, and force them to filter it down to an appropriate view.”

Quantum applications

So with Quantum providing this ecosystem of sharing applications, I asked, what would an actual product that sits on top of all this look like?

“We still have a long way to go,” Awe replied. “The friction in the industry as it stands, is that you have a design-heavy tool such as Revit, which coordinates all the systems, all the verticals, and then you throw the data over a wall to the people who have to make and fabricate it. The data is not at the right level of detail or the right composition, so engineers have to rebuild it from scratch and throw away most of the data.

“Quantum takes us in a new direction where a specific system, such as a curtain wall, can be designed from concept to fabrication. And then we can take those individual systems and stitch them together around their interface points. For instance, how the curtain wall attaches to the structure and how the building footprint impacts the site. The applications have to agree on certain interface points throughout the process. If you like, it’s a contract between the systems and between the levels of detail.”

This makes sense, as Revit isn’t an application designed to drive cutting machinery, and nor should design and fabrication be solved in a single application. Adding the detail required for fabrication would have a negative impact on the size of the model database and there are better, manufacturing-specific CAD systems out there. By agreeing these interface points between systems, at different levels of detail, in different formats, Quantum enables the selection of the right tool for the right job, while still maintaining a linked ecosystem.

The key seems to be these interface points between the systems. This means geometry and data don’t have to be exported or translated, and each party can keep its levels of detail separate and work in whatever tool it needs.

Awe explained that, while modelling, if interface points change, this could automatically update the design in another system or raise a flag to the designer to indicate a change has been made.

This is a massive benefit over what happens now, where an architect will continually lob over a hefty Revit model with each revision, leaving other project participants having to figure out what’s changed. For fabricators, this is just noise in the process and wastes their time.

Quantum parametrics?

While Quantum is aimed at bypassing existing workflow log-jams, it also brings with it new potential problems. In the world of Revit, Dynamo, Grasshopper, ArchiCAD and GC, we have lots of parametric systems driving geometry. In an interlinked world, how would Quantum deal with conflicting and automatic drivers?

“We do imagine that there will be multiple parametric systems, and our goal is to make them collaborative, not competitive,” said Awe. “Having all the disciplines intertwined into the same model and editor can be unnecessarily restrictive.

“We imagine a Quantum ecosystem to be more decoupled, giving each discipline the freedom to choose the tool of their choice for modelling their specific part of the building. So, as an example: the façade designer may use Dynamo or Grasshopper as their main authoring tool; the architect may use something like Revit; and the structural engineer may use a brand-new, web-based authoring tool. Each one is responsible for the parametrics of their own system and there should not be any conflict. But of course, what happens when those systems have to coordinate? Our theory is that having them interact and communicate via the interface points that we discussed earlier is beneficial in several ways.

“It decouples segments of the model, which allows the method of authoring and the level of detail to vary depending on the need. Just as I could mix Dynamo scripts and Revit parametric constraints, I could also mix conceptual level of detail for one system with fabrication-level of detail for another. This is much more flexible than having to do the entire model in one level of detail and then trying to throw it over the wall to the next person, or to attempt putting multiple levels of detail into the same model.

“It allows for the ‘natural’ boundaries of professional discipline to be represented in workflows. As a structural engineer, I can do my work in my own chosen tool. When I am done working things out, I submit my work back into the system, which triggers an update of the interface points I agreed on with the architect. The architect then gets a controlled event that they can react to. They can easily see what changed and make the decision to accept as-is, or start some negotiation or redesign based on the proposed change. So, in this case, the parametric systems aren’t competing for control of the reaction to those changes. They are informing each other in a more rational, controlled way that respects the normal boundaries of those two professions. This same dynamic would hold for interactions between architect and site engineer, architect and façade engineer, and so on.

“We feel confident in this interaction among the major systems that have heavy fabrication / make requirements. However, it will get a little trickier depending on how fine-grained and specialised each of the tools gets. Will there be a ‘stairway authoring tool’ for non-standard stairs? What about panellised walls? We imagine that Revit will continue to play a major role in the BIM process and then reach out to the Quantum ecosystem when there is a complex system in the building that has heavy Design / Make / Use lifecycle considerations. We might run into some ‘competing parametrics’ in some of those smaller systems, but our goal is to overcome that the same way we do for the larger systems, maybe with a few subtle nuances.”
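The ‘controlled event’ Awe describes can be pictured as a small review workflow around the shared interface points. The Python sketch below is one possible reading, under the assumption that a change is held as a proposal until the other party accepts or rejects it. The class and method names (`SharedInterface`, `ChangeProposal`, `propose`, `accept`, `reject`) are hypothetical and not taken from Autodesk.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeProposal:
    """A proposed move of one agreed interface point."""
    point_name: str
    old: tuple
    new: tuple
    author: str


@dataclass
class SharedInterface:
    """Interface points agreed between two disciplines (hypothetical)."""
    points: dict = field(default_factory=dict)    # name -> agreed position
    pending: list = field(default_factory=list)   # proposals awaiting review

    def propose(self, author, name, new_position):
        # Submitting work does not overwrite the agreed value;
        # it raises an event for the other party to review.
        proposal = ChangeProposal(name, self.points[name], new_position, author)
        self.pending.append(proposal)
        return proposal

    def accept(self, proposal):
        self.points[proposal.point_name] = proposal.new
        self.pending.remove(proposal)

    def reject(self, proposal):
        # The agreed value stays as it was; negotiation happens elsewhere.
        self.pending.remove(proposal)


shared = SharedInterface(points={"column-A1-top": (0.0, 0.0, 3.5)})

# The structural engineer submits a change from their own tool.
proposal = shared.propose("structural engineer", "column-A1-top", (0.0, 0.0, 3.65))
before_review = shared.points["column-A1-top"]   # still the agreed value

# The architect sees what changed (proposal.old vs proposal.new) and accepts.
shared.accept(proposal)
```

Because neither tool writes directly into the other’s model, the two parametric systems never compete to react to the same change.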

Sharing data and the cloud

Autodesk has made no secret of its cloud-based vision of the future of design tools. At the ‘sneak peek’ at Autodesk University, it quickly became apparent that Quantum was essentially an always-on system. If a designer were to decouple and go offline, how could that work be added back into the mix, when multiple changes might continue to be made? Synchronising project data online and offline is a challenge, so does this mean you need to be always online with Quantum?

“I’d be lying if I said we had it all figured out,” explained Awe. “Many of the applications we have been working on and have demonstrated are web-only applications, so would not be available if you didn’t have a web connection. I’ve been in this industry a long time and collaboration and interoperability have always been huge barriers to realising the full benefits of BIM. Quantum’s capabilities make a lot of those issues go away. Now, we have some new problems to solve, but we think we have made considerable progress in tackling long-established pain points.

“We are just getting started on implementation,” he continued, “and to date, we have separated the data out by discipline, so that each company or team has their own pile of data that they own, but the interface points are shared by the two (or more) parties that have agreed to collaborate around those shared points. They can push resolved sets of geometry for others to use for coordination and visualisation, but they have agreed to share those as well. They don’t have to give up any of their native data used by whatever tool they use to author.

“But, as I’m sure you can guess, it gets tricky pretty fast when you consider all the possible workflows. So, lots of work still to do here. We are fresh off of considering the realities of ‘ownership of data’ with the BIM360 Docs project, so we understand most of the issues. One thing that is different is that we are reducing the amount of information that must be shared in order to collaborate and we hope that will have a positive effect. But, the bottom line is that it’s still early and we will need to work with customers to help us figure out what they are comfortable with. There are new opportunities over file-based systems for sure, but there’s also some uncharted territory.”

Quantum API

Obviously, ecosystems need populating and Autodesk aims to have an API available so that the development community can provide applications and services, all operating around the Design / Make / Use continuum and the concept of ‘Data at the Centre’ (without that data literally residing in one database).

According to Awe: “You can’t do that by being a closed system. As we are building out the pieces for prototype workflows, we always make it work for at least two cases: first, one of our tools, like Revit; and second, a generic stand-in tool, like Excel or standard JSON data that any JavaScript app could produce.

“As an example, for the façade application to get its interface points, we would make a modification to Revit that can serve those changes up to a neutral schema. We would also make an Excel version of those points that can feed into that neutral schema. So, in short, there are no assumptions about reading / writing directly to Autodesk tools, and that gives us the flexibility to insert anything into that same spot in the workflow.”
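A minimal Python sketch of that ‘two cases’ rule follows. The neutral schema here (a list of `{"id", "xyz"}` records) is invented purely for illustration, as are the function names and the field names of the made-up authoring-tool export; Autodesk has not published a schema. The point is that the consuming application reads only the neutral form, so it cannot tell which tool produced it.

```python
import csv
import io
import json


def from_authoring_tool(elements):
    """Adapter 1: a made-up export format with its own field names."""
    return [
        {"id": e["ElementId"], "xyz": [e["X"], e["Y"], e["Z"]]}
        for e in elements
    ]


def from_spreadsheet(csv_text):
    """Adapter 2: a generic stand-in, here CSV text saved from a spreadsheet."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {"id": row["id"], "xyz": [float(row["x"]), float(row["y"]), float(row["z"])]}
        for row in reader
    ]


def facade_consumer(points_json):
    """The consuming application reads only the neutral JSON."""
    points = json.loads(points_json)
    return sorted(p["id"] for p in points)


tool_points = from_authoring_tool(
    [{"ElementId": "ip-1", "X": 0.0, "Y": 0.0, "Z": 3.5}]
)
sheet_points = from_spreadsheet("id,x,y,z\nip-1,0.0,0.0,3.5\n")

# Both sources produce identical neutral records.
same = tool_points == sheet_points
ids = facade_consumer(json.dumps(sheet_points))
```

Swapping the source tool means writing one new adapter and changing nothing downstream, which is the flexibility Awe is describing.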

The development team also said that this API would be available to competitors, as they recognise that AEC firms now run multiple tools to complete their projects. I was shown many ‘orchestration graphs’ of target customers’ toolsets. This could potentially have big benefits for companies like Bentley, which has a huge suite of analysis tools, and popular point solutions, such as McNeel Rhino. The big question will be, will these vendors want to play in Autodesk’s ecosystem? I suspect that customers will make that decision for them, by choosing to adopt (or not) a Quantum-based system.

Final thoughts on Quantum

Quantum is a lot to take in. The first thing to note is that there is no next-generation Revit and that Revit is Windows-based for the foreseeable future. As it develops, Quantum will take a lot of the weight off Revit by removing the database millstone around its neck. Over time, Revit is likely to see some of its functionality dissolve into Quantum-based, on-demand web applications, further lightening the load on the desktop. I’d even suggest that, over a longer time period, we could well see Revit dissolve into the Quantum framework, becoming a series of applications.

Quantum will, however, offer some immediate benefits for users with compliant applications — namely, dynamic updates of geometry and data properties, enabling collaborative working in a new and exciting way. Autodesk has dodged the bullet of making a humongous online database and solved the problem of trying to expand Revit into areas in which it was never ever intended to play (such as civils and fabrication), and which quite frankly would break it.

By breaking down the development work into smaller modules and enabling rapid deployment of new tools using the cloud, Autodesk can quickly flesh out its AEC offering and rapidly integrate its manufacturing solutions. For third-party developers, this also opens new possibilities and potentially enables design firms to integrate disparate arrays of solutions from different vendors.

Quantum is a very ambitious vision and it’s not a shipping product, but the potential to kill some of the problems that Revit BIM workflows suffer from is significant. As Awe put it: “It’s still early in the process and because we are talking about an ecosystem of products and services that will evolve over time, it’s difficult to give specifics beyond general trends and theories that we think may be likely.

“Second, the breakthroughs that we are very excited about are the ones that have been holding back the industry and the full potential of BIM for many years: collaboration and interoperability of data and tools.

“Leveraging cloud technologies allows for those promising breakthroughs, but it also introduces some new, but exciting, challenges that we are eager to work through. So, while we don’t have all the answers just yet, we are confident that it is a promising direction and we are investing in it accordingly.”

While the technology is one thing, it will be interesting to see how customers feel about a system that really needs to have everyone online to work, should that prove to be the end result. It’s possible that the benefits may outweigh the limitations, but I’m not convinced that this would play well in countries with less-evolved infrastructures.

When the AEC industry moved to BIM, interoperability became an issue as we lacked decent interchange standards. While Quantum attempts to solve that issue, it also makes Autodesk customers even more reliant on Autodesk products, services and pricing.

I’ve talked with many enterprise license customers who have been shocked at the increase in premiums charged for their next three-year deal for design tools, services and consultancy from Autodesk. After all, it’s one thing to have your authoring tool based on one company’s technology, but it’s a much more significant proposition to hand over your complete process to that vendor. For competitive third parties, this would also be seen as potentially playing into Autodesk’s stated ambition to ‘own the platform’.

For now, from what I have seen, there is a touch of genius to creating a new, ‘third way’ to reinvigorate a mature product, providing a platform to renew its capabilities, while solving serious pain points for customers and developers and, at the same time, bringing powerful capabilities to team collaboration.

Awe closed our chat by saying, “For now, we are heads down, and working to develop a platform ecosystem that we consider the future of Design / Make / Use workflows for the AEC industry.”
