Autodesk boldly told the industry that there would be no second-generation Revit. Instead, it would develop a cloud-based platform for all project data, called Forma. This month the first instalment arrived, with a focus on conceptual design. But Forma will become so much more than this. Martyn Day gets to the heart of the platform and how it handles data.

In early May, Autodesk announced the first instalment of its next-generation cloud-based platform, Forma, with an initial focus on conceptual design (see box out at end). The Forma brand was first announced at Autodesk University 2022 in New Orleans. While the name was new, the concept was not.

Back in 2016, the then vice president of product development at Autodesk, Amar Hanspal, announced Project Quantum, the development of a cloud-based replacement for Autodesk’s desktop AEC tools.

In the seven years that followed, progress has been slow, but development has continued, going through several project names, including Plasma, and project teams. While the initial instalment of Forma could be considered to be just a conceptual design tool (essentially a reworking and merging of the acquired Spacemaker toolset with Autodesk FormIt), this launch should not be seen as anything other than one of the most significant milestones in the company’s AEC software history.

In the early 2000s, Autodesk (and, I guess, a lot of the software development world) decided that the cloud, with a Software-as-a-Service (SaaS) business model, was going to be the future of computing. Desktop-based applications require local computing power, create files and caches, and generate duplicates. All of this requires management and dooms collaboration to be highly linear.

The benefits of having customers’ data and the applications sat on a single server include seamless data sharing and access to huge amounts of compute power. Flimsy files are replaced by extensible and robust databases, allowing simultaneous delivery of data amongst project teams.

With the potential for customers to reduce capital expenditure on hardware and easy management of software deployment and support, it’s a utopia based on modern computer science and is eminently feasible. However, to move an entire install base of millions from a desktop infrastructure, with trusted brands, to one that is cloud-based, does not come without its risks. This would be the software equivalent of changing a tyre at 90 miles an hour.

The arrival of Forma, as a product, means that Autodesk has officially started that process and, over time, both new functionality and existing desktop capabilities will be added to the platform. Overall, this could take five to ten years to complete.

The gap

Autodesk’s experiments with developing cloud-based design applications have mainly been in the field of mechanical CAD, with Autodesk Fusion in 2009. While the company has invested heavily in cloud-based document management, with services such as Autodesk Construction Cloud, this doesn’t compare to the complexity of creating an actual geometry modelling solution. To create Fusion, Autodesk spent a lot of time and money to come up with a competitive product to Dassault Systèmes’ Solidworks, which is desktop-based. The thinking was that by getting ahead on the inevitable platform change to cloud, Autodesk would have a contender to capture the market. This has happened many times before, with UNIX to DOS and DOS to Windows.

Despite these efforts, Fusion has failed to further Autodesk’s penetration of its competitors’ install base. At the same time the founder of Solidworks, Jon Hirschtick, also developed a cloud-based competitor, called Onshape, which was ultimately sold to PTC. While this proved that the industry still thought that cloud would, at some point, be a major platform change, it was clear that customers were ready neither for a cloud-based future nor to leave the current market-leading application.

Years later and all Solidworks’ competitors are still sat there waiting for the dam to burst. Stickiness, loyalty and long-honed skills could mean they will be waiting a long time.

This reticence to move is even more likely to be found in the more fragmented and workflow-constrained AEC sector. On one hand, the pressure to develop and deliver this over ten years would seem quite acceptable. The problem comes with Autodesk’s desktop products, which have had a historically low development velocity and a growing, vocal and frustrated user base.

Back in 2012, I remember having conversations with C-level Autodeskers, comparing the cloud development in Autodesk’s manufacturing division and wondering when the same technologies would be available for a ‘next-generation’ Revit. With this inherent vision that the cloud would be the next ‘platform’, new generations of desktop tools like Revit seemed like a waste of resources. Furthermore, Autodesk was hardly under any pressure from competitors to go down this route.

However, I suspect that not many developers at the time would have conceived that it would take so long for Autodesk to create the underlying technologies for a cloud-based design tool. The idea that Revit, in its current desktop-based state, could survive another five to ten years before being completely rewritten for the cloud is, to me, inconceivable.

Angry customers have made their voices clearly heard (e.g. Autodesk Open Letter and Nordic Open Letter Group). So, while Forma is being prepared, the Revit development team will need to do a serious bit of rear-guard action development to keep customers happy in their continued investment in Autodesk’s aging BIM tool. And money spent on shoring up the old code and adding new capabilities is money that’s not being spent on the next generation.

This isn’t just a case of providing enhancements for subscription money paid. For the first time in many decades, competitive software companies have started developing cloud-based BIM tools to go head-to-head with Revit (see Arcol, Snaptrude, Qonic and many others that have been covered in AEC Magazine over the past 12 months).

Autodesk has finally created the platform change event that it hoped for, without user demand, but it has come at a time when Revit is going to be challenged like never before.

The bridgehead

While Forma may sound like a distant destination, and the initial offering may seem inconsequential in today’s workflows, it is the bridgehead on the distant shore. The quickest way to build a bridge is to work from both sides towards the middle and that seems to be exactly what Autodesk is planning to do.

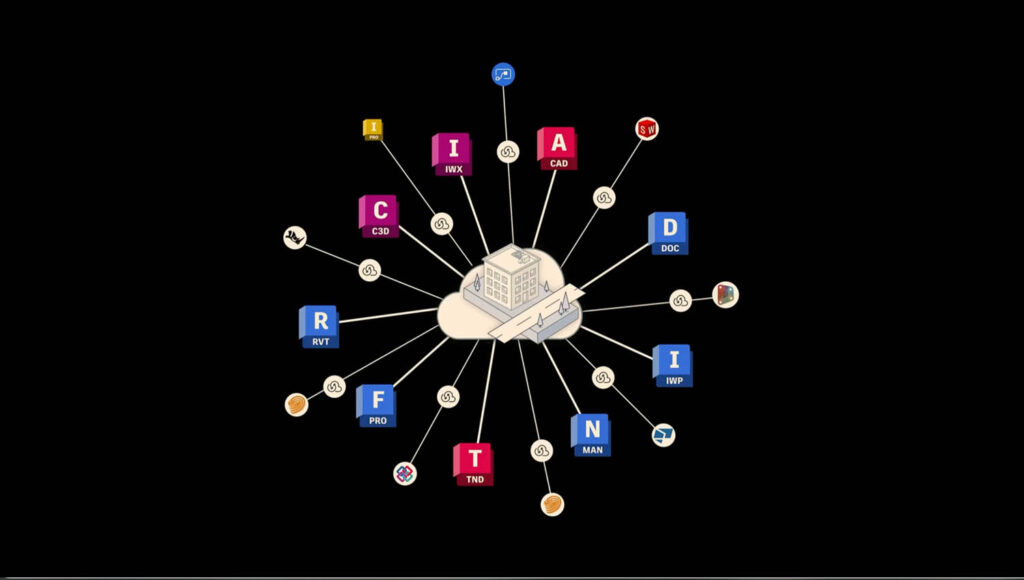

Forma is based on a unified database, which is capable of storing all the data from an AEC project in a ‘data lake’. All your favourite Autodesk file formats (DWG, RVT, DWF, DXF etc.) get translated to be held in this new database (schema), along with those from third parties such as Rhino.

The software architecting of this new extensible unified database forms the backbone to Autodesk’s future cloud offering and therefore took a considerable amount of time to define.

Currently, Autodesk’s desktop applications don’t use this format, so on-the-fly translation is necessary. However, development teams are working to seamlessly hook up the desktop applications to Forma. With respect to Revit, it’s not unthinkable that, over time, the database of the desktop application will be removed and replaced with a direct feed to Forma, with Revit becoming a very ‘thick client’. Eventually the functionality of Revit will be absorbed into thin-client applets, based on job role, which will mainly be delivered through browser-based interfaces. I fully expect Revit will get re-wired to smooth continuity before it eventually gets replaced.

Next-generation database

One of the most significant changes that the introduction of Forma brings is invisible. The new unified database, which will underpin all federated data, lies at the heart of Autodesk’s cloud efforts. Moving away from a world run by files to a single unified database provides a wide array of benefits, not only for collaboration but also to individual users. To understand the structure and capabilities of Forma’s new database, I spoke with Shelly Mujtaba, Autodesk’s VP of product data.

Data granularity is one of the key pillars of Autodesk’s data strategy, as Mujtaba explained, “We have got to get out of files. We can now get more ‘element level’, granular data, accessible through cloud APIs. This is in production; it’s actually in the hands of customers in the manufacturing space.

“If you go to the APS portal (Autodesk Platform Services, formerly Forge), you’ll see the Fusion Data API. That is the first manifestation of this granular data. We can get component level data at a granular level, in real time, as it’s getting edited in the product. We have built similar capabilities across all industries.

“The AEC data model is something that we are testing now with about fifteen private beta customers. So, it is well underway — Revit data in the cloud — but it’s going to be a journey, it is going to take us a while, as we get more and richer data.”

To build this data layer, Mujtaba explained that Autodesk is working methodically around workflows which customers use, as opposed to ‘boiling the whole ocean’. The first workflow to be addressed was conceptual design, based on Spacemaker. To do this, Autodesk worked with customers to identify the data needs and learn the data loops that came with iterative design. This was also one of the reasons that Rhino and TestFit are among the first applications that Autodesk is focussing on via plug-ins.

Interoperability is another key pillar, as Mujtaba explained, “So making sure that data can move seamlessly across product boundaries, organisational boundaries, and you’ll see an example of that also with the data sheet.”

At this stage, I brought up Project Plasma, which followed on from Project Quantum. Mujtaba connected the dots, “Data exchange is essentially the graduation of Project Plasma. I also led Project Plasma, so have some history with this,” he explained. “When you see all these connectors coming out, that is exactly what we are trying to do, to allow movement of data outside of files. And it’s already enabling a tonne of customers to do things they were not able to do before, like exchange data between Inventor and Revit at a granular level, even Inventor and Rhino, even Rhino and Microsoft Power Automate. These are not [all] Autodesk products, but [it’s possible] because there’s a hub and spoke model for data exchange.

“Now, Power Automate can listen in on changes in Rhino and react to it and you can generate dashboards. Most of the connectors are bi-directional. Looking at the Rhino connector you can send data to Revit, and you can get data back from Revit. Inventor is now the same (it used to be one directional, where it was Revit to Inventor only) so you can now take Inventor data and push it into Revit.”

Find this article plus many more in the May / June 2023 Edition of AEC Magazine

Offline first

In the world of databases there have been huge strides to increase performance, even with huge datasets. One only has to look to the world of games and technologies like Unreal Engine.

One of the terms we are likely to hear a lot more in the future is ECS (Entity Component System). This is used to describe the granular level of a database’s structure, where data is defined by component, in a system, as opposed to just being a ‘blob in a hierarchy data table’.
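To make the idea concrete, here is a minimal Python sketch of an entity component system. It is not Autodesk's implementation: the `World`, `Geometry` and `Cost` names and the cost figures are invented purely for illustration. What it shows is the pattern itself, where an element is just an id, its data lives in separate components, and a 'system' is logic that runs over whichever entities carry the components it needs.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Geometry:
    """Hypothetical component: a simple footprint and height in metres."""
    width: float
    depth: float
    height: float


@dataclass
class Cost:
    """Hypothetical component: an estimated cost per square metre of footprint."""
    per_square_metre: float


class World:
    """Stores components per type, keyed by entity id, not one big object per element."""

    def __init__(self):
        self._ids = count(1)
        self._components = {}  # component type -> {entity id -> component}

    def create_entity(self, *components):
        entity = next(self._ids)
        for component in components:
            self.add(entity, component)
        return entity

    def add(self, entity, component):
        self._components.setdefault(type(component), {})[entity] = component

    def query(self, *types):
        """Yield (entity, components...) for entities that have every requested type."""
        stores = [self._components.get(t, {}) for t in types]
        if not stores:
            return
        for entity in sorted(set.intersection(*(set(s) for s in stores))):
            yield (entity, *(s[entity] for s in stores))


def footprint_costs(world):
    """A 'system': runs over every entity that has both Geometry and Cost."""
    return {
        entity: geom.width * geom.depth * cost.per_square_metre
        for entity, geom, cost in world.query(Geometry, Cost)
    }


world = World()
tower = world.create_entity(Geometry(20.0, 30.0, 90.0), Cost(1500.0))
shed = world.create_entity(Geometry(4.0, 5.0, 3.0))  # no Cost component yet
costs = footprint_costs(world)
```

Because the shed has no `Cost` component, the costing system simply skips it. Attaching one later with `world.add(shed, Cost(100.0))` brings it into scope without changing any class hierarchy, which is the loose coupling the pattern is known for.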

I asked Mujtaba if this kind of games technology was being used in Forma. He replied, “ECS is definitely one of the foundational concepts we are using in this space. That’s how we construct these models. It allows us extensibility; it allows us flexibility and loose coupling between different systems. But it also allows us to federate things. This means we could have data coming from different parties and be able to aggregate and composite through the ECS system.

“But there’s many other things that we have to also consider. For example, one of the most important paradigms for Autodesk Fusion has been offline first — making sure that while you are disconnected from the network, you can still work successfully. We’re using a pattern called command query responsibility separation. Essentially, what we’re doing is we’re writing to the local database and then synchronising with the cloud in real time.”

This addresses one of my key concerns that, as Revit gets absorbed into Forma over the next few years, users would have to constantly be online to do their work. It’s really important that team members can go off and not be connected to the Internet and still be able to work.
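As a rough illustration of the offline-first pattern, the Python sketch below writes every change to a local store and forwards the recorded changes to a cloud model only when a connection is available. Everything here is an assumption made for the example (the `LocalStore` and `CloudModel` classes, the in-memory dictionaries, the explicit `sync()` call); a real system would synchronise in the background and deal with conflicts, which this sketch ignores.

```python
class CloudModel:
    """Stand-in for the cloud-side data model (hypothetical, in-memory)."""

    def __init__(self):
        self.elements = {}

    def apply(self, changes):
        for element_id, properties in changes:
            self.elements.setdefault(element_id, {}).update(properties)


class LocalStore:
    """Accepts every write locally, then forwards only the pending changes."""

    def __init__(self, cloud):
        self.cloud = cloud
        self.online = False
        self.elements = {}
        self.pending = []  # changes not yet sent to the cloud

    def write(self, element_id, **properties):
        # The write (command) path never waits on the network.
        self.elements.setdefault(element_id, {}).update(properties)
        self.pending.append((element_id, dict(properties)))

    def read(self, element_id):
        # The read (query) path is always answered from local data.
        return dict(self.elements.get(element_id, {}))

    def sync(self):
        """Push pending changes if connected; return how many were sent."""
        if not self.online or not self.pending:
            return 0
        sent = len(self.pending)
        self.cloud.apply(self.pending)
        self.pending = []
        return sent


cloud = CloudModel()
store = LocalStore(cloud)
store.write("wall-1", height=3.0)
store.write("wall-1", material="concrete")
offline_sent = store.sync()   # still offline: nothing leaves the machine
store.online = True
online_sent = store.sync()    # only the two recorded changes are sent
```

The point of the design is that the user keeps working against local data at all times, and what travels to the server is the list of changes, not a whole model.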

Mujtaba reassuringly added, “We are designing everything as offline first, while, at some point of time, more and more of the services will be online. It really depends on what you’re trying to do. If you’re trying to do some basic design, then you should be able to just do it offline. But, over time, there might be services such as simulation, or analytics, which require an online component.

“We are making sure that our primary workflows for our customers are supported in an offline mode. And we are spending a lot of time trying to make sure this happens seamlessly. Now, that is not to say it’s still file-based; it’s not file based. Every element that you change gets written into a local data store and then that local data source synchronises with the cloud-based data model in an asynchronous manner.

“So, as you work, it’s not blocking you. You continue to work as if you’re working on a local system. It also makes it a lot simpler and faster, because you’re not sending entire files back and forth, you’re just sending ‘deltas’ back and forth. What’s happening on the server side is all that data is now made visible through a GraphQL API layer.

“GraphQL is a great way to federate different types of data sources. Because we have different database technologies for different industries, because they almost always have different workflows. Manufacturing data is really relationship heavy, while AEC data has a lot of key value pairs (associated values and a group of key identifiers). So, while the underlying databases might be different, on top of that we have a GraphQL federation layer, which means from a customer perspective, it all looks like the same data store. You get a consistent interface.”
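The federation idea can be sketched without any GraphQL tooling at all. The Python below is plain code, not GraphQL, and all of the names and sample data are hypothetical: it puts one lookup in front of two differently shaped stores, one relationship heavy and one key-value heavy, so that callers always receive the same shape of answer.

```python
class ManufacturingStore:
    """Hypothetical relationship-heavy store: components linked by edges."""

    def __init__(self):
        self.names = {"asm-1": "Bracket assembly", "part-7": "Bolt"}
        self.edges = [("asm-1", "contains", "part-7")]

    def fetch(self, element_id):
        if element_id not in self.names:
            return None
        related = [(kind, dst) for src, kind, dst in self.edges if src == element_id]
        return {
            "id": element_id,
            "properties": {"name": self.names[element_id]},
            "related": related,
        }


class AecStore:
    """Hypothetical key-value-heavy store: a flat property bag per element."""

    def __init__(self):
        self.bags = {"wall-1": {"name": "External wall", "height_m": 3.0}}

    def fetch(self, element_id):
        bag = self.bags.get(element_id)
        if bag is None:
            return None
        return {"id": element_id, "properties": dict(bag), "related": []}


class FederationLayer:
    """One entry point; each source answers in the same id/properties/related shape."""

    def __init__(self, *sources):
        self.sources = sources

    def element(self, element_id):
        for source in self.sources:
            found = source.fetch(element_id)
            if found is not None:
                return found
        return None


api = FederationLayer(ManufacturingStore(), AecStore())
wall = api.element("wall-1")
assembly = api.element("asm-1")
```

In a real GraphQL federation the routing is driven by a shared schema, not a loop over sources, but the outcome for the caller is similar: one interface, regardless of which database sits underneath.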

Common and extensible schemas

One of the key benefits of moving to a unified database was that data from all sorts of applications can sit side by side, level 200 / 300 AEC BIM model data with Inventor fabrication-level models. I asked Mujtaba to provide some context to how the new unified database schema works.

“It’s a set of common schemas, which we call common data currencies, and that spans across all our three industries,” he explained. “So, it could be something basic, like basic units and parameters, but also definitions or basic definitions of geometry. Now, these schemas are semantically versioned. And they are extensible. So that allows other industry verticals to be able to extend those schemas and define specific things that they need in their particular context. So, whenever this data is being exchanged, or moved across different technologies under the covers, or between different silos, you know they’re using the language of the common data currencies.

“On top of that data model, besides ECS, we also have a rich relationship framework, creating relationships between different data types and different levels of detail. We spend a lot of time making sure that relationship, and that way of creating a graph-like structure, is common across all industries. So, if you’re going through the API, if you’re looking at Revit data and how you get the relationship, it will be the same as how you get Fusion relationships.”

To put it in common parlance, it’s a bit like PDF. There is a generic PDF definition, which has a common schema for a whole array of stuff. But then there’s PDF/A, PDF/E and PDF/X, which are more specific to a type of output. Autodesk has defined the nucleus, the schema for everything that has some common shared elements. But then there are some things that you just don’t need if you’re in an architecture environment, so there will be flavours of the unified database that have extensions. But convergence is happening and increasingly AEC is meeting manufacturing in the offsite factories around the world.
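A small Python sketch helps show what a common, semantically versioned and extensible schema might look like in practice. The `Schema` class, the field names and the compatibility rule are all assumptions made for illustration; Autodesk has not published its rules in this article. The rule assumed here is the usual semantic versioning reading: a reader can handle data written against the same major version with an equal or newer minor version.

```python
from dataclasses import dataclass


def parse_version(text):
    """Split 'major.minor.patch' into a tuple of integers."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def can_read(reader_version, data_version):
    """Assumed rule: same major version, and the data is not older in minor."""
    reader, data = parse_version(reader_version), parse_version(data_version)
    return reader[0] == data[0] and data[1] >= reader[1]


@dataclass(frozen=True)
class Schema:
    name: str
    version: str
    fields: dict          # field name -> type name
    base: "Schema | None" = None

    def all_fields(self):
        """Fields from the common layer plus those added by this extension."""
        inherited = self.base.all_fields() if self.base else {}
        return {**inherited, **self.fields}

    def extend(self, name, version, extra_fields):
        """Industry extensions may add fields but not redefine common ones."""
        clash = set(extra_fields) & set(self.all_fields())
        if clash:
            raise ValueError(f"extension redefines common fields: {sorted(clash)}")
        return Schema(name, version, dict(extra_fields), base=self)

    def missing_fields(self, record):
        """List the schema fields that a record does not provide."""
        return [key for key in self.all_fields() if key not in record]


common = Schema("common.element", "1.2.0", {"id": "string", "length_unit": "string"})
wall = common.extend("aec.wall", "1.0.0", {"fire_rating": "string"})
missing = wall.missing_fields({"id": "wall-1", "fire_rating": "60min"})
```

Here the wall schema inherits `id` and `length_unit` from the common layer, so the sample record is reported as missing `length_unit`. This mirrors the PDF analogy: a shared nucleus, with flavours layered on top.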

Mujtaba explained that, because of convergence, Autodesk is spending a lot of time on governance of this data model, trying to push as much as possible into the common layer.

“We’re being very selective when we need to do divergence,” he said. “Our strategy is, if there is divergence, let it be intentional not accidental. Making sure that we make a determined decision that this is the time to diverge but we may come back at some point in time. If those schemas look like they’re becoming common across industries, then we may push it down into the common layer.

“Another piece of this, which is super important, is that these schemas are not just limited to Autodesk. So obviously, we understand that we will have a data model, and we will have schemas, but we are opening these schemas to the customers so they can put custom properties into the data models. That has been a big demand from customers. ‘So, you can read the Revit data, but can I add properties? For example, can I add carbon analysis data, or cost data back into this model?’

“Because customers would just like to manage it within this data model, custom schemas are also becoming available to customers too.”

Back in the days of AutoCAD R13, Autodesk kind of broke DWG, by enabling ARX to create new CAD objects that other AutoCAD users could not open or see. The fix for this was the introduction of proxy objects and ‘object enablers’ which had to be downloaded, so ARX objects could be manipulated. To this backdrop, the idea that users can augment Forma’s schema was a concern. Mujtaba explained, “You can decide the default level of visibility to only you can see it, but you might say this other party can see it, or you can say this is universally visible, so anyone can see it.

“To be honest, we are learning so we have a private beta running within the Fusion space of extension data extensibility with a bunch of customers, because this is a big demand from our customers and partners. So, we are learning.

“The visibility aspect came up, and, in fact, our original visibility goal was only ‘you can see this data’. And then we learned from the customers that no, they would selectively want to make this data visible to certain parties or make it universally visible.

“We are continuing to do very fast iterations. Now instead of going dark for several years and trying those things and trying to boil the ocean, we are saying, ‘here’s a workflow we’d like to solve. Let’s bring in bunch of customers and let’s solve this problem. And then move to the next workflow’. So that’s why you see things coming out at a very rapid pace now, like the data exchange connectors, and the data APIs”.
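The three visibility levels Mujtaba describes for custom properties (only you, selected parties, or everyone) can be modelled in a few lines of Python. This is a sketch under stated assumptions, not Autodesk's API: the `CustomProperty` class, the `Visibility` enum and the property names and values are all made up for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Visibility(Enum):
    PRIVATE = "private"      # only the owner can see it
    SHARED = "shared"        # the owner plus named parties
    UNIVERSAL = "universal"  # anyone can see it


@dataclass
class CustomProperty:
    """Hypothetical custom property attached to a model element."""
    name: str
    value: object
    owner: str
    visibility: Visibility = Visibility.PRIVATE
    shared_with: frozenset = frozenset()

    def visible_to(self, party):
        if party == self.owner or self.visibility is Visibility.UNIVERSAL:
            return True
        return self.visibility is Visibility.SHARED and party in self.shared_with


def view(properties, party):
    """Return only the custom properties a given party is allowed to see."""
    return {p.name: p.value for p in properties if p.visible_to(party)}


props = [
    CustomProperty("embodied_carbon_kgco2e", 1200.0, owner="architect"),
    CustomProperty(
        "unit_cost", 85.0, owner="architect",
        visibility=Visibility.SHARED, shared_with=frozenset({"contractor"}),
    ),
    CustomProperty(
        "fire_rating", "60min", owner="architect",
        visibility=Visibility.UNIVERSAL,
    ),
]

contractor_view = view(props, "contractor")
supplier_view = view(props, "supplier")
```

Defaulting to private matches the original goal described in the interview, with sharing as an explicit choice per property. Unlike the old proxy object problem, a party without access simply does not receive the property; the rest of the element stays readable.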

It’s clear that the data layer is still to some degree in development, and I don’t blame Autodesk for breaking it down into workflows and connecting the tools that are commonly used. This is a vast development area and an incredibly serious undertaking on behalf of Autodesk’s development team. It seems that the strategy has been clearly identified and the data tools and technologies decided upon. In the future, I am sure files will still play a major role in some interoperability workflows, but with APIs and an industry interest in open data access, files may eventually go the way of the dodo.

Conclusions

Forma in the long-term is bold, brave and, in its current incarnation, the down payment for everything that will follow afterwards for Autodesk. However, it has been a long time coming. In 2016, the same year that Quantum was announced at Autodesk University, Autodesk also unveiled Forge, a cloud-based API which would be used to break down existing products into discrete services which could be run on a cloud-based infrastructure.

Forge was designed to help Autodesk put its own applications in the cloud, as well as to help its army of third-party developers build an ecosystem. Forge was recently rebranded Autodesk Platform Services (APS). I actually don’t know of any other software generation change where so much time and effort was put into ensuring the development tools and APIs were given priority. The reason for this is possibly that, in the past, these tools were provided by Microsoft Foundation Classes, while for the cloud Autodesk is having to engineer everything from the ground up.

While this article may appear a bit weird, as one might expect a review of the new conceptual design tools, for me the most important thing about Forma is the way that it’s going to change the way we work, the products we use, where data is stored, how data is stored, how data is shared and accessed, as well as new capabilities, such as AI and machine learning. Looking at Forma from the user-facing functional level that has been exposed so far, does not do it justice. This is the start of a long journey.

Autodesk Forma is available through the Architecture, Engineering & Construction (AEC) Collection. A standalone subscription is $180 monthly, $1,445 annually, or $4,335 for three years. Autodesk also offers free 30-day trials and free education licences. While Forma is available globally, Autodesk will initially focus sales, marketing and product efforts in the US and European markets where Autodesk sees Forma’s automation and analytical capabilities most aligned with local customer needs.

From Autodesk Spacemaker to Autodesk Forma

Over the last four years there have been many software developers aiming to better meet the needs of conceptual design, site planning and early feasibility studies in the AEC process. These include TestFit, Digital Blue Foam, Modelur, Giraffe and Spacemaker to name but a few. The main take up of these tools was in the property developer community, for examining returns on prospective designs.

In November 2020, just before Autodesk University, Autodesk paid $240 million for Norwegian firm Spacemaker and announced it as Autodesk’s new cloud-based tool for architectural designers. Autodesk had been impressed with both the product and the development team and rumours started coming out that the team would be the one to develop the cloud-based destination for the company’s AEC future direction.

This May, Autodesk launched Forma, which is essentially a more architecturally biased variant of the original application and, at the same time, announced it was retiring the Spacemaker brand name. This created some confusion that Forma was just the new name for Spacemaker. However, Spacemaker’s capabilities are just the first instalment of Autodesk’s cloud-based Forma platform, the conceptual capabilities, which will be followed by Revit.

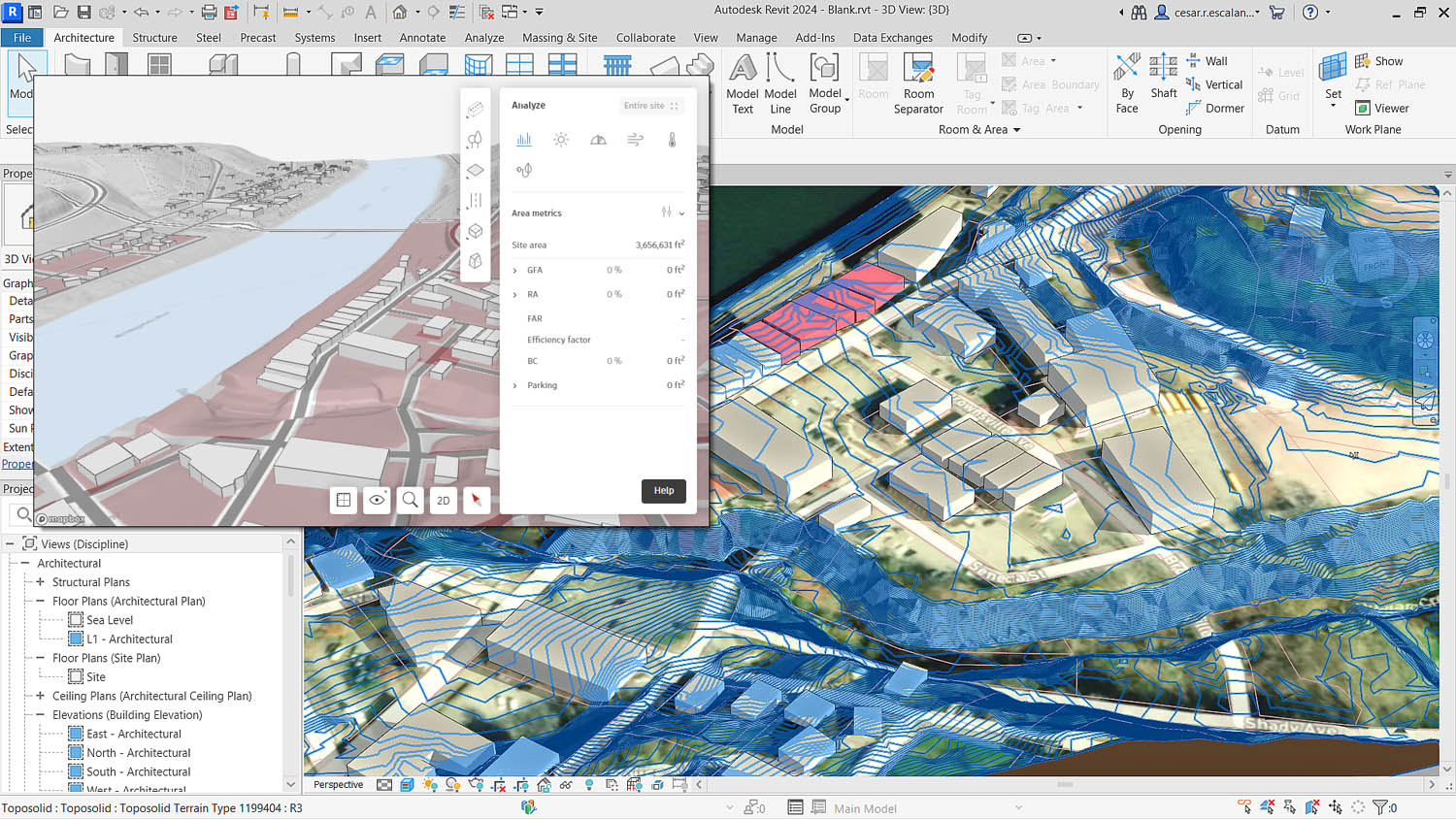

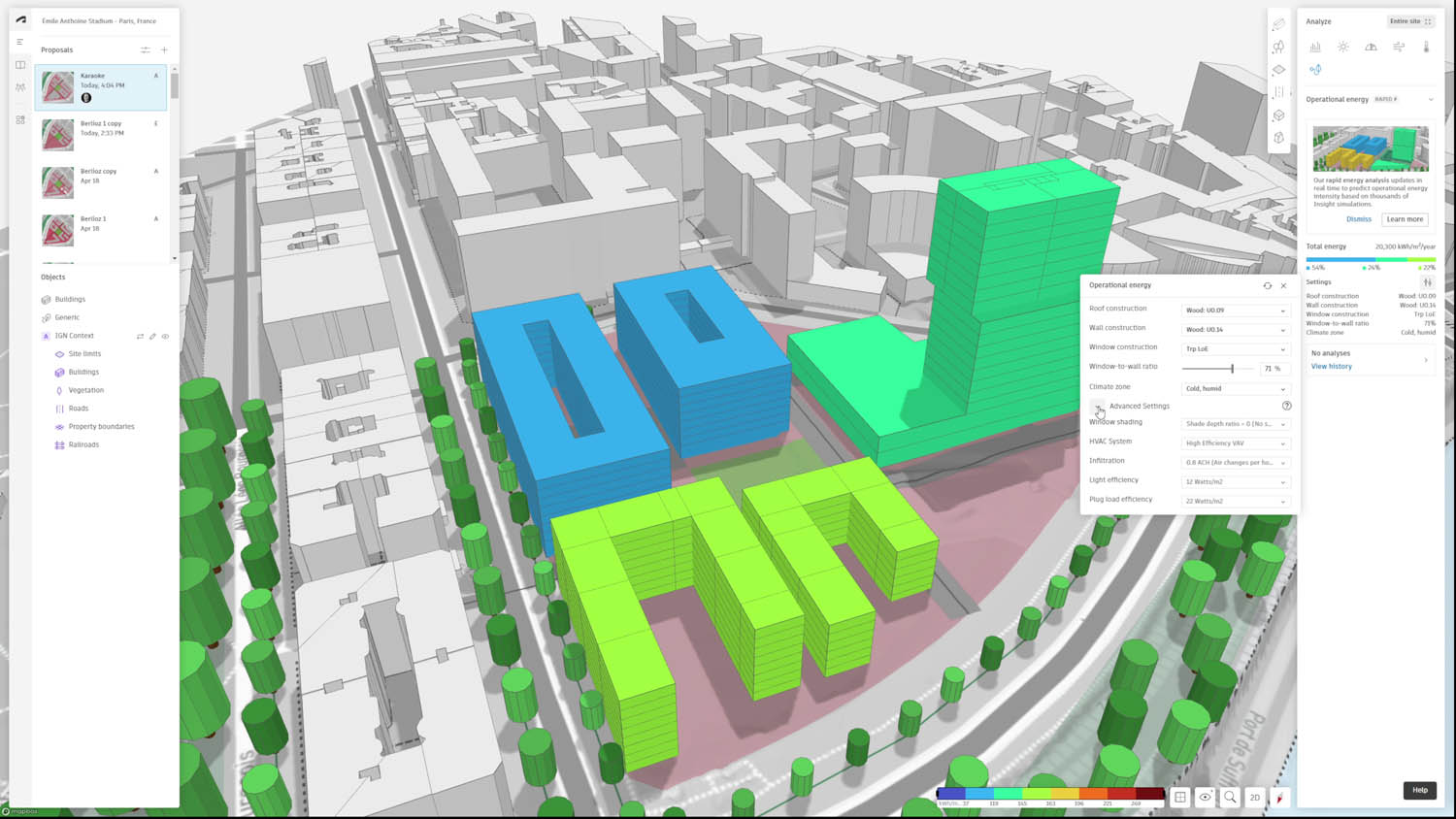

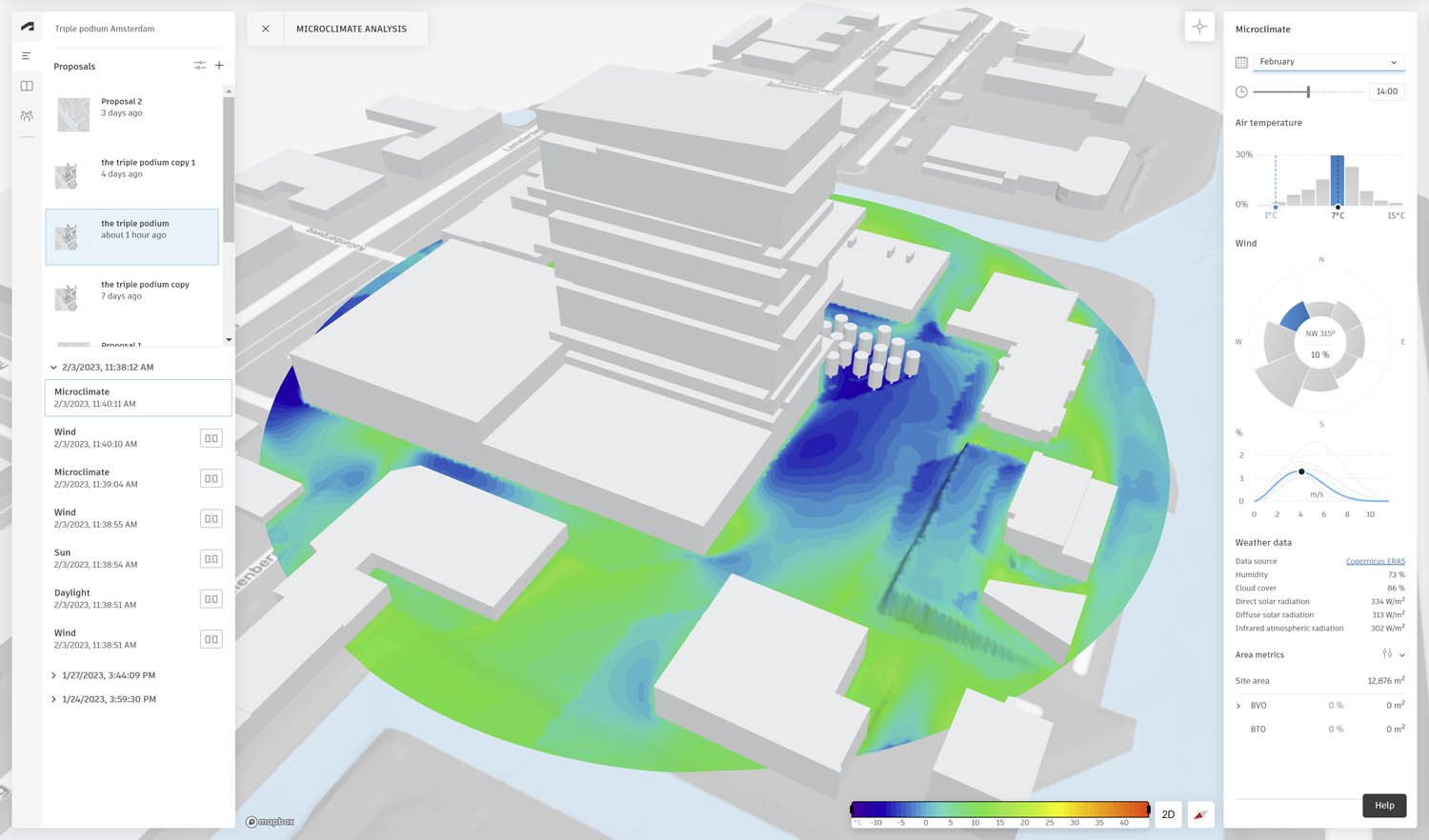

Forma is a concept modeller which resides in the cloud but has tight desktop Revit integration. Designs can be modelled and analysed in Forma and sent to Revit for detailing. At any stage models can be sent back to Forma for further analysis. This two-way connection lends itself to proper iterative design.

At the time of acquisition, Spacemaker’s geometry capabilities were rather limited. The Forma 3D conceptual design tool has been enhanced with the merging of FormIt’s geometry engine (so it now supports arcs, circles and splines), together with modelling based on more specific inputs, like number of storeys etc. It’s also possible to directly model and sculpt shapes. For those concerned about FormIt, the product will remain in development and available in the ‘Collection’.

One of the big advantages hyped by Autodesk is Forma’s capability to enable modelling in the context of a city, bringing in open city data to place the design in the current skyline. Some countries are served better than others with this data. Friends in Australia and Germany have not been impressed with the current amount of data available, but I am sure this is being worked on.

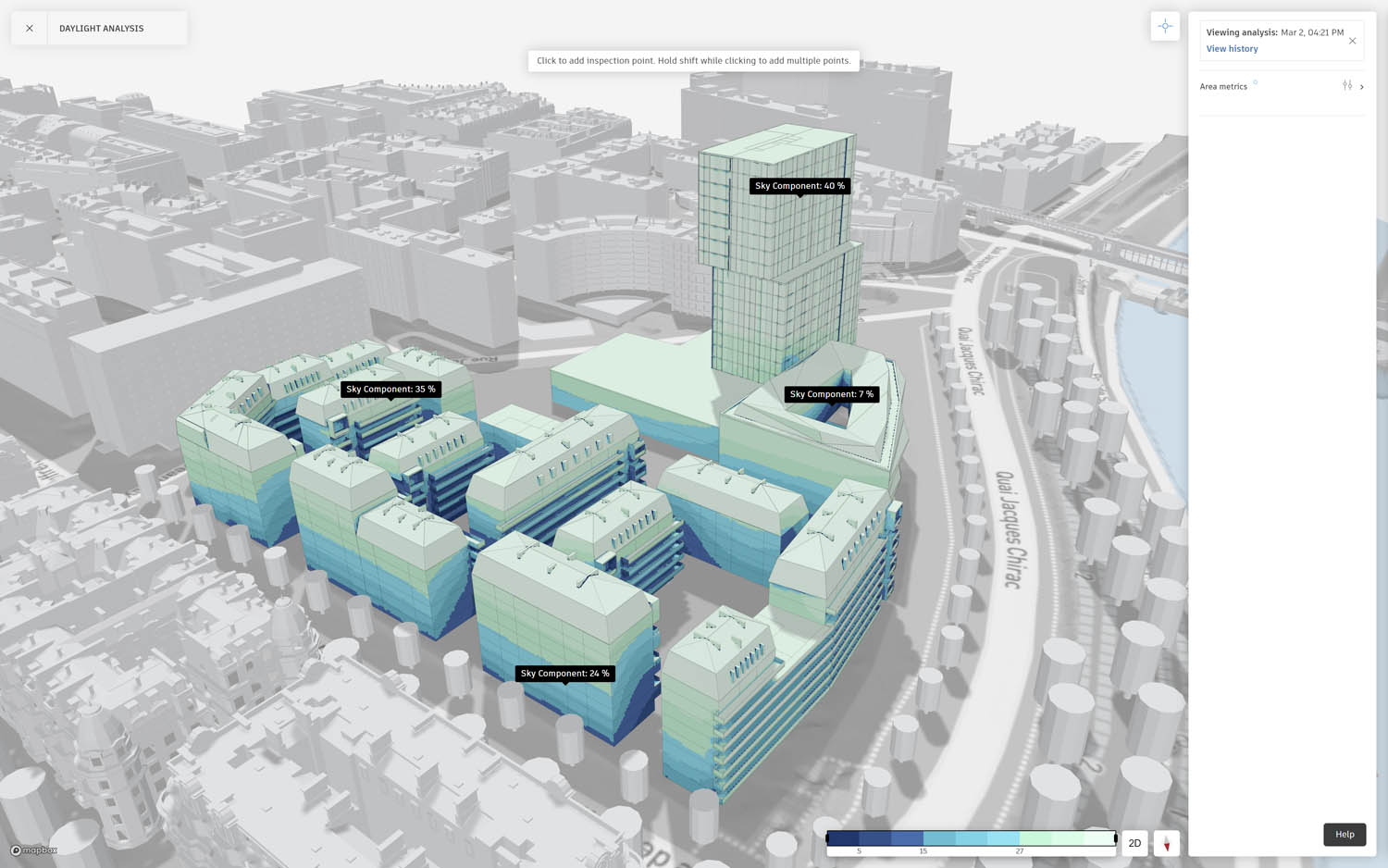

Looking beyond the modelling capabilities, Forma supports real-time environmental analyses across key density and environmental qualities, such as wind, daylight, sunlight and microclimate, giving results which don’t require much deep technical expertise. It can also be used for site analysis and zoning compliance checking.

At time of launch, Autodesk demonstrated plug-ins from its third-party development community, with one from TestFit demonstrating a subset of its car parking design capability, and the other from Shapedriver for Rhino.

Talking to the developers of these products, they were actively invited to port some capabilities to Forma. Because of the competitive nature of these products, this is a significant move. Rhino is the most common tool for AEC conceptual design and TestFit was actually banned from taking a booth at Autodesk University 2022 (we suspected because TestFit was deemed to be a threat to Spacemaker). In the spirit of openness, which Autodesk is choosing to promote, it’s good to see that wrong righted. By targeting Rhino support, Autodesk is clearly aware of how important pure geometry is to a significant band of mature customers.