Last year Autodesk demonstrated a VR capability that could turn Revit models into immersive experiences grounded in accurate BIM data. That demonstration has now become a reality, as Martyn Day explains.

Now that the hype around 3D printing has subsided, the construction industry has another technology to trumpet: virtual reality (VR).

VR in itself is not exactly new. I can remember staggering around an Autodesk office in Guildford in the late 1980s, wearing a heavy helmet, with electronics strapped to my arm and thick cables running to a huge server that occupied half a room. The display was very low resolution and the models were very primitive. Autodesk cancelled the project shortly afterwards and never seemed to revisit it, until recently.

A lot has changed since then. The AEC industry has embraced 3D modelling, computers are much faster and graphics far more powerful. Everything is smaller, and games technology has driven down the entry cost of interactive VR headsets.

While today’s goggles are still expensive for the home user, they are relatively affordable for professional use. Until now, many developers have used generic games engines such as Unreal and Unity to create immersive environments, but in doing so have stripped out all the intelligence from the model. The AEC industry is now racing to adapt this games VR technology to carry both geometry and metadata, making it more useful for immersive BIM experiences in all phases of design and client interaction.

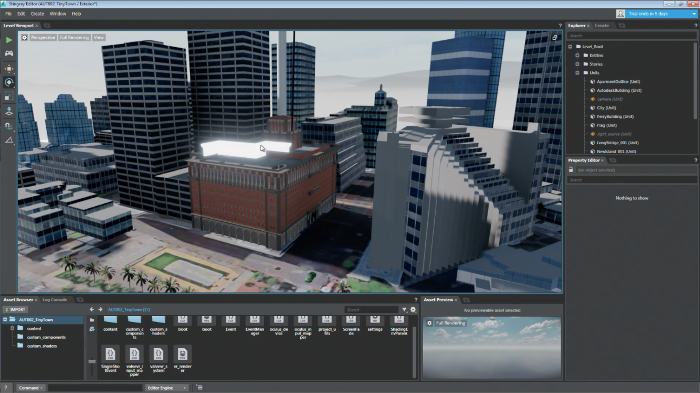

At Autodesk University 2015, Autodesk created a space to demonstrate its new Stingray physically-based rendering technology, which came from the company’s 2014 acquisition of the Swedish-developed Bitsquid games engine. Delegates could try out the immersive BIM-VR experience by wearing the HTC Vive, prior to the headset’s official release.

While there wasn’t much room to walk within the 3D spaces, the high-resolution experience was really impressive. There was no lag as you looked around. The interior and exterior models, which originated in Revit, were exquisitely textured and it was possible to interrogate building components to see dimensions, metadata and sections. Stingray was obviously a powerful engine for VR and we were told that it would be coming soon.

At the same time, Autodesk was working on Project Expo, a behind-the-scenes Labs project to create an architecturally focused implementation of Stingray. It used the cloud to create a fully immersive 3D experience directly from a Revit model.

Introducing Live Design

Autodesk’s AEC-focused real-time design visualisation developments have now evolved into Live Design, which offers a number of workflows.

The expert solution is based on three existing Autodesk technologies, namely Revit, 3ds Max, and Stingray.

The process starts in Revit, where core geometry is created with all the rich layers of data that you would expect in a BIM model. This is imported into 3ds Max for additional modelling or animation, and from there it is brought into Stingray via a live link.

Once in Stingray, everything is rendered in real time as you edit, with ‘what you see is what you get’ results. Stingray offers many powerful environmental controls, and modelling and editing look incredibly fast. While this workflow does require knowledge of multiple applications, it also allows a wide range of choices throughout the authoring process. When you are ready, Stingray will create a self-extracting executable, which works on Windows or iOS mobile devices.

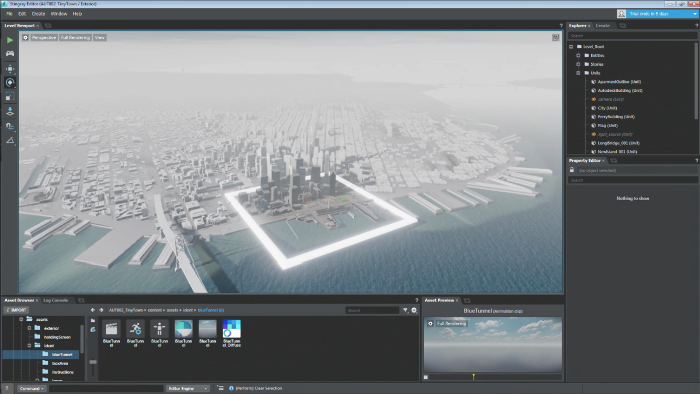

For those who are not experts in 3ds Max but want to utilise the power of Live Design, Autodesk is building on the work it has done with Project Expo to use the power of the cloud to allow one-button creation from Revit. This prepares and processes the Revit BIM model using cloud-hosted versions of 3ds Max and Stingray with output delivered for mobile, desktop or VR usage.

At the moment, Autodesk is still developing this capability. The service offers a basic set of features, which will be expanded in future, but already includes terrain maps, geographic location, time of day/year control for sunlight studies, materials conversion, all the useful BIM data, Revit cameras, and floors/gravity. It’s also possible to add people, cars and traffic simulations. According to Autodesk executives, creating a Stingray environment in the cloud typically takes six minutes.

Inside the interactive Stingray environment, it’s possible to fly around the model like a ghost, or to enable gravity and walk through buildings in different lighting conditions. Because the system retains its BIM heritage, you can interrogate components and building services. This is immensely useful and simple to use. In some respects, Stingray combines elements of existing products such as Navisworks and BIM 360 Field, but it is fast and offers really high-quality graphics. I suspect that Live Design will be used by customers right from the concept stage all the way through to sales and marketing.

Autodesk is keeping a keen eye on hardware under development and is currently focusing on Oculus Rift, HTC Vive and Google’s Tango tablet. Having used the Stingray system with HTC Vive, I have to say that the real-time render has a great feel to it, and I think Revit-based architects will love the results. As with all graphics-based real-time environments, processing power is key: as Autodesk advises, the better the GPU you use, the better the experience you’ll get. In development, the team is targeting the Microsoft Surface tablet as its typical base system, and anything more powerful will naturally give you more frames per second. For VR, and to feed the high refresh rate of the HTC Vive, you will need a PC or workstation with a very powerful GPU.
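To put the refresh-rate point in numbers: the consumer HTC Vive and Oculus Rift both refresh at 90 Hz, which leaves roughly 11 ms to render each frame. The minimal sketch below is a generic illustration, not an Autodesk tool: it converts a refresh rate into a per-frame budget and reports what fraction of a set of measured frame times miss it. The function names and sample timings are hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame, in milliseconds, at a given refresh rate."""
    return 1000.0 / refresh_hz


def missed_frame_fraction(frame_times_ms: list[float], refresh_hz: float) -> float:
    """Fraction of frames whose render time exceeded the per-frame budget."""
    if not frame_times_ms:
        return 0.0
    budget = frame_budget_ms(refresh_hz)
    missed = sum(1 for t in frame_times_ms if t > budget)
    return missed / len(frame_times_ms)


if __name__ == "__main__":
    # Hypothetical frame timings (ms) captured while walking through a model
    samples = [9.8, 10.5, 12.4, 11.0, 13.9, 10.1]
    print(f"Budget at 90 Hz: {frame_budget_ms(90):.1f} ms")
    print(f"Frames over budget: {missed_frame_fraction(samples, 90):.0%}")
```

Frames that miss the budget show up as judder in the headset, which is why VR demands far more GPU headroom than viewing the same model on a tablet screen.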

Conclusion

We live in exciting times. Visualisation technology has moved from being the domain of experts to something that every BIM user can understand and benefit from. With the promise of VR and the power of the cloud, Autodesk is looking to blur the line between rendering a single image or animation and delivering dynamic, on-demand, real-time experiences on desktops, mobiles and headsets. Because the service part of the workflow is performed in the cloud, it will probably be charged on a cost-per-cloud-unit basis, although we don’t know the price yet. For a professional desktop set-up there is no cloud cost, since Revit and 3ds Max are part of Autodesk’s Building Design Suite. The question is whether Autodesk will bundle Stingray into the suite (it currently costs £185 annually). I’m sure we will know by this year’s subscription release in September/October.

For now, Live Design is not ideal for those who don’t want to use three separate packages to get a real-time result. Designers with expertise in 3ds Max will have no trouble understanding Stingray, although advanced users will need to learn scripting. With its one-button ‘BIM to real-time environment’ offering, however, Autodesk will see an explosion of usage, as this is exactly what a lot of customers have been waiting for.

Looking elsewhere, we would recommend you check out Graphisoft’s BIMx Pro, which has been a key differentiator for the company. In addition, IrisVR (see box out below) is a very easy-to-use, push-button solution for bringing Revit models into VR, while Revizto works with most BIM formats and also provides collaboration tools.

IrisVR — from Revit to VR at the push of a button

IrisVR Prospect is a VR tool built specifically for architects and AEC professionals. The software’s ‘one click’ workflow is designed to make it incredibly easy to bring 3D CAD data into a VR environment.

The key principle of IrisVR Prospect is that whatever you see on screen – in Revit or SketchUp, for example – is exactly what you see in VR. It not only brings in the model geometry but also materials and lighting, so nothing needs to be done post-import.

The process takes only a few minutes, making it easy for an architect to switch between CAD and VR while designing.

The software is also well suited to design reviews and client presentations. Such is the ease of import that design iterations based on feedback could feasibly be viewed in VR during the same meeting.

The rendering quality inside IrisVR Prospect is basic, as can be seen from the accompanying image, but it still gives the wearer of the VR headset an excellent sense of presence and scale.

The software works with a variety of headsets. The Oculus Rift is best suited for use at a designer’s desk, while the HTC Vive is great for conference rooms, giving a theoretical 5m x 5m space for users to walk around.

To travel further distances, you simply teleport by pointing a virtual cursor at different places within the model. A custom collision system means there are no rigid rules: with ghost mode enabled, you can even bust through a wall. It’s very intuitive.

The software currently has a handful of features that can be accessed via an on-screen GUI. Dynamic lighting and shadows can be experienced in real time at any time of day or year, based on the geolocation of your CAD file.
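Time-of-day and time-of-year lighting from a geolocation comes down to working out where the sun sits in the sky. How IrisVR computes this is not described, so the sketch below is a generic, simplified approximation of our own: it uses Cooper’s formula for solar declination and the hour angle from local solar time, and ignores the equation of time, time zones and atmospheric refraction. The function name is hypothetical.

```python
import math


def solar_elevation_deg(latitude_deg: float, day_of_year: int, solar_hour: float) -> float:
    """Approximate solar elevation angle in degrees (simplified model).

    solar_hour is local solar time (12.0 = solar noon), not clock time.
    """
    # Solar declination via Cooper's approximation: swings between about -23.44 and +23.44 degrees
    declination = math.radians(
        23.44 * math.sin(math.radians(360.0 / 365.0 * (284 + day_of_year)))
    )
    # Hour angle: the sun moves 15 degrees per hour away from solar noon
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))
    lat = math.radians(latitude_deg)
    sin_elev = (math.sin(lat) * math.sin(declination)
                + math.cos(lat) * math.cos(declination) * math.cos(hour_angle))
    # Clamp to guard against floating-point drift outside [-1, 1]
    sin_elev = max(-1.0, min(1.0, sin_elev))
    return math.degrees(math.asin(sin_elev))


if __name__ == "__main__":
    # London (about 51.5 degrees N) at solar noon on the June solstice (day 172)
    print(f"{solar_elevation_deg(51.5, 172, 12.0):.1f} degrees")
```

The London example comes out at about 62 degrees; a negative result means the sun is below the horizon. A real-time engine would use an angle like this, plus the sun’s azimuth, to orient its directional light for a sunlight study.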

Callouts and annotations can be used to mark up the model. Layers can be toggled on and off to expose or hide things like glazing, services and furniture.

Working with CAD data

IrisVR Prospect currently works with Revit, SketchUp and OBJ files.

For SketchUp, simply drag and drop a SketchUp file into the IrisVR dropzone.

For Revit, there is a plug-in. Inside Revit, create a 3D view of the portion of the model you would like to view in VR, then press a button to start the geometry conversion. The ‘export’ supports Autodesk materials, layers and linked Revit files. Metadata can also be brought in; there are currently no tools with which to interrogate this data, but they should appear in future releases.

Support for ArchiCAD and a plug-in for Grasshopper (and Rhino, by extension) are also in development.

The conversion process is hidden from the end user, but there is a lot going on behind the scenes, including mesh and texture compression.

To help ensure everything translates properly into VR, the developers have also worked on a lot of ‘edge’ cases, such as what happens if someone uploads a file without a floor.

At the moment there is a practical limit to model size, dictated by the need to deliver the high frame rates required for a nausea-free VR experience.

However, a lot of work is being done to improve performance with large models, and the company’s ultimate vision is that users won’t have to worry about file size at all. IrisVR is currently in beta and is available for download from irisvr.com.

By Greg Corke

If you enjoyed this article, subscribe to AEC Magazine for FREE