By harnessing the power of the cloud together with engineering-accurate scalable meshes, Bentley is looking to make model complexity a non-issue for large-scale design visualisation on infrastructure projects, reports Greg Corke

Visualisation used to be the preserve of the design viz specialist, but this has changed dramatically over the past few years. Most BIM applications now have capable renderers built in and advanced tools like V-Ray are being tuned to the workflows of architects and engineers. While static rendered images continue to play an important role in design exploration, it’s the real-time rendering tools – some of which also support Virtual Reality (VR) – that are currently generating the biggest buzz.

Two years ago, Bentley Systems got its own real-time rendering technology, LumenRT, with the acquisition of E-on Software. LumenRT has always been considered easy to use, but now Bentley is making a big effort to expand its reach by making a custom version available free of charge to those who use its CONNECT Edition design modelling applications.

LumenRT Designer contains most of the functionality of full-blown LumenRT, but has a slightly smaller environmental content library of vegetation, vehicles and characters. Resolution is limited to FHD (1,920 x 1,080) and you’ll also need the full version if you want to take your designs into VR.

Handling large projects

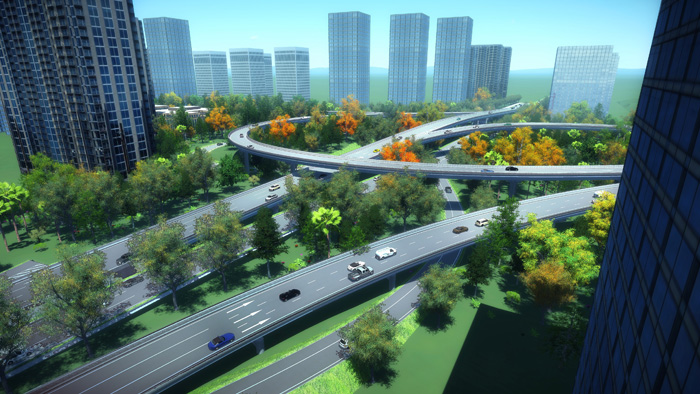

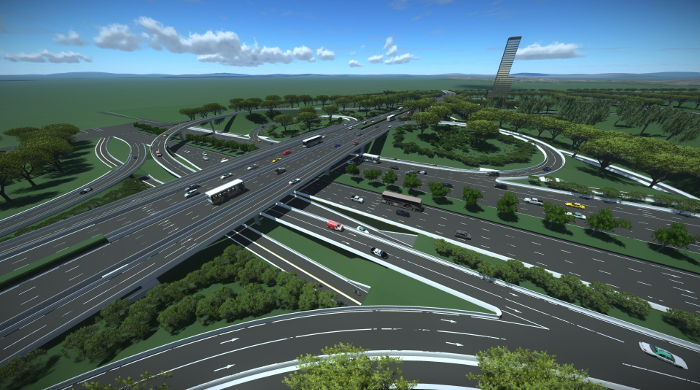

LumenRT has always placed a big emphasis on the visualisation of infrastructure projects but, since it came under Bentley’s control, this focus has grown. One of the biggest challenges that engineering firms face is how to handle the complexity of large projects, which often include huge roads, long corridors and vast expanses of terrain.

The datasets can be enormous, containing millions of polygons. This puts a massive load on the GPU and slows down 3D performance. Trying to navigate a complex scene at only a few frames per second significantly impacts the experience and limits how useful it is. And you can certainly forget about doing anything in VR.

The traditional way to solve this problem is to decimate the mesh and reduce the number of polygons in the scene. This is fine for many visualisation workflows – as long as you don’t need to look too closely – but once the data has been approximated and averaged, it’s pretty much useless for any kind of engineering calculation.
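As a rough illustration of why a reduced surface is risky for quantities, the sketch below compares a volume taken from a small gridded terrain with the same volume taken after crude subsampling. The grid values, the column-based volume estimate and the helper names `volume_above_datum` and `subsample` are all assumptions made for this example. Real decimation algorithms are more sophisticated than dropping every other sample, but the effect is similar in kind: local features that matter to an engineer can disappear.

```python
def volume_above_datum(heights, cell_size_m, datum_m=0.0):
    """Approximate the volume between a gridded surface and a flat datum.

    Each height sample is treated as a column with a footprint of
    cell_size_m x cell_size_m (a deliberately simple estimate).
    """
    cell_area = cell_size_m ** 2
    return sum((h - datum_m) * cell_area for row in heights for h in row)


def subsample(heights, step):
    """Crude stand-in for decimation: keep every `step`-th sample in each direction."""
    return [row[::step] for row in heights[::step]]


# Hypothetical 4 x 4 terrain at 1 m spacing, with two local high points.
full = [
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 3.0, 1.0, 1.0],
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 1.0, 1.0, 5.0],
]

full_volume = volume_above_datum(full, cell_size_m=1.0)
# After subsampling, each remaining sample has to stand in for a 2 m x 2 m cell.
coarse_volume = volume_above_datum(subsample(full, 2), cell_size_m=2.0)

print(f"full: {full_volume} m3, subsampled: {coarse_volume} m3")
```

In this made-up case both high points fall between the retained samples, so the subsampled surface under-reports the volume.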

Thanks to Bentley’s new scalable mesh technology, LumenRT can now visualise large scenes using actual engineering data, without having to go down the one-way optimisation route. The technology is based on Bentley’s 3SM format and works with point clouds, Lidar data and even standard DTM meshes.

In LumenRT, when the camera position changes, data can be automatically streamed from Bentley’s ProjectWise ContextShare service and cached on the client device, which could be a workstation, a mobile phone or a VR headset. Data can arrive in as little as 0.3 seconds, says Bentley’s David Burdick, so there is no discernible lag.

Scalable mesh technology supports infinite levels of detail, so when you zoom in, you see more detail and when you zoom out, you see less.
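The general idea of distance-based level of detail can be sketched in a few lines. This is a generic illustration, not Bentley’s 3SM algorithm, whose internals are not described here. The function name `select_lod`, the 1cm finest resolution, the per-pixel viewing angle and the rule that each coarser level doubles the cell size are all assumptions chosen for the example.

```python
import math


def select_lod(distance_m, finest_resolution_m=0.01,
               pixel_angle_rad=0.001, max_level=20):
    """Pick a level of detail so one mesh cell covers roughly one screen pixel.

    Level 0 is the finest data; each higher level is assumed to double
    the cell size. All default values are illustrative assumptions.
    """
    # Ground size covered by one pixel at this distance (small-angle approximation),
    # never finer than the finest data actually stored.
    target_resolution = max(distance_m * pixel_angle_rad, finest_resolution_m)
    # Number of times the finest cell size can be doubled before exceeding the target.
    level = math.floor(math.log2(target_resolution / finest_resolution_m))
    return min(level, max_level)


# Zooming out requests progressively coarser levels.
for d in (1.0, 100.0, 1000.0):
    print(d, select_lod(d))
```

A streaming client built along these lines would request only the tiles for the selected level around the camera, which is why the amount of data on the device can stay small even when the full dataset is very large.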

Resolution can go down to less than 1cm. From a viz perspective, this may or may not be relevant, but as Bentley’s David Huie explains, it is really valuable, as the same data can be used in the engineering process. “You can actually base your volumetric calculations off the mesh,” he says. “It’s so versatile that it’s usable for the OpenRoads guys, or for someone who wants to do a site plan or in LumenRT for visualisation.”

Bentley’s David Burdick describes scalable mesh as a “game-changing technology” that allows firms to work with models of any size.

“We are working with customers that are doing small buildings all the way up to 100 km square corridors, so we don’t want any limitations. There was a theoretical limitation because of the size of the graphics hardware – and with big terrains in there, there just wasn’t enough GPU power to handle it. Now, with the streamable format, we can do any size.”

Burdick gives the example of a recent 20km x 30km corridor project. “If you tried to stick this on a laptop, it would die. [With scalable mesh technology] you can stick this on the web and have it stream and you can run it on a phone and bring in this whole mesh.”

Virtual Reality (VR)

This time last year, Bentley was in the process of adding a VR capability to LumenRT. With update 3, released this summer, the software now supports the Oculus Rift and HTC Vive.

Bentley calls this a ‘Level 1 VR’ implementation, which is pretty much a visual experience. Users are able to walk around, teleport, wave the wand, change the time of day and click on objects to access high-level attribute information.

With December’s forthcoming update 4, the ‘Level 2 VR’ implementation, users will be able to access a much richer level of BIM information from inside VR. This is not only with data from Bentley’s modelling applications, but also from Revit, ArchiCAD, SketchUp and City Engine, for which LumenRT plug-ins exist.

‘Level 2 VR’ will also introduce the idea of ‘scenarios’, where users are able to flip things on and off inside VR, as David Burdick explains. “I’ll be able to say, just show me all the piping on this and show everything else with an invisible phantom around it.”

From VR to MR

Bentley is also exploring the potential for using LumenRT with Augmented or Mixed Reality – visualising your design in the context of a site. Burdick believes that this is where development of LumenRT will ultimately lead.

Initial research was done with the Microsoft HoloLens, but the LumenRT team found that the headset had limitations for design viz, as Burdick explains. “With the first device [the HoloLens], the computer was inside the headset, so the problem was once you started getting over 100,000 polygons, which is about the maximum you can hold, the thing really started to heat up.

“We really needed to go beyond that – because our models are 4 million to 10 million polygons, roughly.”

Now Bentley has access to Microsoft’s new Mixed Reality device, which connects to a PC via Bluetooth or hardwire. As the processing is done on the PC, it means it can handle much bigger models.

In addition to working closely with Microsoft’s HoloLens team, Bentley is also exploring a tablet version, where the LumenRT scene is overlaid with the actual reality behind it, captured with the tablet’s camera. However, Bentley still needs to solve some challenges around geo-positioning, as the tolerances are still quite high.

Moving viz to the cloud

LumenRT currently offers a push-button workflow. Press a button in Bentley’s modelling applications, Revit, ArchiCAD, SketchUp or City Engine, and a base LumenRT model is created automatically.

Geometry, layers, levels, lights, materials and BIM information are brought across and baked into a so-called LiveCube. David Burdick says that a typical Revit model, which is heavily instanced, can take anywhere from 3 mins to 10 mins to bake. All of the processing is done locally on the desktop.

Once inside LumenRT, the design asset can be brought to life by adding vehicles, moving people, windswept plants, rolling clouds, rippling water and much more. Importantly, the software is able to handle change, which means updated designs can be re-imported and the user does not have to redo the entire scene.

In the future, Bentley will move away from a desktop-centric workflow and embrace the cloud.

“Right now, LumenRT’s native format uses a baked, DirectX format that’s optimised for viz,” explains David Burdick. “In the future, we’re not going to do that anymore. We’re just going to work natively off an iModel kind of format and enrich the iModel to give it what it needs for LumenRT.”

Bentley is also exploring how GPU power can be harnessed from the cloud. One of the challenges of expanding the reach of LumenRT to architects and engineers is that typical CAD workstations are not geared up for real-time visualisation, which needs a much more powerful GPU.

By doing the graphics processing in the cloud, rather than on the client device, users can get around the problem. It also means visualisation can be done on a phone or a tablet, and not just on a powerful workstation. Bentley has trialled this with Microsoft and it works well on 2D screens, says Burdick.

VR is a different animal, he acknowledges, as it basically requires doubling the frame rate. “We are going to get there eventually, I have no doubt,” he says, adding that as new-generation GPUs reduce rendering latency, the latency caused by the distance from the cloud server becomes less of an issue.

Conclusion

With LumenRT, Bentley is looking to deliver design visualisation into the hands of engineers and architects. This is nothing new, of course. Most design viz software developers have been on this trajectory for some years. The ace up Bentley’s sleeve, however, could be the ability to use actual engineering data within the visualisation, rather than approximated geometry.

As the AEC industry embarks on a journey of using VR for collaborative design review, the potential to carry out volumetric calculations on the engineering mesh and extract rich information from the 3D model could prove to be a powerful future capability.

In addition, by having a direct connection to an iModel in the cloud, any annotations or calculations done in VR could be stored as part of the project. In contrast, most file-based design viz applications are largely disconnected from the engineering process.
