Arup’s designers are benefitting from combining 3D modelling with gaming technology to generate crowd-sourced feedback on proposed and existing major transport hubs to improve passenger flow and signage. Sponsored Content

When it comes to major transport projects, global consulting engineer Arup is at the forefront of many sizable international infrastructure projects. Offering both creative and technical consultancy, the company aims to deliver more efficient, commercially sustainable solutions through its industry expertise, together with in-house R&D teams, which develop advanced software-based analysis solutions for customer projects.

With increasing city population densities, designers of public environments, such as transit stations and stadia, have to consider flow, signage and space usage, especially when faced with potential overcrowding at peak times, fire safety, evacuation and, in extreme cases, the threat of terrorism. To identify and minimise bottlenecks early in the design phase, Arup has developed a number of tools and has embarked on R&D projects which certainly fall into the category of ‘Reality Computing’.

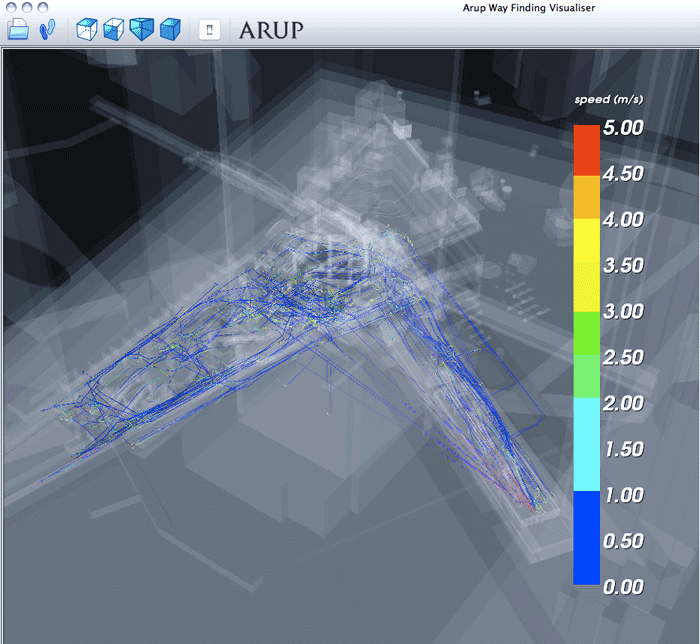

MassMotion

At the conceptual phase, Arup offers MassMotion and MassMotion Flow, for crowd simulation and analysis. The software is capable of generating tens of thousands of ‘agents’ which ‘spawn’ inside 3D models of proposed designs. Each agent makes its own way to a defined exit point.
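The idea of agents spawning in a model and each finding its own way to an exit can be illustrated with a toy example. The sketch below is not MassMotion's algorithm, which the article does not describe; it assumes a hypothetical floor plan drawn as a small grid and uses a plain breadth-first search to give each agent a shortest walkable route to the exit.

```python
from collections import deque

def shortest_path(grid, start, exit_cell):
    """Breadth-first search over a grid floor plan; '#' cells are walls."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}          # cell -> previous cell on the route
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == exit_cell:
            break
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    if exit_cell not in came_from:
        return None                    # exit is unreachable from this spawn point
    path, cell = [], exit_cell
    while cell is not None:            # walk back from the exit to the start
        path.append(cell)
        cell = came_from[cell]
    return path[::-1]

# Hypothetical floor plan: S = a spawn point, E = the exit, # = wall.
FLOOR = [
    "S..#.",
    ".#.#.",
    ".#...",
    "...#E",
]
EXIT = (3, 4)
SPAWN_POINTS = [(0, 0), (3, 0)]        # illustrative agent start cells

routes = {spawn: shortest_path(FLOOR, spawn, EXIT) for spawn in SPAWN_POINTS}
for spawn, route in routes.items():
    print(spawn, "->", len(route) - 1, "steps")
```

A real crowd simulator also has to model agents slowing down and blocking one another, which is where bottlenecks come from; this sketch only covers the route-finding part.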

The software also enables the application of ‘real-world’ data, such as passenger numbers entering a station and train arrival/departure times. It’s possible to see platform build-up of agents between trains and identify maximum capacities and bottlenecks of ingress and egress. The software was also used to simulate the crowds at the annual Hajj pilgrimage in Mecca, where up to 130,000 people per hour circle the central Kaaba. The MassMotion simulation looks amazingly close to actual footage.
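The platform build-up between trains mentioned above can be pictured with a very simple queue model. This is a sketch under stated assumptions, not how MassMotion works: passengers arrive at a constant rate, a train departs at a fixed headway, and each train can take a fixed number of people. All figures are illustrative, not taken from any real station.

```python
def platform_buildup(arrivals_per_min, headway_min, train_capacity, minutes):
    """Return (peak crowd, crowd left on the platform after each minute)."""
    waiting = 0
    peak = 0
    history = []
    for minute in range(1, minutes + 1):
        waiting += arrivals_per_min            # passengers reaching the platform
        peak = max(peak, waiting)              # crowd just before any departure
        if minute % headway_min == 0:          # a train departs this minute
            waiting -= min(waiting, train_capacity)
        history.append(waiting)
    return peak, history

# Illustrative numbers only: 100 arrivals a minute, a train every
# two minutes, and room for 150 boarding passengers per train.
peak, history = platform_buildup(100, 2, 150, 6)
print(peak, history)
```

With these made-up inputs, demand outstrips train capacity, so the crowd left behind grows after every departure. That is the kind of capacity limit a full simulation is meant to expose.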

Realtime Visual Simulation

While MassMotion is a mathematical simulation, in which the agents find pathways to pre-defined exit points, it doesn’t take into account real-world inputs which actively inform passengers as to where they should be heading.

In some respects, it assumes that to get from A to B, the agent knows exactly how to get there. Anyone who has tried to navigate Schiphol Airport or Shinjuku railway station in Tokyo will realise that getting to B is not necessarily a guaranteed outcome without outside intervention! To really understand the impact of design options, you also need human interaction.

Alvise Simondetti is a member of Arup’s Foresight Research & Innovation team, and is responsible for developing interactive computer graphics which represent design solutions.

To address the issue of getting the human experience into the design cycle, his team has developed a Realtime Visual Simulation tool. Simondetti explained, “Realtime Visual Simulation allows users to explore designs before they are built, giving an accurate preview of a project and allowing stakeholders to understand an environment before it becomes a reality. Users navigate through models freely, without the limitation of a pre-set path or video sequence and this behaviour is captured and fed back to the design team.”

Using a games engine, a joystick and a three-monitor set-up to fill peripheral vision, participants navigate proposed concourses. Each monitor displays a normal perspective, equivalent to that of a 50mm camera lens.
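To get a feel for how much of a participant's vision such a set-up covers, the standard lens formula, field of view = 2·atan(sensor width / (2 × focal length)), can be applied. The sketch below assumes the ‘50mm’ figure refers to the 35mm full-frame format (a 36mm-wide frame) and that the three views sit side by side without overlap; neither detail is stated in the article.

```python
import math

def horizontal_fov_deg(focal_length_mm, frame_width_mm=36.0):
    """Horizontal field of view of a rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(frame_width_mm / (2 * focal_length_mm)))

per_monitor = horizontal_fov_deg(50.0)   # assumes a 36mm-wide full frame
combined = 3 * per_monitor               # assumes three views with no overlap

print(f"per monitor: {per_monitor:.1f} degrees")
print(f"three monitors: {combined:.1f} degrees")
```

Under those assumptions, each monitor covers a little under 40 degrees and the three together a little under 120 degrees.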

Models of the environment are detailed and visually rich, and members of the public are tasked with getting from the concourse to different platforms. The 3D model includes any proposed signage and this should guide them to their destination.

The scenes also include human ‘avatars’ to provide a more realistic experience when there may be line of sight issues with signage. Each journey is charted, logging the users’ direction and view while they search for clues as to where they should be heading.
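A journey log of this kind can be pictured as a series of timed samples of position and view direction. The sketch below is a hypothetical illustration, not Arup's implementation: the `Sample` record, the 120-degree field of view and the 30-metre range are all assumptions. It estimates how long a given sign sat inside the participant's view, and it ignores occlusion by walls or avatars.

```python
import math
from dataclasses import dataclass

@dataclass
class Sample:
    t: float            # seconds since the journey started
    x: float            # position in metres
    y: float
    heading_deg: float  # view direction: 0 = +x axis, counter-clockwise

def sign_in_view(sample, sign_xy, fov_deg=120.0, max_range=30.0):
    """True if the sign lies within the view cone and range (no occlusion test)."""
    dx = sign_xy[0] - sample.x
    dy = sign_xy[1] - sample.y
    if math.hypot(dx, dy) > max_range:
        return False
    bearing = math.degrees(math.atan2(dy, dx))
    # Signed angle between heading and bearing, wrapped to [-180, 180).
    offset = (bearing - sample.heading_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0

def seconds_in_view(log, sign_xy):
    """Total time for which the sign was in view, judged at each sample start."""
    total = 0.0
    for prev, cur in zip(log, log[1:]):
        if sign_in_view(prev, sign_xy):
            total += cur.t - prev.t
    return total

# Hypothetical journey: walk along +x, then turn left and walk along +y.
log = [
    Sample(0.0, 0.0, 0.0, 0.0),
    Sample(1.0, 2.0, 0.0, 0.0),
    Sample(2.0, 4.0, 0.0, 90.0),
    Sample(3.0, 4.0, 2.0, 90.0),
]
print(seconds_in_view(log, sign_xy=(10.0, 0.0)))
```

A sign that sits on the route yet scores little or no time in view across many participants would be a natural candidate for closer inspection.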

From this it’s possible to identify signs that are poorly placed, hidden from view, lack clarity or just don’t work as expected. There are three general modes of end-user navigation.

Fly around mode is unbounded by geometry and allows the user to go anywhere and go through walls. First Person mode applies gravity and collision constraints. Views are set at ‘head height’ and walking produces a natural ‘head bounce’ in view.

Third Person mode adds a male or female avatar to the user’s view and enables the understanding of scale.

The system allows designers to try security camera positions and signage of different colours, sizes, translations and symbols in proposed as well as in existing public buildings. These are then tested by the public in real time, the results are collated, and informed improvements are made to the proposed facility layout.

For now the system is desktop-based, but Simondetti commented, “The technology is very suitable to being ported to immersive technologies such as VR goggles.

“We found that the more realistic the experience, the closer to reality it feels, the better for our testers. It’s simple, the more immersive, the better the results. So while many wonder about the actual usefulness of VR goggles, for our application it would improve our results.”

Admiralty Station, Hong Kong

Arup’s Realtime Visual Simulation was recently used by Hong Kong’s MTR Corporation in developing the expansion of Admiralty Station.

Named after the Royal Navy HQ which was once based there, this 60,000 sq ft facility sees one million passengers a day.

It gets heavily congested during rush hour, despite trains leaving every two minutes.

To alleviate the congestion, two new surface train lines are being added, which will double the size of the station from four to eight platforms.

Underground, the station will go from three platforms served by eight escalators to seven underground platforms with 48 escalators.

Obviously this is an extensive engineering project and the client wanted to minimise changes to existing signage and CCTV positions. Arup conducted a wayfinding study using hundreds of participants at each phase of development.

The station was virtually modelled and included dynamic agents (other people), and moving lifts and escalators at simulated real-time speeds.

The results of these Realtime Synthetic Environment sessions identified 235 potential problems across the 970 signs, equivalent to roughly a quarter of all the signage.

The net result was that 145 proposed signs were changed. This meant considerable savings for the client post-construction.

Conclusion

Computer-based analysis and simulation is more often than not seen to be a ‘black box’ operation: enter inputs, process and get an output.

Arup’s Realtime Visual Simulation research proves that human interaction with digital design information can be just as valuable as a purely mathematical analysis. When it comes to public buildings, flow capacity of passengers along any route is only one dynamic to be considered.

It’s clear from the Hong Kong example that, by bringing human analysis into the design process, it’s possible to create a valuable feedback loop. Here, real-world consumers can overturn the assumptions of even the best-intentioned design team, while saving the owner money and helping future passengers get to where they need to be faster.
