With the availability of affordable headsets like the Oculus Rift and HTC Vive, VR is now within reach of AEC firms of all sizes. Greg Corke explores this brave new virtual world.

It’s an all too familiar scenario: an architect enters a building for the first time and the space doesn’t quite match the vision of his or her design. However beautiful a static rendered image may be, traditional design visualisation can only convey so much, even when the scene is rendered at eye level with furniture for scale.

At Gensler, design director and principal Hao Ko knows the feeling. “You still have to make a translation in your mind, in terms of how tall this space is going to feel,” he says. “More often than not, I’ll go to my own projects and I’ll be like, ‘Wow! That’s a lot bigger than I expected.’ You still have those moments.”

This, he says, is where virtual reality, or VR, comes in – and others in the industry are starting to reach the same conclusion.

VR head-mounted displays (HMDs) such as the Oculus Rift and HTC Vive have the power to change the way architects design and communicate buildings before they are built. The wearer is instantly immersed in a truly three-dimensional environment that gives an incredible sense of scale, depth and spatial awareness that simply cannot be matched by traditional renders, animations or physical scale models.

A VR experience with an HMD can fool your brain into thinking what you’re seeing is actually real. The WorldViz ‘Walk the Plank’ demo at Nvidia’s GTC event in April stopped me dead in my tracks. Even though I knew I was standing in an exhibition hall, I literally could not step forward for fear of falling. The sense of presence was overwhelming. It felt like I truly ‘existed’ in the scene and, from then on, the fight of mind over matter was well and truly lost.

This sensation of actually being inside a building also makes VR an incredibly powerful tool for communicating design intent. Clients, in particular, often struggle to understand spatial relationships and scale simply by looking at a 2D plan or 3D model. VR can evoke a visceral response in exactly the same way that physical architecture can.

“We just had a client where we were showing some conceptual renderings and they were having a hard time [understanding the building],” explains Mr Ko. “The second we put goggles on them, it was like, ‘Oh yeah. Build that. That’s great. That’s what I want.’”

VR can play an important role at all stages of the design-to-construction process, from evaluating design options and showcasing proposals, to designing out errors and ironing out construction and serviceability issues before breaking ground on site.

Even at the conceptual phase, VR can be an effective means of exploring the relationships between spaces – the impact of light on a room at different times of the day or year, or views from mezzanine floors. With a physical scale model or BIM model on screen, you still have to imagine what it would be like to exist inside the space. With VR, you actually experience the proportion and scale.

VR software

VR environments for architecture and construction projects have traditionally been created with powerful professional VR development platforms, such as WorldViz Vizard or Virtalis Visionary Render.

But with VR now set to go mainstream, so-called 3D game engines, often used to create first-person shooters, offer a powerful, low-cost (and sometimes free) alternative for architectural VR.

Portsmouth-based VR specialist, TruVision, for example, relies on Unreal Engine to generate its fully immersive 3D environments for architects and clients.

Architectural content is also being generated specifically for the popular game engine. Software developer UE4Arch, for example, sells a wide range of materials and models for Unreal, including beds, tables and chairs.

Autodesk Stingray is a relatively new game engine built on the BitSquid engine, which Autodesk acquired in 2014. It offers a live link to Autodesk 3ds Max, which is great for design viz specialists familiar with the 3D modelling, rendering and animation software. However, Autodesk is also exploring ways to make the technology more accessible to architects and other users of Revit. [Update 16/02/17 – Autodesk has now released a push-button Revit to VR application called Autodesk LIVE. Read our hands-on review].

Crytek’s CryEngine is another popular game engine, as is Unity, which already has a big presence in the AEC sector. Unity is also being used as the foundation for new architecturally focused products. New York-based startup IrisVR, for example, has adapted the game engine for Prospect, a software tool that allows architects to quickly bring SketchUp, Revit and other 3D models into a VR environment. [Update 16/02/17 – Read our hands-on review of IrisVR Prospect].

[Update 16/02/17 – Enscape is a real-time visualisation tool designed to work specifically with Revit. The software can be used on a standard display or in VR. Read our hands-on review].

Other software developers are getting in on the act by building in tools to render out VR panoramas. Unlike the tools above, which generate VR-optimised 3D models for real-time navigation, VR panoramas are essentially wide-angle panoramic images that deliver a full 360-degree snapshot of the world around you. The viewing position is static, which is a big downside, but with a stereo pair of images, the user can still get a good sense of depth and the quality can be excellent.

VR panoramas are best viewed on entry-level ‘smartphone’ headsets like the Samsung Gear VR or Google Cardboard, but will also work fine on the Oculus Rift.

They can be rendered out from a range of applications including Chaos Group V-Ray, Nvidia Iray VR Lite, Autodesk A360 Rendering, IrisVR Scope and Lumion.

In Vectorworks 2017, due for release later this year, users should be able to create a VR panorama very easily, simply by uploading a 3D CAD model to the cloud. The resulting stereo images are then displayed on a smartphone’s web browser.

What is an HMD?

A head-mounted display (HMD) is a pair of goggles that you strap on your head for a fully immersive VR experience. Each eye is shown a slightly different view, fooling your brain into thinking you are inside a virtual 3D world. Wearers experience an amazing sense of presence, scale and depth.
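
The ‘slightly different view’ comes from rendering the scene from two camera positions, offset sideways from the centre of the head by half the distance between the pupils. A minimal sketch in Python, assuming y is up, units are metres and an interpupillary distance (IPD) of 63mm; that figure is an assumed typical value, not a specification of either headset:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scale(self, s: float) -> "Vec3":
        return Vec3(self.x * s, self.y * s, self.z * s)


def eye_positions(head: Vec3, right_dir: Vec3, ipd_m: float = 0.063) -> tuple[Vec3, Vec3]:
    """Return (left_eye, right_eye): the head position shifted by half the IPD
    along the head's unit 'right' direction, one eye each way."""
    half = ipd_m / 2.0
    return head + right_dir.scale(-half), head + right_dir.scale(half)


# Head at standing eye height (1.7m), facing down -z, so 'right' is +x.
left_eye, right_eye = eye_positions(Vec3(0.0, 1.7, 0.0), Vec3(1.0, 0.0, 0.0))
print(left_eye, right_eye)
```

Each eye position then gets its own camera and its own rendered image, which is one reason VR is so demanding on the graphics card.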

The virtual world can be explored from any angle, simply by moving your head. Head movements are tracked by the HMD and the view of the virtual world adjusts instantly.

From BIM to VR

Most CAD and BIM models feature extremely detailed geometry, which is not needed for VR. Fully interactive VR software also has extremely high performance demands, so some form of model optimisation is required when bringing BIM data into a VR environment.

This is one area where specialist VR consultancies earn their keep with finely tuned processes for tasks like simplifying geometry, adding lighting, fixing gaps in the model and culling objects that will not be visible in the scene.
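
One of those steps, culling, can be illustrated in a few lines: elements a visitor will never see, such as reinforcement buried in concrete, or parts too small to matter, are dropped before the model is exported. A simplified sketch; the `Element` fields, category names and 2cm threshold are all hypothetical, and production pipelines also rely on occlusion tests and mesh simplification:

```python
from dataclasses import dataclass


@dataclass
class Element:
    name: str
    category: str
    triangles: int
    max_dimension_m: float       # longest edge of the element's bounding box
    visible_from_interior: bool


# Hypothetical rules: categories assumed to be concealed in the finished building.
CONCEALED_CATEGORIES = {"Insulation", "Rebar", "ConcealedDuct"}
MIN_SIZE_M = 0.02  # assumed cut-off: drop parts smaller than 2cm, such as screws


def cull_for_vr(elements: list[Element]) -> list[Element]:
    """Keep only elements a VR visitor could plausibly see."""
    return [
        e for e in elements
        if e.visible_from_interior
        and e.category not in CONCEALED_CATEGORIES
        and e.max_dimension_m >= MIN_SIZE_M
    ]


def triangle_count(elements: list[Element]) -> int:
    return sum(e.triangles for e in elements)


model = [
    Element("Wall-01", "Walls", 1_200, 6.0, True),
    Element("Rebar-Set-A", "Rebar", 250_000, 6.0, False),
    Element("Screw-114", "Fixings", 800, 0.01, True),
    Element("Chair-03", "Furniture", 15_000, 0.9, True),
]
kept = cull_for_vr(model)
print([e.name for e in kept], triangle_count(model), "->", triangle_count(kept))
```

Even in this toy model, the triangle count the GPU has to draw falls by more than 90 per cent once hidden and tiny elements are removed.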

“Our clients have the models, we have the headsets, the graphics engines and the knowledge of how to stitch all these together,” says Scott Grant, CEO of Glasgow-based design viz specialist Soluis.

Once the model is inside the VR environment, things like materials, lighting, furniture and other small details that make the VR experience feel real are added. Barcelona-based ARQVR, for example, even goes so far as to add tiny, personalised decorative objects such as paintings, books, logos and plants.

Additional programming work can be done to make the experience more interactive, such as enabling clients to experiment with design options, materials and lighting. Architects can flip between design schemes without leaving the VR environment.

For Autodesk’s Stingray demo of a San Francisco apartment, for example, London-based creative agency Rewind programmed in the ability to try out different furniture options or strip back the walls to reveal the building services.

Creating the most realistic and interactive VR experiences is a skilled process, so firms typically outsource or employ specialists.

Workstation hardware for smooth VR

Virtual reality demands extremely powerful workstation hardware. While most modern CAD workstations should satisfy the minimum requirements for CPU and memory (an Intel Core i5-4590 at 3.30GHz and 8GB RAM, or above), they will likely fall well short on graphics.

Both the Oculus Rift and HTC Vive require a graphics card capable of sustaining a minimum of 90 frames per second (FPS), rendering a separate image for each eye in every frame. Anything below this and the user is likely to experience nausea or motion sickness.
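
A quick calculation shows why that target is so demanding. At 90 FPS the workstation has about 11ms to produce each frame, and both eye views have to fit inside it. A back-of-envelope sketch that ignores overheads such as the headset software’s own processing:

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame at a given frame rate, in milliseconds."""
    return 1000.0 / fps


for label, fps in [("Film (24 FPS)", 24), ("VR headset (90 FPS)", 90)]:
    print(f"{label}: {frame_budget_ms(fps):.1f} ms per frame")

# If the two eye views were rendered one after the other, each would get half the budget.
print(f"Per eye at 90 FPS: {frame_budget_ms(90) / 2:.1f} ms")
```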

VR not only pushes the computational limits of GPU hardware, but can place huge demands on memory size and memory bandwidth as models need to load into GPU memory quickly.
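
To get a feel for the memory involved, the geometry of a model can be estimated from its vertex and triangle counts. A rough sketch, assuming an indexed triangle mesh with 32 bytes per vertex (position, normal and texture coordinates as 32-bit floats) and 32-bit indices; both layouts are assumptions for illustration, as real engines pack data differently:

```python
# Assumed vertex layout: position (3 floats) + normal (3 floats) + texture UV (2 floats),
# each a 4-byte float32, giving 32 bytes per vertex.
BYTES_PER_VERTEX = (3 + 3 + 2) * 4
BYTES_PER_INDEX = 4  # assumed 32-bit indices, three per triangle


def geometry_mib(vertices: int, triangles: int) -> float:
    """Approximate GPU memory for a mesh's vertex and index buffers, in MiB."""
    total_bytes = vertices * BYTES_PER_VERTEX + triangles * 3 * BYTES_PER_INDEX
    return total_bytes / (1024 ** 2)


# Hypothetical model: 10 million triangles sharing about 5 million vertices.
print(f"{geometry_mib(5_000_000, 10_000_000):.0f} MiB of geometry before any textures")
```

Textures usually add far more: a single uncompressed 4,096 x 4,096 RGBA texture is 64MiB (4,096 x 4,096 x 4 bytes).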

AMD recommends its top-end professional GPU, the AMD FirePro W9100 (available in 16GB or 32GB versions). It has also recently launched the Radeon Pro Duo, a hugely powerful liquid-cooled dual-GPU graphics card designed specifically for VR content creation and consumption.

[Update 16/02/17 – In September 2016 AMD released a new professional ‘VR Ready’ GPU, the Radeon Pro WX 7100 (8GB). Even though it is a single-slot card, it is more powerful than the AMD FirePro W9100. Read our full review].

Nvidia recommends its top-end Quadro M5000 or Quadro M6000 GPU (available in 12GB and 24GB versions).

[Update 16/02/17 – Nvidia has now released three more professional ‘VR Ready’ GPUs, the single-slot Quadro P4000 (8GB), and the dual-slot Quadro P5000 (16GB) and Quadro P6000 (24GB). Read our in-depth review of the Nvidia Quadro P4000.]

In many cases, a simple graphics card upgrade will turn your CAD workstation into one capable of running VR. However, this depends on what type of machine you have. Most VR-capable graphics cards take up two PCIe slots and need an auxiliary power connector and more than 150W of available power. You need to make sure your CAD workstation can satisfy these demands.
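
Those three checks are easy to run through before buying a card. A minimal sketch; the field names are hypothetical and the figures in the example are placeholders, not the specification of any real card or workstation:

```python
from dataclasses import dataclass


@dataclass
class GraphicsCard:
    slots: int              # number of PCIe slot widths the card occupies
    needs_aux_power: bool   # requires an auxiliary power connector
    power_w: int            # maximum power draw in watts


@dataclass
class Workstation:
    free_adjacent_slots: int
    aux_power_connectors: int
    spare_power_w: int      # power supply headroom left for the card


def upgrade_problems(card: GraphicsCard, ws: Workstation) -> list[str]:
    """List the reasons, if any, a card will not work in this workstation."""
    problems = []
    if card.slots > ws.free_adjacent_slots:
        problems.append("not enough free PCIe slot space")
    if card.needs_aux_power and ws.aux_power_connectors == 0:
        problems.append("no auxiliary power connector")
    if card.power_w > ws.spare_power_w:
        problems.append("power supply lacks headroom")
    return problems


card = GraphicsCard(slots=2, needs_aux_power=True, power_w=180)
small_desktop = Workstation(free_adjacent_slots=1, aux_power_connectors=0, spare_power_w=100)
print(upgrade_problems(card, small_desktop) or "OK")
```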

Nvidia has also launched a ‘VR Ready’ program to help users buy a workstation that is capable of running professional VR applications.

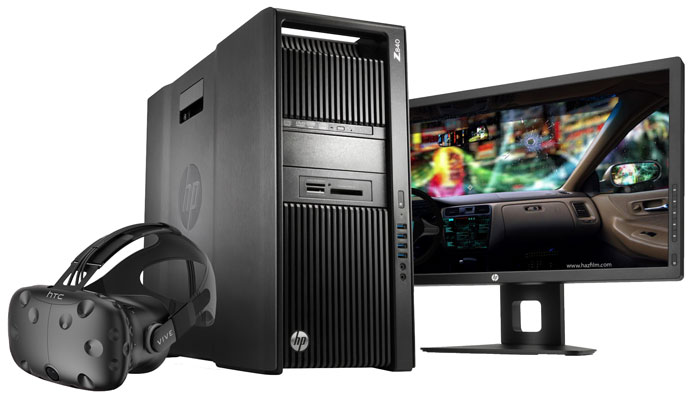

Workstation manufacturers Dell, HP and Lenovo have all announced ‘Nvidia VR Ready’ professional workstations. The HP Z240, Z640 and Z840, Dell Precision T5810, T7810 and T7910 and the Lenovo P410, P510, P710 and P910 all come with Nvidia-recommended configurations, as well as an Intel Core i5-4590/Intel Xeon E3-1240 v3 or greater CPU and an HTC Vive or Oculus Rift HMD.

[Update 16/02/17 – In January 2017 Dell launched a ‘VR Ready’ all-in-one workstation, the Precision 5720 with AMD Radeon Pro WX 7100 GPU. Read about it here]

MSI has also announced the first ‘Nvidia VR Ready’ mobile workstation, the MSI WT72, designed to handle VR-powered design reviews. It features the new Quadro M5500 GPU, which is said to be the world’s fastest mobile GPU. Eurocom also offers a number of laptops capable of powering the Rift or Vive.

[Update 16/02/17 – In January 2017 Nvidia and AMD launched new VR Ready mobile GPUs, the Nvidia Quadro P4000, Quadro P5000 and AMD Radeon Pro WX 7100. All the major manufacturers are in the process of launching 17-inch ‘VR Ready’ mobile workstations based on these new power efficient GPUs. These include the Dell Precision 7720, Lenovo ThinkPad P71, GoBOXX MXL VR and MSI WT73 VR].

Mobile VR is interesting for two reasons. First, it means VR is portable, making it easier to take VR to client offices or construction sites. Second, some laptops can be worn in a dedicated VR backpack, complete with additional battery, which means no trailing cables.

VR is not limited to professional GPUs. The recently announced AMD Radeon RX 480 and Nvidia GeForce GTX 1080 both meet the minimum requirements for the HTC Vive and the Oculus Rift and should work fine with VR game engines like Unreal Engine and Unity.

However, both AMD and Nvidia have said they are currently testing and certifying their professional graphics cards for a range of VR engines and VR software. Certification is likely to become more important moving forward, just as it already is for firms that rely on certified graphics and workstation hardware for running CAD software.

Increasing visual quality also demands much more from the workstation that renders the images in real time. Tricks such as light baking, where lighting is calculated in advance and stored with the model, can remove some of that load. However, this pre-rendering can take hours, even on a render farm.

Nvidia Iray VR takes realism to the next level by delivering physically based rendering inside the virtual world. The GPU-accelerated ray-traced rendering technology accurately simulates light to deliver stunning effects, such as reflections in the marble floor that change as you shift position.

There are some downsides. First, the pre-processing requires a serious amount of compute power. Even with eight ultra high-end GPUs, it would take over 100 hours to render a set vantage point within a scene (approximately a 2m x 2m x 2m box). You can move from side to side and experience parallax effects (such as looking round a column or over a balcony), but cannot walk freely within the scene.
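
The restriction can be pictured as a containment test: the pre-rendered data covers only the roughly 2m cube around the vantage point, so the viewer’s tracked head position has to stay inside it. A hypothetical sketch of that check; it illustrates the constraint described above and is not Iray VR’s actual code:

```python
Point = tuple[float, float, float]


def inside_vantage_box(head: Point, centre: Point, size_m: float = 2.0) -> bool:
    """True if the tracked head position lies inside an axis-aligned cube
    of side size_m centred on the pre-rendered vantage point."""
    half = size_m / 2.0
    return all(abs(h - c) <= half for h, c in zip(head, centre))


centre = (0.0, 1.6, 0.0)
print(inside_vantage_box((0.4, 1.7, -0.3), centre))  # leaning to look round a column: True
print(inside_vantage_box((1.5, 1.6, 0.0), centre))   # walked 1.5m away: False
```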

Second, while the output quality is excellent, it is a little wasted on current-generation HMDs. Viewing a ray-traced image on a 4K monitor is one thing but, on an HMD, the experience is completely different as you can still see the pixels. For both of these reasons, Iray VR is probably more of a technology for the future.

At the heart of design

Virtual reality does not need to be ‘photorealistic’, or even close, for it to be of huge benefit to architects. One can argue that spatial realism is much more important than photorealism. The rendered quality of WorldViz’s ‘Walk the Plank’ demo feels like a 3D game from five years ago, but it still stops many in their tracks.

By not aiming for the most realistic experience possible, developers can instantly open VR up to a much wider audience.

After all, how can VR become an essential tool for architects if you have to hand off your BIM model to a specialist to work on? By the time you get your VR-optimised model back, the design will have evolved. For VR to become pervasive in the AEC sector, and used at the heart of design, it needs to be instant.

This is the approach taken by IrisVR, whose Prospect software is specifically designed to help architects take BIM into VR in minutes. The idea is that whatever you see on screen inside your BIM software, you then see in VR. Simply press a button and the software takes care of everything: geometry optimisation, materials, lighting and all. [Update 16/02/17 – Read our hands-on review of IrisVR Prospect]

Enabling architects to effortlessly move between BIM and VR can truly revolutionise the way buildings are designed. At any stage of the design process, the architect can pop on a headset and instantly get a feeling of being inside the building. This simply cannot be matched by viewing a BIM model on screen.

Autodesk is also working on making VR much more accessible to architects. Project Expo is a technology preview that uses the cloud to bring BIM models from Revit into Stingray at the push of a button. [Update 16/02/17 – Project Expo is now a product called Autodesk LIVE. Read our hands-on review].

There are already signs of VR becoming pervasive in the AEC sector. AMD, for example, is currently talking to a large multinational engineering firm about putting VR on the desks of one in five of its employees. While many of the users will be designers, it is even more interesting to learn that management and implementers will also have access to VR headsets.

From Revit to VR – we test three push-button apps

We get hands-on with three Virtual Reality (VR) applications that work seamlessly with Autodesk Revit, weigh up their capabilities and assess how well they combine with the HTC Vive and the very latest workstation class GPUs.

Applications on test include Autodesk LIVE, Enscape and IrisVR Prospect.

Head Mounted Displays (HMDs)

Virtual Reality headsets (or HMDs) have been around since the 1990s, but early models were both ridiculously expensive and offered a relatively poor user experience, due to low resolutions, modest frame rates and poor head tracking.

However, the games and entertainment industry is now driving the market forwards at some pace. The Oculus Rift costs just £400. The HTC Vive comes in at £700. That puts these low-cost, consumer-focused but professional-quality headsets well within the reach of mid-size and smaller architecture and engineering firms.

With both headsets, most of the heavy-duty processing is carried out by a powerful PC or workstation with a high-end graphics card. The headset is tethered to the workstation by chunky USB and HDMI cables. This means the wearer has to be careful not to trip over trailing cables, although some VR setups route the cables above head height.

The headsets are quite heavy (around 0.5kg) but are padded and relatively comfortable to wear. Both have a combined display resolution of 2,160 x 1,200, split across both eyes, which equates to a per-eye resolution of 1,080 x 1,200.

This might sound like a lot, but as you are viewing the displays up close, there is some pixellation. David Luebke, vice president of graphics research at Nvidia, says that retinal resolution is 78m pixels per eye – 60 times that of current HMDs – so there is still some way to go. And things can’t progress too quickly, as graphics processing also needs to keep up.
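
The figures are easy to check. Splitting the combined 2,160 x 1,200 resolution between two eyes gives 1,080 x 1,200, or just under 1.3m pixels per eye, and 78m divided by that comes to roughly 60:

```python
combined_w, combined_h = 2160, 1200      # combined resolution of Rift and Vive
per_eye_w = combined_w // 2              # the width is split between the two eyes
per_eye_pixels = per_eye_w * combined_h  # pixels seen by one eye
retinal_pixels = 78_000_000              # retinal resolution per eye quoted by Nvidia

print(per_eye_w, per_eye_pixels, round(retinal_pixels / per_eye_pixels))  # 1080 1296000 60
```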

Refresh rates on the HTC Vive and Oculus Rift are incredibly high. While films and renderings give decent results at 24 frames per second (FPS), VR must run at 90 FPS – not only to reduce lag and enhance realism, but to eliminate the feeling of nausea or motion sickness experienced by some VR users.

However, the displays are only one part of the overall solution. It is the accuracy with which HMDs can track the physical position of the user’s head, so it can be synchronised with the virtual world, which makes them so powerful.

Both the Rift and Vive have rotational and positional head-tracking capabilities. This means you not only have a 360-degree view around a fixed point, controlled simply by turning your head (left, right, up and down and anywhere in between), but the user’s head is also tracked as it moves through 3D space.

This means objects will appear to move in relation to others. For example, you can shift your body to one side to look around a floor column, or lean over a balcony to see the road below – unless vertigo gets the better of you, that is!

The Oculus Rift is generally used for a seated experience. Movement is tracked by a single external infrared sensor, combined with a gyroscope, accelerometer and magnetometer built into the headset.

The headset currently ships with an Xbox One controller for navigation, but a pair of dedicated VR controllers (the Oculus Touch) will be launched later this year for a room-scale experience. [Update 16/02/17 – the Oculus Touch is now available.]

Setup is very easy, almost plug and play. This is great for novice users, especially clients, as they generally don’t need any help to get it working out of the box.

The HTC Vive is primarily designed for a standing, ‘room scale’ experience, which means the user can literally walk around a building (although the trackable area is limited to around 5m by 5m). The headset is tracked by two base stations, which can be mounted on walls or tripods.

The Vive ships with two VR wands (one for each hand), which have a selection of buttons including a trigger. Depending on the functionality of your VR software, you can use the wands to select objects, navigate between rooms or even mark up objects.

The HTC Vive is more complex to set up than the Rift, as you need to install and calibrate the two base stations, which should be placed above head height in opposite corners of the room, either wall mounted or on stands. This can be quite daunting for beginners.

VR can also be delivered using smartphones, which are attached to specially designed headsets such as the Samsung Gear VR and Google Cardboard. However, as the smartphone is used to both display and compute the images, performance is nowhere near as good as with the workstation-accelerated Vive and Rift. Both headsets also lack positional tracking, which is important for comfort and immersion. However, for VR panoramas, you can get a pretty decent experience.

[Update 16/02/17 – Graphisoft has since released a low cost, interactive VR solution for Google Cardboard based on its BIMx app for ArchiCAD. Read our review]

Health and safety

Feelings of nausea or motion sickness caused by HMDs used to be quite common. However, with new-generation headsets, better tracking and powerful workstations to maintain frame rates, this is now less of an issue. That said, VR should still be used sparingly, as some wearers report stress, anxiety, disorientation and eyestrain due to focusing on a pixellated screen. Oculus recommends at least a 10- to 15-minute break for every 30 minutes of use.

[Update 16/02/17 – Having used the HTC Vive for a few months now, I have become very accustomed to using VR. While I used to feel some disorientation, it now feels rather natural. No more vertigo, except in very extreme situations (think exposed steel girders, 500m up in the air). Some call this getting your ‘VR legs’.]

The other potential health and safety issue is physical injury. When you are immersed in a virtual world, it is very easy to forget where you are. While architectural visualisation shouldn’t encourage the same kind of reckless abandon you might get from shooting aliens, you still need to be careful.

The HTC Vive protects against bumping into walls with a ‘chaperone’ system that can alert the user when they are close to the edges of the room. However, moving around a room blindfolded with trailing cables to trip over still won’t get past some firms’ health and safety protocols, making a sitting VR or augmented reality experience more appropriate.
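
At its simplest, a boundary warning of this kind is a distance test against the edges of the play area. A minimal sketch, assuming a rectangular play area measured from one corner (the 5m x 5m room mentioned earlier) and a hypothetical 0.4m warning distance; it illustrates the idea and is not how the Vive’s chaperone is implemented:

```python
def distance_to_edge(x: float, z: float, width: float, depth: float) -> float:
    """Distance from floor position (x, z) to the nearest edge of a width x depth
    rectangle whose corner sits at the origin. Negative means outside the area."""
    return min(x, width - x, z, depth - z)


def should_warn(x: float, z: float, width: float = 5.0, depth: float = 5.0,
                warn_at_m: float = 0.4) -> bool:
    """True when the tracked user is closer to a boundary than warn_at_m."""
    return distance_to_edge(x, z, width, depth) < warn_at_m


print(should_warn(2.5, 2.5))  # centre of a 5m x 5m room: False
print(should_warn(4.8, 2.5))  # 0.2m from a wall: True
```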

VR can also have a positive effect on health and safety. Human Condition Safety is developing a fully immersive VR platform designed to increase safety of construction workers and reduce workplace risk. The company’s SafeScan software will help workers learn the correct ways to perform tasks, especially dangerous ones, in a ‘hyper-real’ gaming environment that uses BIM model data.

Navigation

VR headsets will often be used by all types of users, experts and novices alike, so navigation around a building needs to be easy. With the Oculus Rift, users can simply pick up an Xbox One controller and explore, in exactly the same way they would in a 3D game.

Of course, nothing is more natural than walking, but even with the ‘room-scale’ VR capabilities of the HTC Vive, you soon hit the limits of your physical room. This means you also need a way to navigate through the building from space to space.

TruVision allows users to teleport between rooms simply by pressing a button on an Xbox One controller.

The use of waypoint systems is a popular means of navigation for users of the HTC Vive. For example, in Autodesk’s Stingray demo, users simply point a virtual laser at a floating ‘hotspot’ within the 3D space and click to move.

BIM to VR specialist IrisVR uses a similar method, although navigation is not limited to pre-set portals. Here, users can move to virtually any point within the building by using the Vive to shine a virtual ‘torch’ on a surface and then clicking a button.
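
Both approaches come down to the same geometry: cast a ray from the controller and find where it meets a surface. A minimal sketch for the simplest case, a flat floor at a given height with y pointing up. It illustrates the general technique only and is not how Stingray or IrisVR implement it; real tools test the ray against the model’s actual geometry:

```python
from typing import Optional

Vec = tuple[float, float, float]


def teleport_target(origin: Vec, direction: Vec, floor_y: float = 0.0) -> Optional[Vec]:
    """Intersect a pointer ray with the horizontal plane y = floor_y (y is up).
    Returns the landing point, or None if the ray never reaches the floor."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dy >= 0.0:
        return None  # pointing level or upwards
    t = (floor_y - oy) / dy  # how far along the ray the floor is hit
    if t < 0.0:
        return None  # floor is behind the controller
    return (ox + t * dx, floor_y, oz + t * dz)


# Wand held at 1.2m, pointing forward (-z) and down at 45 degrees.
print(teleport_target((0.0, 1.2, 0.0), (0.0, -1.0, -1.0)))  # (0.0, 0.0, -1.2)
```

Clicking the button would then move the user’s viewpoint to the returned point.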

Professional VR specialist WorldViz is getting closer to producing true-scale virtual buildings with its so-called ‘factory scale’ VR, which can track up to ten users in spaces of up to 50m x 50m. To do this, it uses longer headset cables, hung from ceiling trusses, which move with the wearer.

VR treadmills have the potential for a VR experience with no boundaries. Infinadeck is an omnidirectional treadmill that allows the user to walk in any direction. It is said to react to the user’s movement, including their speed and direction, to keep them safely in the centre of the deck.

Meanwhile, Motion Chair, a research project from iSpacelab at Canada’s Simon Fraser University, allows the user to navigate virtual environments by leaning in the direction they want to go – much like sitting on a giant joystick.

Users don’t have to be restricted to moving from room to room. With bird’s eye or doll’s house views, buildings can be viewed from above and the user quickly teleported to a room of choice.

There’s also the good old-fashioned architectural flythrough. TruVision’s ‘drone’ mode allows users to fly through buildings – walls and all.

Design review and collaboration

Compared to dedicated AEC design review software like Navisworks or Tekla BIMsight, equivalent tools inside a VR environment are still very much in their infancy. This is particularly true of game engine VR experiences, where the onus tends to be on presenting a polished vision of a proposed building, rather than delivering practical tools for solving real-world design and construction problems.

But this will change. While most game engines strip out metadata, which is important for true BIM processes, when moving from Revit to Autodesk Stingray, data is not only retained, but users can click on objects and view the underlying attribute information.

Revizto, a dedicated tool for turning CAD models into navigable 3D environments, has a similar capability. In VR mode, users can click on an object to view information, including metadata.

Alternatively, they can pick an object from the object tree list and move to its location in the 3D model. Revizto also offers powerful issue tracking capabilities, but these are not currently available in VR. It will be interesting to see if they make their way into the virtual world.

IrisVR Prospect also retains BIM data when importing models from Revit, although the developers have not yet delivered tools to work with this data.

Having access to BIM data inside a VR environment could be a hugely powerful capability. For example, markups could be fed directly back to the BIM model for easy design resolution. Models could be displayed thematically, automatically colour-coded by member size, material type, construction status or availability in the supply chain.
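
A thematic view of this kind is essentially a lookup from an attribute value to a display colour. A minimal sketch, assuming each element carries a hypothetical `status` attribute carried over from the BIM model; the attribute name, status values and colours are all illustrative:

```python
RGB = tuple[int, int, int]

STATUS_COLOURS: dict[str, RGB] = {
    "complete": (0, 170, 0),       # green
    "in_progress": (255, 165, 0),  # amber
    "not_started": (200, 0, 0),    # red
}
UNKNOWN: RGB = (128, 128, 128)     # grey for elements with missing data


def colour_by(elements: list[dict], attribute: str, palette: dict[str, RGB]) -> dict[str, RGB]:
    """Map each element id to a display colour based on one attribute value."""
    return {e["id"]: palette.get(e.get(attribute), UNKNOWN) for e in elements}


elements = [
    {"id": "Column-A1", "status": "complete"},
    {"id": "Beam-B7", "status": "in_progress"},
    {"id": "Slab-L3"},  # no status recorded
]
print(colour_by(elements, "status", STATUS_COLOURS))
```

Swapping in a different attribute and palette (member size, material type or supply chain availability) gives the other thematic views without changing the function.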

IrisVR Prospect does have some elementary markup capabilities where users can use the HTC Vive wands to draw in the space. Capabilities like these will surely grow as VR becomes more ingrained in the design process.

While I don’t see a need for a full-blown VR BIM application (not least for health and safety concerns over long term use of HMDs), I do expect to see elementary design tools appear. Being able to use wands or hand gestures to change room dimensions or ceiling heights without having to go back and forth between BIM and VR would be a hugely powerful capability.

The problem with VR headsets is that they mostly promote a solo experience. Design review, as a true collaborative process, is most effective when done in teams.

Even if everyone wears an HMD and ‘lives’ in the same model, not being able to make eye contact and observe body language or expressions makes communication less effective – in the same way that a conference call always comes second to a face-to-face meeting.

WorldViz is investing heavily in collaborative VR and already uses avatars for so-called ‘co-presence’ experiences where users can interact with each other in the virtual world. Participants of a design review session can highlight different aspects of the design simply by moving their virtual hands or pointing lasers at objects. Seeing another person’s avatar within the VR scene can also stop real-life collisions!

Of course, participants don’t have to be in the same physical location. You could, for example, have a New York-based architect, London-based engineer and Munich-based cladding contractor all collaborating in the same virtual building.

VR does not have to mean users cut themselves off from the real world by donning a fully immersive headset.

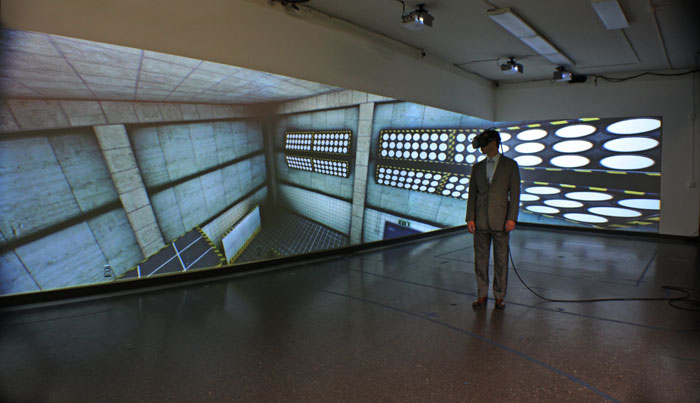

Soluis has developed a portable high resolution dome system that offers a standing interactive experience without the need for headsets. It can be used for collaborative BIM design reviews or interactive exploration of 3D models.

Radisson Hotels, for example, has used Soluis to model and explore design options for its next generation of hotel interiors.

While the dome is expensive to buy, at around £100,000, it can be rented for short or long periods of time.

Meanwhile, companies like WorldViz and Virtalis specialise in virtual reality CAVEs and walls that use powerful projectors and 3D glasses to deliver a 1:1 scale experience. Deploying a CAVE on a construction site, for example, could help problem-solve issues between the real and virtual worlds, combining laser-scanned, as-built data with design-intent CAD models.

Looking to the future and taking this idea a step further, Microsoft’s ‘mixed reality’ HoloLens could even be used to visualise a holographic 3D BIM model in context on site. Using augmented reality, users could solve issues by literally seeing the design model overlaid on a partially constructed building.

Conclusion

Since the arrival of the Oculus Rift and HTC Vive, VR hype has gone into overdrive. But to dismiss these exciting new VR technologies as a fad would be a mistake. For AEC firms in particular, the benefits can be huge.

We all know BIM can optimise the delivery of buildings by providing greater efficiencies at all stages of the building lifecycle. But BIM does not encourage exploration of form, space and aesthetics — the human elements of architecture — as VR can.

While there is currently a big emphasis on using VR to wow clients (and it does this exceedingly well), the true power of the technology will be realised when the architect or engineer takes control and it becomes an essential, integrated tool for design.

It is one thing to model a building in a 3D CAD system, but using VR to experience how it will feel and function can take design to a whole new level. Architects can exist inside their designs, encouraging bold new ideas and more iteration.

Add design review into the mix, for optimising designs or ironing out on-site construction issues, and VR gives a whole new meaning to virtual prototyping.

Of course, for all of this to go mainstream, VR needs to be quick and easy. Control needs to be put in the hands of architects and engineers. They need to be able to take buildings into a VR environment at the click of a button so that they can make informed design decisions at the exact moment they are needed most.

If this all sounds familiar, then that’s because there are many parallels between VR today and design viz ten years ago. Back then, design viz was still a specialist skill. Now, high-quality, push-button rendering is available within most CAD/BIM applications. I expect the same will happen with VR, making flipping between the real and virtual worlds a natural part of design.

But you can forget about solving construction issues at the top of a steel-framed skyscraper. This vertigo sufferer will be keeping his feet firmly on the ground.

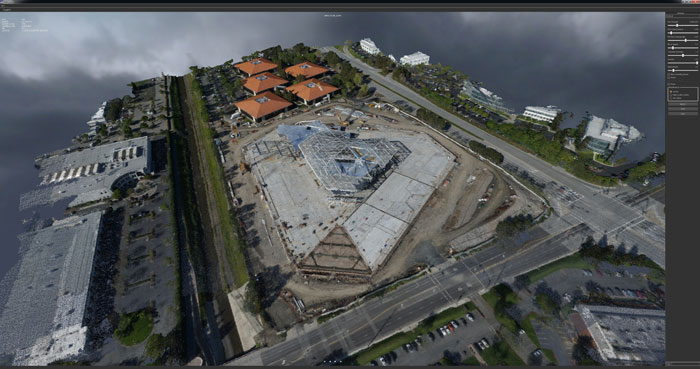

Virtual construction: exploring the real world

Nvidia is using its new Silicon Valley HQ to show how VR can be used to help track progress on a construction site. Wearing a VR headset, a site manager, client or architect could fly around a time-lapse point cloud that shows every little detail of the site. The colour point clouds are being created from hundreds of photographs, taken by a drone that flies over the site every day. In the VR environment, the user is able to step through the construction progress simply by pressing a button on an HTC Vive wand.

Users can see demolitions being completed, the site being graded, then foundations and the building starting to take shape. Navigation around the site is intuitive. Starting with a bird’s eye view, the user simply reaches out, then presses the trigger buttons and pulls the building towards them. The building can then be spun round like a turntable, or zoomed in further to look at details within the site.

The software includes basic measurement tools. Wands can be used to take horizontal and vertical measurements. This could help track progress of specific site components, such as how much of the highway has been completed on any given day.
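
The two kinds of measurement differ only in which components of the gap between the two picked points are used. A sketch, assuming y is the vertical axis and the wand positions are reported in metres (both assumptions):

```python
import math

Point = tuple[float, float, float]


def horizontal_distance(a: Point, b: Point) -> float:
    """Plan distance between two picked points, ignoring height (y is up)."""
    return math.hypot(b[0] - a[0], b[2] - a[2])


def vertical_distance(a: Point, b: Point) -> float:
    """Height difference between two picked points."""
    return abs(b[1] - a[1])


p1 = (0.0, 0.0, 0.0)
p2 = (3.0, 4.5, 4.0)
print(horizontal_distance(p1, p2), vertical_distance(p1, p2))  # 5.0 4.5
```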

If you enjoyed this article, subscribe to AEC Magazine for FREE