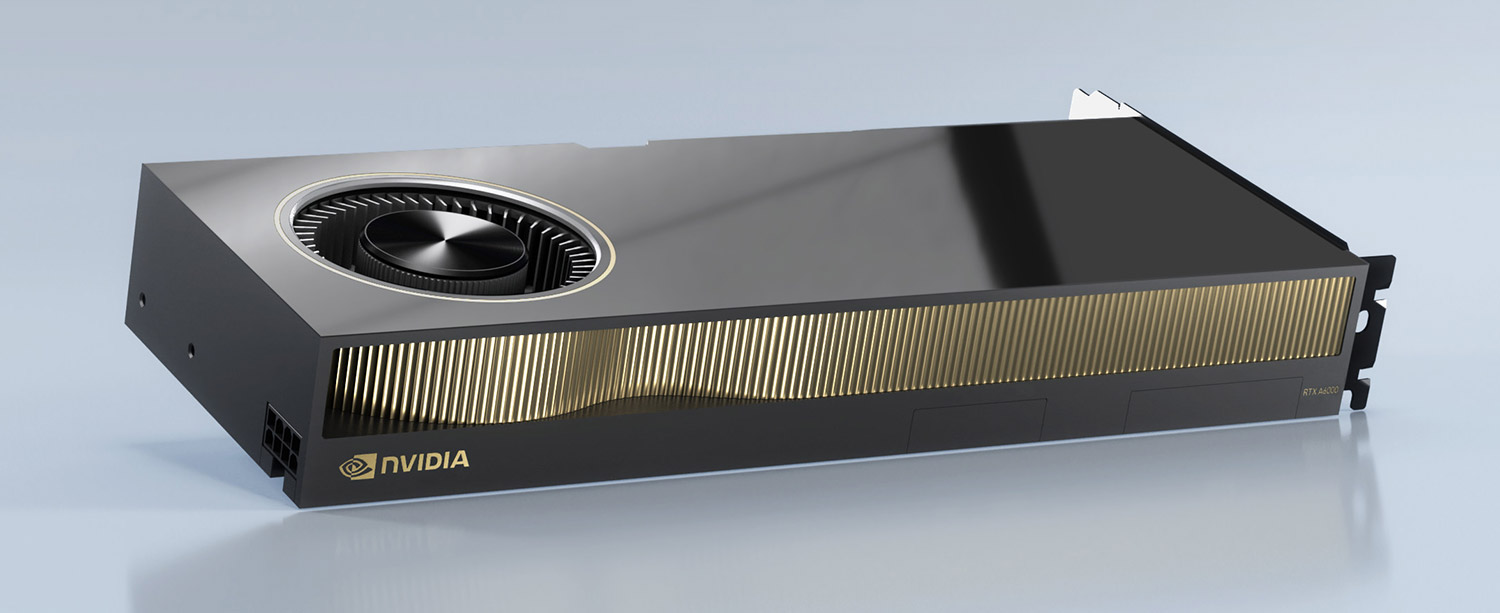

To help boost supply of its high-end professional workstation GPUs, Nvidia recently released a derivative of the RTX A5000 with slightly lower specs. Greg Corke looks at how the RTX A4500 stacks up in real-time viz, GPU rendering and VR workflows.

When Nvidia launched the 20 GB Nvidia RTX A4500 professional workstation GPU in November 2021, it took us a bit by surprise. We didn’t really see a need for a product to sit between the RTX A4000 (16 GB) and RTX A5000 (24 GB). The gap simply wasn’t that big.

But these are no ordinary times. The ongoing global semiconductor shortage has meant that supply of the RTX A4000 and RTX A5000 has been patchy, to say the least. Since these ‘Ampere’ GPUs launched in spring 2021 they have been very hard to get hold of, and some of the inflated prices we have seen online have been eye-watering.

The Nvidia RTX A4500 is a cut-down version of the RTX A5000. Its launch appears to have been driven largely by the silicon and components that Nvidia had at its disposal. In fact, this week at Nvidia’s GTC event, the company launched another new GPU, the Nvidia RTX A5500, a cut-down version of the RTX A6000.

Nvidia told AEC Magazine that the A5500 not only gives customers a bit of a performance boost over the A5000, but also improves the ‘overall availability of supply’. The company admits that supply would have been limited had it only been producing the RTX A5000 all year long.

What is the Nvidia RTX A4500?

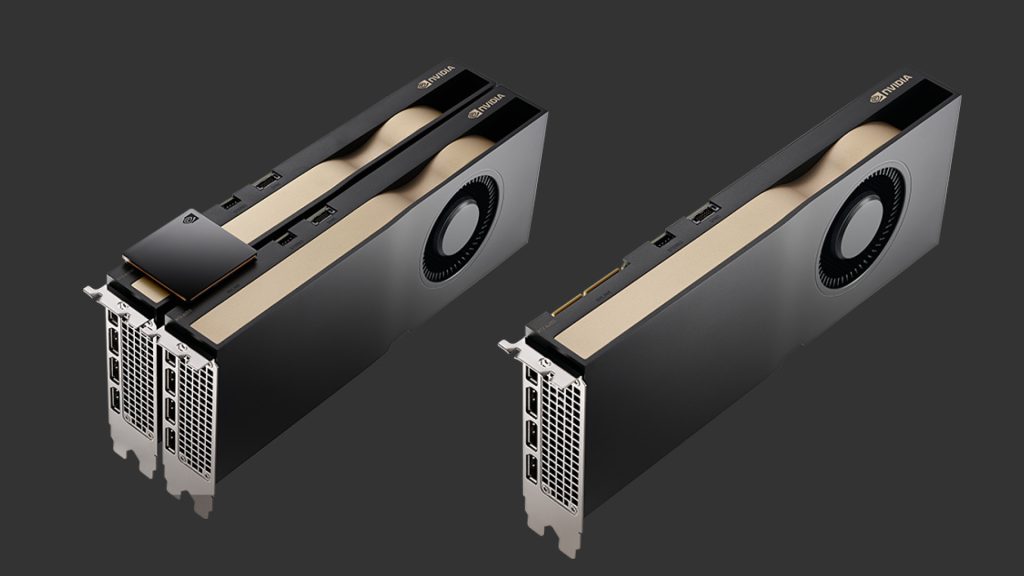

With a dual-slot PCIe form factor, the Nvidia RTX A4500 looks identical to the Nvidia RTX A5000, and it is much closer to that card in specs than it is to the single-slot Nvidia RTX A4000.

Compared to the RTX A5000, it has 4 GB less memory but features the same GA102 graphics processor, albeit with some cores disabled, a slightly lower clock speed and a slightly lower power draw (200 W vs 230 W, via a single 8-pin PCIe power connector).

The feature set of the RTX A4500 and A5000 is virtually identical: four DisplayPort 1.4a connectors, 3D stereo and NVLink. The latter means two A4500 GPUs can be physically connected to scale performance and double the memory to 40 GB in supported applications. This could be used to boost 3D frame rates in pro viz applications like Autodesk VRED Professional, or to render large scenes faster in GPU renderers like Chaos V-Ray.

The main difference between the two GPUs is that the RTX A4500 does not support Nvidia virtual GPU (vGPU) software. So, if you want to virtualise the graphics card to serve multiple users in a virtual workstation, you’ll need the RTX A5000, A5500 or A6000.

On test

We put the RTX A4500 through a series of real-world application benchmarks for GPU rendering and real-time visualisation.

All tests were carried out on the Intel Core i9-12900K-based Scan 3XS GWP-ME A124C workstation at 4K (3,840 x 2,160) resolution, using the latest Nvidia driver, 511.09.

A summary of the specs can be seen below. You can read our full review of the workstation here.

- Intel Core i9-12900K CPU

- 128 GB memory

- 2 TB Samsung 980 Pro NVMe SSD

- Microsoft Windows 11 Pro 64-bit

For comparison, we tested an Nvidia RTX A4000 GPU in the same workstation. We did not have an Nvidia RTX A5000 in our possession, so we took results from our June 2021 review. Back then our test machine was different: a Scan 3XS GWP-ME A132R with an AMD Ryzen 5950X CPU, running Windows 10 Professional.

With the AMD Ryzen 5950X having a different architecture and lower Instructions Per Clock (IPC), and the workstation running a different OS, there’s a hint of comparing apples and pears here. However, the results should still give a pretty good indication of relative performance.

One might expect the AMD CPU to bring down scores slightly in some of our real-time 3D tests. However, as most of these benchmarks are heavily GPU-limited rather than CPU-limited, we wouldn’t expect the CPU to influence the results that much.

In our GPU rendering tests, as the role of the CPU is minimal, the comparison should be more precise.

Real-time performance

There wasn’t a huge performance difference between the RTX A4500 and A5000 in our real-time 3D tests. With our automotive test model in Autodesk VRED Professional, for example, the A5000 was between 1.75% and 3.10% faster than the A4500, depending on the level of anti-aliasing applied.

We saw a similar result in arch viz tool Enscape, with a difference of 2.85% when testing our large office scene.

The RTX A5000 pulled further ahead in Unreal Engine, especially when real-time ray tracing was enabled on the Audi automotive model.

The gap between the RTX A4000 and A4500 was much bigger. This isn’t entirely surprising, as the RTX A4000 is a single-slot card, draws up to 140 W of power and features the lower-spec GA104 processor. The RTX A4500 was around 20-25% faster than the A4000 across the board.

GPU rendering

In our GPU rendering benchmarks, the lead of the RTX A5000 over the RTX A4500 was much bigger.

In the Chaos V-Ray RTX benchmark, for example, the A5000 was 9.5% faster, and in KeyShot 10 its lead rose to 26%. This may be because these benchmarks use all three types of processing core: CUDA, Tensor and RT (see box out below).

The RTX A4500 was consistently faster than the RTX A4000, between 20% and 26% in most of our tests.
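Percentage leads like these depend on which way round the ratio is taken. The exact formula behind the figures above isn’t stated, so this minimal sketch assumes the common convention: for frame rates (higher is better) the faster card’s lead is fps_fast / fps_slow - 1, and for render times (lower is better) it is time_slow / time_fast - 1. The function names and input numbers are hypothetical, not results from these tests.

```python
def percent_faster_from_fps(fps_fast: float, fps_slow: float) -> float:
    """Lead of the faster GPU, in percent, from frame rates (higher is better)."""
    return (fps_fast / fps_slow - 1.0) * 100.0


def percent_faster_from_time(time_fast: float, time_slow: float) -> float:
    """Lead of the faster GPU, in percent, from render times (lower is better)."""
    return (time_slow / time_fast - 1.0) * 100.0


def percent_time_saved(time_fast: float, time_slow: float) -> float:
    """Reduction in render time, in percent, relative to the slower GPU."""
    return (1.0 - time_fast / time_slow) * 100.0


if __name__ == "__main__":
    # Hypothetical numbers for illustration only
    print(percent_faster_from_fps(30.0, 24.0))         # 25.0
    print(percent_faster_from_time(100.0, 125.0))      # 25.0
    print(round(percent_time_saved(100.0, 125.0), 2))  # 20.0
```

The last line shows why the direction matters: a card that renders 25% faster cuts render time by 20%, not 25%.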

You can read more about our testing process in our June 2021 review of the Nvidia RTX A4000 and RTX A5000.

Conclusion

It’s a very interesting time for professional workstation GPUs, and GPUs in general. While you might have your eye on a specific model in terms of features and price/performance, what you end up buying might be dictated more by what’s available in the channel. UK workstation manufacturer Scan, for example, currently has a ‘significant quantity’ of RTX A4500s available for workstation builds, while the RTX A5000 remains in short supply.

The introduction of the RTX A4500 (and, this week, the RTX A5500) certainly gives customers options. If you’re looking for a powerful viz-focused GPU with lots of memory, then the RTX A4500 and RTX A5000 both pack a real punch. The price differential isn’t huge. And, with a little bit of luck, you may find one that fits your budget and workflows precisely.

What are Nvidia RTX GPUs?

Nvidia RTX GPUs are professional graphics cards designed specifically for workstations. They are standard in most HP Z, Dell Precision, Fujitsu Celsius and Lenovo ThinkStation workstations, and are also offered in workstations from smaller manufacturers such as BOXX, Scan and Workstation Specialists.

Nvidia RTX GPUs tend to have more memory than their consumer ‘GeForce RTX’ counterparts, so they can handle larger datasets, both in terms of geometry and textures. In the higher-end models, this is Error Correcting Code (ECC) memory, which detects and corrects memory errors to help protect against crashes.

There’s also a difference in software. With special drivers, Nvidia RTX GPUs are certified for a range of professional applications and are tested by independent software vendors (ISVs) and the major workstation manufacturers.

Nvidia RTX GPUs feature three types of processing cores:

- Nvidia ‘Ampere’ CUDA cores for general purpose processing.

- 3rd Gen Nvidia Tensor cores for AI and Machine Learning.

- 2nd Gen Nvidia RT cores which are dedicated to ray tracing.

Different applications support these cores in different ways. Most CAD and BIM applications, for example, simply use the CUDA cores for rasterisation, to turn vector data into pixels (a raster image).
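To make ‘turning vector data into pixels’ more concrete, here is a minimal CPU sketch of the coverage test at the heart of triangle rasterisation: each pixel centre is checked against the triangle’s three edges using signed-area ‘edge functions’. It is a simplified illustration, assuming a single triangle and a tiny grid; a GPU runs this kind of test across huge numbers of pixels in parallel, with extra rules for pixels that sit exactly on shared edges. All function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def edge(a: Point, b: Point, p: Point) -> float:
    """Signed area test: the sign says which side of the edge a->b point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterise_triangle(v0: Point, v1: Point, v2: Point,
                       width: int, height: int) -> List[List[int]]:
    """Return a height x width grid where 1 marks pixels whose centre is inside the triangle."""
    grid = [[0] * width for _ in range(height)]
    area = edge(v0, v1, v2)
    if area == 0:
        return grid  # degenerate (zero-area) triangle covers no pixels
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            w0 = edge(v1, v2, p)
            w1 = edge(v2, v0, p)
            w2 = edge(v0, v1, p)
            # Inside when all three edge tests share the triangle's winding sign
            if area > 0 and w0 >= 0 and w1 >= 0 and w2 >= 0:
                grid[y][x] = 1
            elif area < 0 and w0 <= 0 and w1 <= 0 and w2 <= 0:
                grid[y][x] = 1
    return grid


if __name__ == "__main__":
    # A right-angled triangle (vector data) turned into an 8 x 8 raster image
    for row in rasterise_triangle((0, 0), (8, 0), (0, 8), 8, 8):
        print("".join("#" if covered else "." for covered in row))
```

Checking the sign against the triangle’s overall area lets the same test work whichever order the vertices are supplied in.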

On the other hand, an increasing number of visualisation tools can use all three types of cores. Real-time arch viz software Enscape, for example, can use CUDA for rasterisation, RT cores to accelerate ray tracing calculations and Tensor cores for Nvidia Deep Learning Super Sampling (DLSS).

DLSS allows scenes to be rendered in real time at a lower resolution, after which deep learning-based upscaling is used to output ‘a clean and sharp high-resolution image’. The aim is to boost 3D performance or cut render times.
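Much of the potential speed-up comes from simple pixel arithmetic: the GPU renders far fewer pixels per frame before the upscaler produces the full-resolution image. The sketch below works this through for the 4K (3,840 x 2,160) resolution used in the tests above. The per-axis scale factors are an assumption, based on commonly cited values for DLSS 2 quality modes, and may differ by DLSS version and application; the deep learning reconstruction itself is not modelled, and the names are hypothetical.

```python
from typing import Dict, Tuple

# ASSUMPTION: commonly cited per-axis render scales for DLSS 2 modes;
# actual values can vary by DLSS version and application.
ASSUMED_DLSS_SCALES: Dict[str, float] = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_w: int, out_h: int, scale: float) -> Tuple[int, int]:
    """Resolution the scene is actually rendered at before upscaling."""
    return round(out_w * scale), round(out_h * scale)


def rendered_pixel_fraction(out_w: int, out_h: int, scale: float) -> float:
    """Share of the output pixels that are rendered natively each frame."""
    w, h = internal_resolution(out_w, out_h, scale)
    return (w * h) / (out_w * out_h)


if __name__ == "__main__":
    out_w, out_h = 3840, 2160  # 4K output
    for mode, scale in ASSUMED_DLSS_SCALES.items():
        w, h = internal_resolution(out_w, out_h, scale)
        share = rendered_pixel_fraction(out_w, out_h, scale)
        print(f"{mode}: renders {w} x {h} ({share:.1%} of output pixels)")
```

Under these assumptions, Performance mode starts each 4K frame at 1,920 x 1,080, just a quarter of the output pixels, leaving the Tensor cores to reconstruct the rest.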

Other applications that can be accelerated by Nvidia’s RT and Tensor cores include Chaos V-Ray, Chaos Vantage, Solidworks Visualize, Luxion KeyShot, Unity, Nvidia Omniverse, Unreal Engine, Autodesk VRED and others.