Architects and designers are increasingly using text-to-image AI models like Stable Diffusion. Processing is often pushed to the cloud, but the GPU in your workstation may already be perfectly capable, writes Greg Corke.

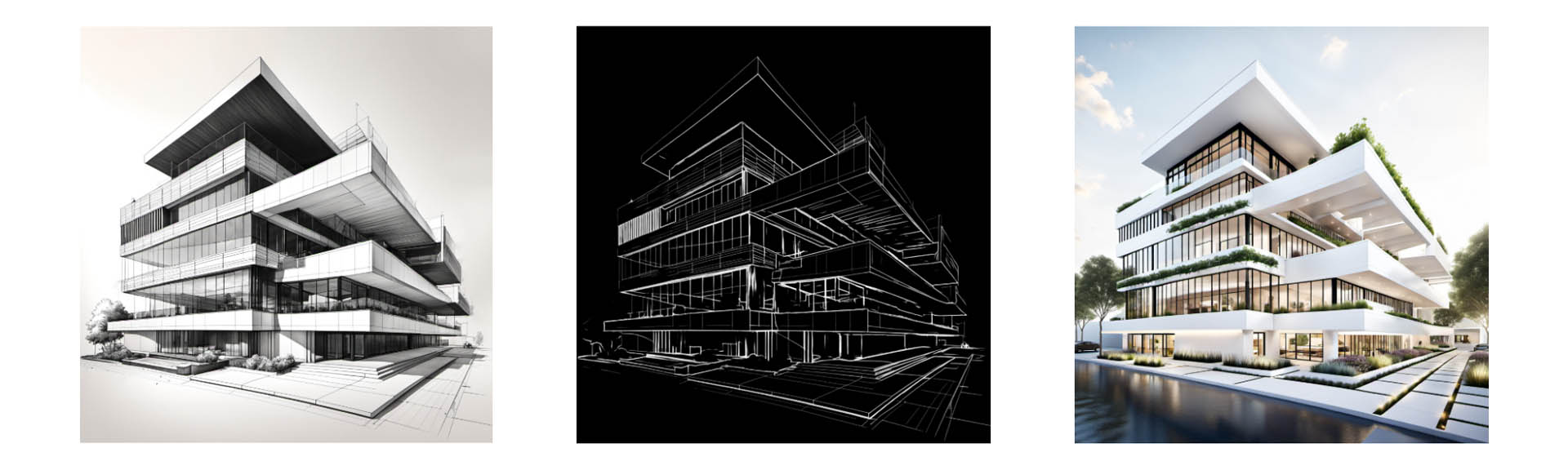

Stable Diffusion is a powerful text-to-image AI model that generates stunning photorealistic images based on textual descriptions. Its versatility, control and precision have made it a popular tool in industries such as architecture and product design.

One of its key benefits is its ability to enhance the conceptual design phase. Architects and product designers can quickly generate hundreds of images, allowing them to explore different design ideas and styles in a fraction of the time it would take to do manually.

This article is part of AEC Magazine’s 2025 Workstation Special report

Stable Diffusion relies on two main processes: inferencing and training. Most architects and designers will primarily engage with inferencing, the process of generating images from text prompts. This can be computationally demanding, requiring significant GPU power.

Training is even more resource intensive. It involves creating a custom diffusion model, which can be tailored to match a specific architectural style, client preference, product type, or brand. Training is often handled by a single expert within a firm.

There are several architecture-specific tools built on top of Stable Diffusion or other AI models, which run in a browser or handle the computation in the cloud. Examples include AI Visualizer (for Archicad, SketchUp, and Vectorworks), Veras, LookX AI, and CrXaI AI Image Generator. These tools simplify access to the technology, and there are also many ways to run vanilla Stable Diffusion in the cloud, but many architects still prefer to keep things local.

Running Stable Diffusion on a workstation offers more options for customisation, guarantees control over sensitive IP, and can turn out cheaper in the long run. Furthermore, if your team already uses real-time viz software, the chances are they already have a GPU powerful enough to handle Stable Diffusion’s computational demands.

While computational power is essential for Stable Diffusion, GPU memory plays an equally important role. Memory usage in Stable Diffusion is impacted by several factors, including:

- Resolution: Higher-resolution images (e.g. 1,024 x 1,024 pixels) demand more memory than lower-resolution ones (e.g. 512 x 512).

- Batch size: Generating more images in parallel can decrease time per image, but uses more memory.

- Version: Newer versions of Stable Diffusion (e.g. SDXL) use more memory.

- Control: Using tools to enhance the model’s functionality, such as LoRAs for fine-tuning or ControlNet for additional inputs, can add to the memory footprint.
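As a rough illustration of why resolution and batch size matter, the sketch below counts the values in the latent tensor that Stable Diffusion denoises. It assumes the standard SD 1.5 / SDXL latent layout (4 channels at one-eighth of the output width and height) and deliberately ignores model weights, text encoders, LoRA or ControlNet weights and attention buffers, so it shows relative scaling only, not total memory use. The function name is hypothetical.

```python
def latent_elements(width: int, height: int, batch_size: int = 1,
                    channels: int = 4, downscale: int = 8) -> int:
    """Count the values in the latent tensor processed at each denoising step.

    Assumes the SD 1.5 / SDXL VAE layout: `channels` latent channels at
    1/`downscale` of the output width and height. Weights and attention
    buffers are deliberately left out.
    """
    return batch_size * channels * (width // downscale) * (height // downscale)


base = latent_elements(512, 512)                        # 4 x 64 x 64
print(base)                                             # 16384
print(latent_elements(1024, 1024) / base)               # 4.0: four times the pixels
print(latent_elements(512, 512, batch_size=4) / base)   # 4.0: four images at once
```

Doubling the resolution on each side quadruples the latent data, as does a batch of four. Self-attention layers can grow faster than this, depending on how attention is implemented, so real memory use may rise more steeply than these ratios suggest.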

For inferencing to be most efficient, the entire model must fit into GPU memory. When GPU memory becomes full, operations may still run, but at significantly reduced speeds, because the GPU must then borrow the workstation’s system memory over the PCIe bus, which is much slower than the GPU’s own memory.

This is where professional GPUs can benefit some workflows, as they typically have more memory than consumer GPUs. For instance, the Nvidia RTX A4000 professional GPU is roughly the equivalent of the Nvidia GeForce RTX 3070, but it comes with 16 GB of GPU memory compared to 8 GB on the RTX 3070.

Inferencing performance

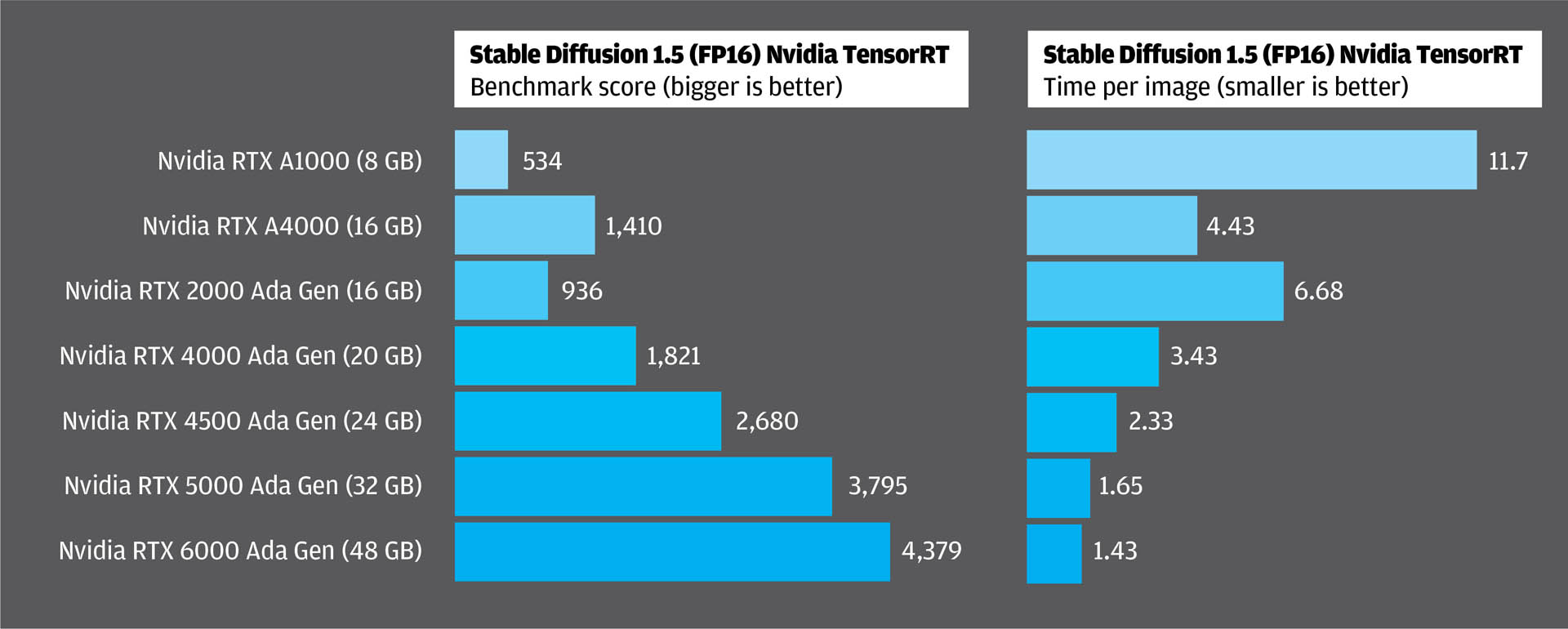

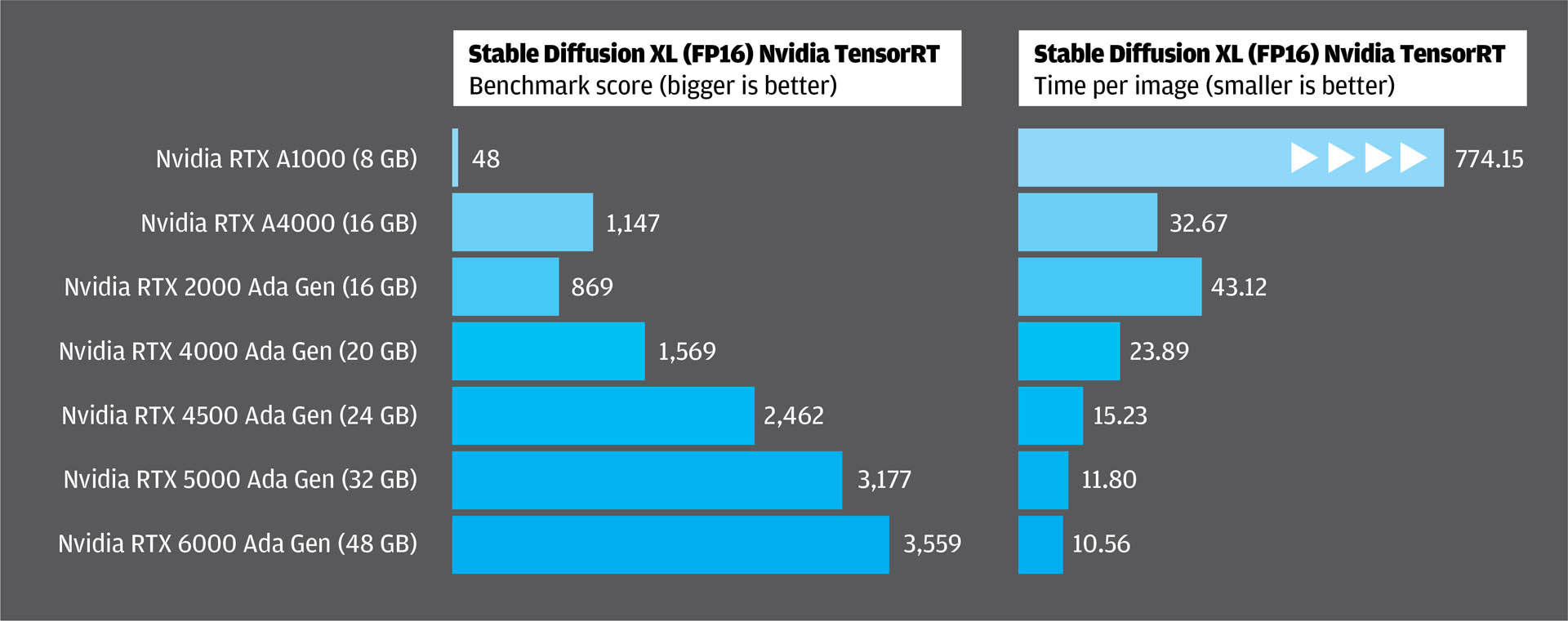

To evaluate GPU performance for Stable Diffusion inferencing, we used the UL Procyon AI Image Generation Benchmark. The benchmark supports multiple inference engines, including Intel OpenVINO, Nvidia TensorRT, and ONNX Runtime with DirectML. For this article, we focused on Nvidia professional GPUs and the Nvidia TensorRT engine.

This benchmark includes two tests utilising different versions of the Stable Diffusion model: Stable Diffusion 1.5, which generates images at 512 x 512 resolution, and Stable Diffusion XL (SDXL), which generates images at 1,024 x 1,024. The SD 1.5 test uses 4.6 GB of GPU memory, while the SDXL test uses 9.8 GB.
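Using the footprints reported for the two tests and the memory capacities of the GPUs discussed in this article (8 GB for the RTX A1000, 20 GB for the RTX 4000 Ada and 48 GB for the RTX 6000 Ada), a simple fit check shows which combinations should run entirely in GPU memory. The optional `headroom_gb` parameter is an assumption, standing in for memory already used by the OS, display or other applications; it is not part of the benchmark.

```python
# Footprints reported for the UL Procyon tests, in GB.
FOOTPRINT_GB = {"SD 1.5": 4.6, "SDXL": 9.8}

# GPU memory capacities of the cards discussed, in GB.
VRAM_GB = {"RTX A1000": 8, "RTX 4000 Ada": 20, "RTX 6000 Ada": 48}


def fits(footprint_gb: float, vram_gb: float, headroom_gb: float = 0.0) -> bool:
    """True if the workload plus any assumed headroom fits in GPU memory."""
    return footprint_gb + headroom_gb <= vram_gb


for gpu, vram in VRAM_GB.items():
    for test, need in FOOTPRINT_GB.items():
        status = "fits" if fits(need, vram) else "spills to system memory"
        print(f"{gpu:13s} {test:7s} {status}")
```

Only the RTX A1000 running SDXL fails the check, which is the case examined in the key takeaways below.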

In both tests, the UL Procyon benchmark generates a set of 16 images, divided into batches. SD 1.5 uses a batch size of 4, while SDXL uses a batch size of 1. A higher benchmark score indicates better GPU performance. To provide more insight into real-world performance, the benchmark also reports the average image generation speed, measured in seconds per image. The results are shown in the charts.
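The sketch below mirrors the structure described above: a fixed set of 16 images split into batches, with the average speed taken as total elapsed time divided by the number of images. It is not UL Procyon’s code. `generate_batch` is a hypothetical callback standing in for whatever inference engine renders one batch, and we assume the seconds-per-image figure is a simple wall-clock average.

```python
import time
from typing import Callable, List


def split_into_batches(total_images: int, batch_size: int) -> List[int]:
    """Return the size of each batch needed to produce total_images."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    full, rest = divmod(total_images, batch_size)
    return [batch_size] * full + ([rest] if rest else [])


def seconds_per_image(generate_batch: Callable[[int], None],
                      total_images: int = 16, batch_size: int = 4) -> float:
    """Time the whole run and average it over the number of images."""
    start = time.perf_counter()
    for size in split_into_batches(total_images, batch_size):
        generate_batch(size)  # hypothetical hook: render `size` images in one pass
    elapsed = time.perf_counter() - start
    return elapsed / total_images
```

With a batch size of 4, the SD 1.5 run makes four passes; SDXL, at a batch size of 1, makes sixteen.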

Key takeaways

It’s no surprise that performance goes up as you move up the range of GPUs, although there are diminishing returns at the higher end. In the SD 1.5 test, even the Nvidia RTX A1000 delivers an image every 11.7 seconds, which some will find acceptable.
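To put 11.7 seconds per image in context, converting it to throughput is simple arithmetic. This sketch assumes the benchmark average holds for a sustained run, which real workloads with different prompts, resolutions or models may not match. The function names are hypothetical.

```python
def images_per_hour(seconds_per_image: float) -> float:
    """Sustained throughput, assuming a constant time per image."""
    return 3600.0 / seconds_per_image


def minutes_for(n_images: int, seconds_per_image: float) -> float:
    """Wall-clock minutes to generate n_images at a constant rate."""
    return n_images * seconds_per_image / 60.0


print(round(images_per_hour(11.7)))        # 308
print(round(minutes_for(100, 11.7), 1))    # 19.5
```

At that rate, an entry-level card could still produce around 300 SD 1.5 images an hour for concept exploration.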

The Nvidia RTX 4000 Ada Generation GPU looks to be a solid choice for Stable Diffusion, especially as it comes with 20 GB of GPU memory. The Nvidia RTX 6000 Ada Generation (48 GB) is around 2.3 times faster, but considering it costs almost six times more (£6,300 vs £1,066) it will be hard to justify on those performance metrics alone.
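The value comparison can be made explicit by dividing the performance ratio by the price ratio. The figures are the ones quoted above (around 2.3 times faster, £6,300 versus £1,066); the function name is hypothetical, and “performance” here means benchmark speed only, ignoring the extra memory.

```python
def relative_value(speedup: float, base_price: float, new_price: float) -> float:
    """Performance per pound of the pricier card, relative to the cheaper one.

    1.0 means equal value; below 1.0 means less performance per pound.
    """
    price_ratio = new_price / base_price
    return speedup / price_ratio


price_ratio = 6300 / 1066                  # RTX 6000 Ada vs RTX 4000 Ada
value = relative_value(2.3, 1066, 6300)
print(f"price ratio {price_ratio:.2f}")    # price ratio 5.91
print(f"relative value {value:.2f}")       # relative value 0.39
```

In other words, each pound spent on the RTX 6000 Ada buys roughly 40% of the inferencing speed that a pound spent on the RTX 4000 Ada does, which is why the extra memory, rather than raw speed, has to justify the price.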

The real benefits of the higher end cards are most likely to be found in workflows where you can exploit the extra memory. This includes handling larger batch sizes, running more complex models, and, of course, speeding up training.

Perhaps the most revealing test result comes from SDXL, as it shows what can happen when you run out of GPU memory. The RTX A1000 still delivers results, but its performance slows drastically. Although its 8 GB is only around 2 GB short of the 9.8 GB the test needs, it takes a staggering 13 minutes to generate a single image, making it 70 times slower than the RTX 6000 Ada.
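The figures in this paragraph can be cross-checked with a little arithmetic. The sketch below uses the 9.8 GB SDXL footprint and the RTX A1000’s 8 GB to size the shortfall, and back-calculates the RTX 6000 Ada’s time per image from the rounded “13 minutes” and “70 times” figures, so that result is approximate. Treating the shortfall as the amount that spills is a simplification. The point it illustrates is that the slowdown is far from proportional: a modest overflow carries a huge penalty once data has to cross the PCIe bus.

```python
SDXL_FOOTPRINT_GB = 9.8   # reported by the benchmark
A1000_VRAM_GB = 8.0       # RTX A1000 memory capacity

# Simplification: assume only the shortfall spills into system memory.
shortfall_gb = SDXL_FOOTPRINT_GB - A1000_VRAM_GB     # about 1.8 GB
spilled_share = shortfall_gb / SDXL_FOOTPRINT_GB     # under a fifth of the footprint

a1000_seconds = 13 * 60                              # 780 s per image
implied_6000_seconds = a1000_seconds / 70            # rough, from rounded figures

print(f"shortfall {shortfall_gb:.1f} GB ({spilled_share:.0%} of the footprint)")
print(f"implied RTX 6000 Ada time {implied_6000_seconds:.1f} s per image")
```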

Of course, AI image generation technology is moving at an incredible pace. Newer image models such as Flux are emerging, while tools such as Runway and Sora can even generate video, which demands even more from the GPU. When deciding which GPU to buy now, it’s essential to plan for the future.

AI diffusion models – a guide for AEC professionals

From the rapid generation of high-quality visualisations to process optimisation, diffusion models are having a huge impact in AEC. In this AEC Magazine article, Nvidia’s Sama Bali explains how this powerful generative AI technology works, how it can be applied to different workflows, and how AEC firms can get on board.

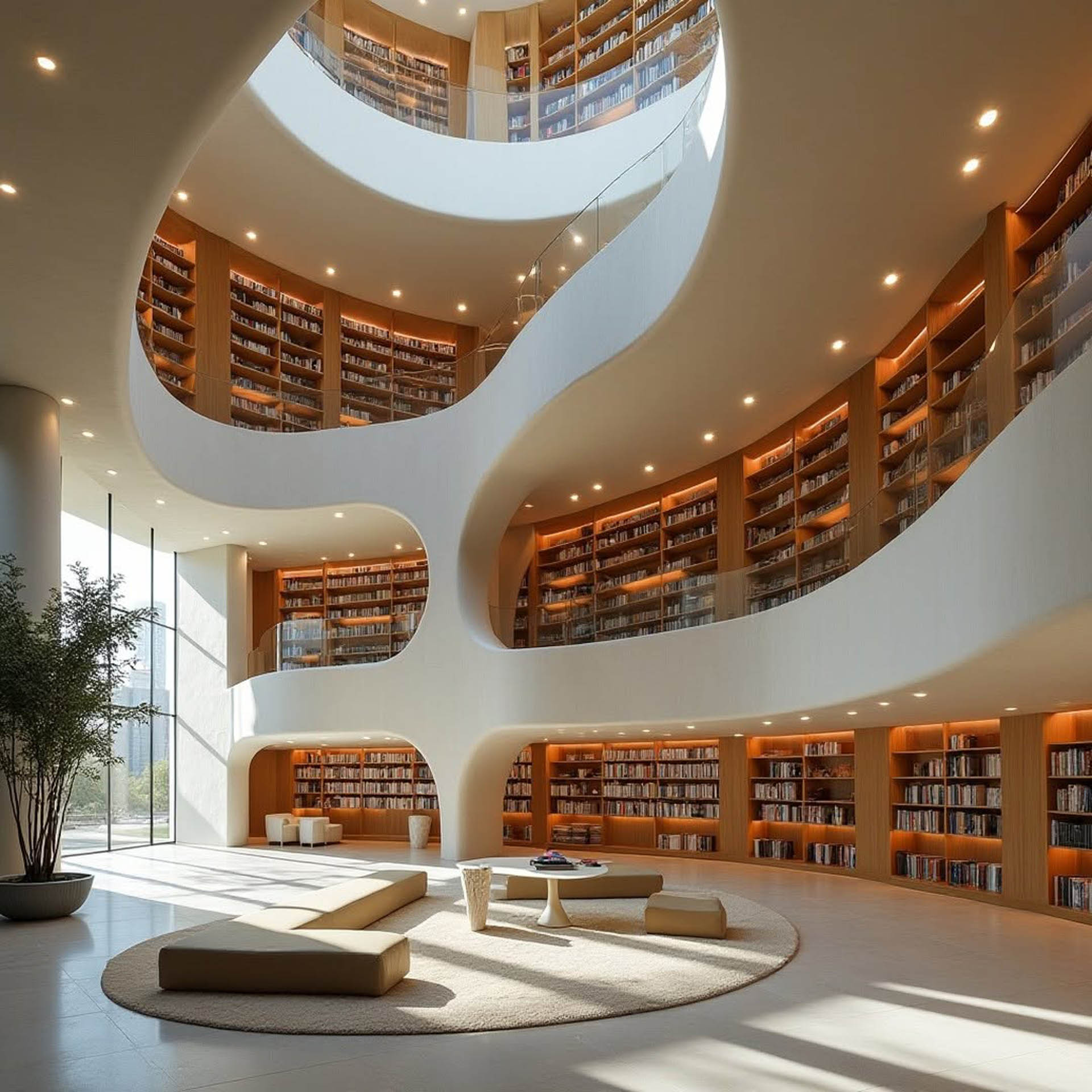

Main image: Image generated with ModelMakerXL, a custom-trained LoRA by Ismail Seleit. All Stable Diffusion architectural images courtesy of James Gray.
