Greg Corke reports on an easy-to-use rendering add-on for V-Ray for 3ds Max and Maya that uses lightfields to bring new levels of interactivity and dynamism to 360 VR renders

At Nvidia’s annual GTC event in 2016, CEO Jensen Huang unveiled Iray VR, a new technology that promised to bring photorealism to fully immersive virtual reality. It made for a phenomenal presentation but, as is often the case with GTC keynotes, there was a kicker. It took eight high-end GPUs one hundred hours to render the VR experience and, when viewed in VR, the low-res display of the HTC Vive didn’t really do justice to the photorealistic output. In truth, it was more of a technology demonstration than a viable commercial product.

Iray VR used lightfields, a technology that sits somewhere between a fully interactive VR experience and a static 360 VR render. Like a 360 VR render, it uses ray tracing, so the quality is photorealistic. But instead of viewing the scene from a single fixed point, the person wearing the VR headset can move their head a small distance in any direction — forward/backward, up/down, left/right. In other words, six degrees of freedom (6DoF).

In the case of the Nvidia demo it was a 1m cube, enough to give you the effect of motion parallax, but not enough to go for a wander. Shift your head to one side and objects that are close appear to move more than the ones further away. It meant you could peek around a column or over a balcony, revealing new detail within the scene, or simply get a much better sense of depth and immersion.
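To put rough numbers on that parallax effect, the sketch below works out how far an object directly ahead appears to shift when the head moves sideways. The 2m and 20m object distances are illustrative assumptions, not figures from the Nvidia demo; the 0.5m offset is simply the distance from the centre of a 1m cube to its edge.

```python
import math

def apparent_shift_degrees(head_offset_m: float, object_distance_m: float) -> float:
    """Angle by which an object straight ahead appears to shift when the
    viewer's head moves sideways by head_offset_m."""
    return math.degrees(math.atan2(head_offset_m, object_distance_m))

# Moving from the centre of a 1m cube to its edge is a 0.5m offset.
near_column = apparent_shift_degrees(0.5, 2.0)   # assumed column 2m away
far_wall = apparent_shift_degrees(0.5, 20.0)     # assumed wall 20m away

print(f"column: {near_column:.1f} deg, wall: {far_wall:.1f} deg")
# prints "column: 14.0 deg, wall: 1.4 deg"
```

The nearby column sweeps across roughly ten times as much of the view as the distant wall, which is the depth cue a single-viewpoint 360 render cannot provide.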

The idea behind Iray VR was that an architectural visualiser could create a series of these lightfields for a client presentation. The client could then teleport around the building from lightfield to lightfield, seeing it from different vantage points and at a much higher quality than a game engine.

Lightfields work by essentially rendering a scene from hundreds of points within a fixed volume. The problem with Iray VR was it took a brute force approach, so every single light probe within the cube had to be rendered individually, leading to prohibitively long render times.

Three years on and, even though we now have significantly faster GPUs, Iray VR would still probably be impractical to use on everyday hardware. But what if you could do things smarter?

Lightfields get smart

PresenZ is an application that takes a different approach to lightfields by rendering the scene selectively. It starts with a ‘detect phase’ that just sends ‘probe rays’ or ‘intersection rays’ that are very fast to cast and don’t need to evaluate any shader or lighting.

During this phase, the software scans and analyses the 3D scene from the Zone of View (ZOV), the volume box from which you view the scene, to work out which render rays actually need to be cast to generate the final render.

The ‘render phase’ then takes this list of rays with different origins and directions and casts them as standard camera rays from the render engine, which in turn will do the shading and lighting as usual.
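The two phases can be illustrated with a toy sketch. This is not PresenZ code: the article does not say how the software decides which rays are useful, so the rule used here (keep one ray per newly seen patch of surface) is an invented stand-in, and the sphere scene, the `probe`, `detect_phase` and `render_phase` names and the `cell` size are all hypothetical.

```python
import itertools
import math

# Hypothetical toy scene: two spheres given as (centre, radius), in metres.
SPHERES = [((0.0, 0.0, 5.0), 1.0), ((2.5, 0.0, 8.0), 1.5)]

def probe(origin, direction):
    """Cheap intersection ray: distance to the nearest hit along a unit
    direction, or None on a miss. No shading or lighting is evaluated."""
    nearest = None
    for centre, radius in SPHERES:
        oc = [o - c for o, c in zip(origin, centre)]
        b = sum(d * v for d, v in zip(direction, oc))
        c = sum(v * v for v in oc) - radius * radius
        disc = b * b - c
        if disc < 0:
            continue
        t = -b - math.sqrt(disc)
        if t > 0 and (nearest is None or t < nearest):
            nearest = t
    return nearest

def detect_phase(origins, directions, cell=0.25):
    """Assumed selection rule: keep one render ray per surface cell seen."""
    seen, render_rays = set(), []
    for origin in origins:
        for direction in directions:
            t = probe(origin, direction)
            if t is None:
                continue
            hit = tuple(o + t * d for o, d in zip(origin, direction))
            key = tuple(round(h / cell) for h in hit)
            if key not in seen:
                seen.add(key)
                render_rays.append((origin, direction))
    return render_rays

def render_phase(render_rays, shade):
    """Hand the selected rays to the (expensive) shading function."""
    return [shade(origin, direction) for origin, direction in render_rays]

def normalise(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

# Probe origins: the eight corners of a 1m x 1m x 0.5m ZOV (y is up).
ORIGINS = list(itertools.product((-0.5, 0.5), (-0.25, 0.25), (-0.5, 0.5)))
# Probe directions: a coarse 21 x 21 fan looking down +z.
DIRECTIONS = [normalise((x / 10, y / 10, 1.0))
              for x in range(-10, 11) for y in range(-10, 11)]

render_rays = detect_phase(ORIGINS, DIRECTIONS)
# Stand-in shader; a real renderer would evaluate materials and lighting.
pixels = render_phase(render_rays, shade=lambda origin, direction: max(0.0, direction[2]))
print(f"{len(ORIGINS) * len(DIRECTIONS)} probe rays -> {len(render_rays)} render rays")
```

The point of the split is that the cheap probe rays do the filtering, so the costly shading work is only spent on the rays that survive.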

The net result is you can create lightfields dramatically quicker. At the launch event at Escape Technology’s London office, PresenZ founder Tristan Salome told AEC Magazine that on average it takes only 50% longer than a standard 360 VR render. This equates to tens of minutes on a multi-core desktop workstation, and significantly less if you run it through a render farm. It’s also possible to create VR movies, rendered frame by frame.

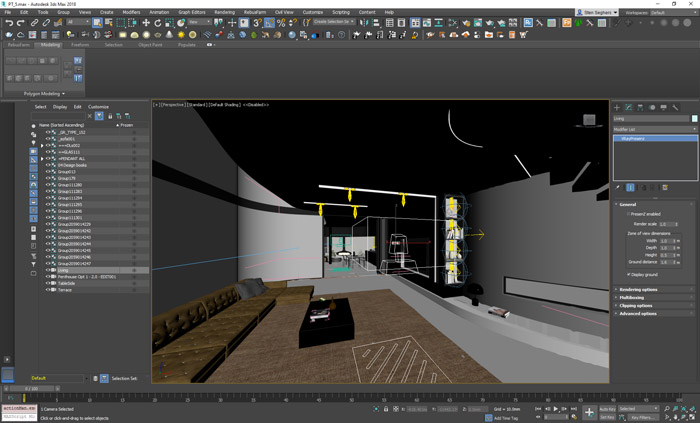

PresenZ is available as a plug-in for 3ds Max or Maya. It works with the V-Ray rendering engine, which means you not only get high-quality output, but most arch viz specialists should be able to use the software with little or no training. According to Salome, all you need to do is replace the existing camera with a special PresenZ camera that tells the renderer to shoot rays from certain origins and directions. All other assets remain the same.

PresenZ currently works with V-Ray CPU, but a V-Ray GPU version is coming soon. Plug-ins for Arnold for Maya and for Unity are also in development.

Renders are created from a ZOV volume box with a default size of 1m x 1m and a height of 0.5m. According to Salome, this is enough to support your natural head movement and make you feel that you are really inside the space.

It’s technically possible to make this box much bigger so the user can walk freely within the scene. However, this would not only increase render time, but put unnecessary load on the GPU when viewing the scene in VR. Best practice is to create a Multibox, which allows users to subdivide a large space into multiple ZOVs. Each ZOV is then loaded in and out of memory as the viewer moves between them. Salome says the transition between boxes is seamless.
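The Multibox idea can be sketched as a simple lookup: work out which ZOV the viewer's head is in and keep only that one loaded. This is a minimal illustration, not the PresenZ Dashboard's actual behaviour; the `Zov` and `Multibox` classes, the box names and the layout are all hypothetical, and only the default box size comes from the article.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Zov:
    name: str
    centre: tuple                    # (x, y, z) in metres, y is up
    size: tuple = (1.0, 0.5, 1.0)    # default box: 1m x 1m, 0.5m high

    def contains(self, point) -> bool:
        return all(abs(p - c) <= s / 2
                   for p, c, s in zip(point, self.centre, self.size))

class Multibox:
    """Keeps track of which single ZOV is currently held in memory."""

    def __init__(self, zovs):
        self.zovs = list(zovs)
        self.loaded = None

    def update(self, head_position):
        for zov in self.zovs:
            if zov.contains(head_position):
                if self.loaded != zov.name:
                    # A real viewer would swap the render data here.
                    self.loaded = zov.name
                return self.loaded
        return self.loaded  # outside every box: keep the last one loaded

# Two adjacent boxes along x, as an assumed layout.
space = Multibox([Zov("lobby", (0.0, 0.0, 0.0)), Zov("atrium", (1.0, 0.0, 0.0))])
for head in [(0.2, 0.0, 0.0), (0.9, 0.1, 0.0), (5.0, 0.0, 0.0)]:
    print(head, "->", space.update(head))
```

Because only one box is resident at a time, the memory and GPU cost of viewing stays close to that of a single default-sized ZOV, however large the overall space is.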

Output can be viewed on a standard desktop display or VR headset using the PresenZ Dashboard, a Windows application that also allows you to organise your renders, create Multibox structures and add teleport points between ZOVs.

The software works with any OpenVR-compatible headset, including HTC Vive, Oculus and Windows Mixed Reality. It’s also compatible with the excellent human-eye resolution Varjo headset. We imagine this delivers incredible results, but those on a tighter budget might be better off with the HP Reverb Pro Edition, which offers impressive resolution for the price: 2,160 x 2,160 per eye, which is nearly four times as many pixels as the original HTC Vive.
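The pixel comparison is easy to check with a few lines of arithmetic. The Reverb figure is the one quoted above; the original HTC Vive's 1,080 x 1,200 per eye is taken from its published specification rather than from this article.

```python
def pixels_per_eye(width: int, height: int) -> int:
    return width * height

reverb = pixels_per_eye(2160, 2160)   # HP Reverb Pro Edition
vive = pixels_per_eye(1080, 1200)     # original HTC Vive (published spec)

print(f"Reverb: {reverb:,} px, Vive: {vive:,} px, ratio: {reverb / vive:.1f}x")
# prints "Reverb: 4,665,600 px, Vive: 1,296,000 px, ratio: 3.6x"
```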

Controlling the narrative

Real-time engines like Unreal Engine and Unity can deliver excellent visual quality and give freedom to explore a building from any angle. However, for client communication, many architects still want to control the narrative, showing off the project from set vantage points. It also gives architects the opportunity to present designs without having to create a fully developed viz asset. “It’s that classic walk from one place to another place and you’re like ‘don’t go around that corner because we haven’t done that bit yet’,” laughs Lee Danskin, CTO of Escape Technology, as he describes a scenario that should resonate with many viz artists.

Conclusion

PresenZ offers an interesting new slant on architectural visualisation in VR. It bridges the gap between photorealistic static 360 VR renders and fully interactive game engine experiences which give you an incredible feeling of scale and immersion.

The big attraction of the software is that it promises to slot seamlessly into established workflows. V-Ray is still the number one renderer for arch viz, and apart from some special treatment that’s needed for transparent objects, PresenZ offers a simple way to extend the life of V-Ray assets and keep control of the narrative in client presentations.

Of course, PresenZ does face competition from game engines. The quality in Unreal Engine might not yet rival V-Ray, but it is excellent and set to get better with the addition of real-time ray tracing. But while there is considerable hype behind Nvidia RTX, the GPU horsepower required to deliver ray traced renders at the high frame rates that VR demands is still some way off.

Finally, a quick note about pricing. PresenZ is free to use but all images are watermarked. You can either license the PresenZ Dashboard viewer or pay per render using credits. It means V-Ray users can try out the technology for free, check the results and only pay for the final output if they like it. If you use V-Ray and want to bring a new level of dynamism to your static 360 VR renders, it’s definitely worth giving it a go.
