Design visualisation technology has seen huge leaps and bounds in the last five years, from real-time ray tracing to the availability of low-cost VR headsets. So, what happened to AR in AEC? It seems to have got lost on the way to the party. Martyn Day searches for answers.

Patience is a virtue. I guess it’s a virtue that technology fans are not renowned for. We always want the best processors, fastest graphics, and highest resolution displays in the smallest form factors. The industry does very well to try and keep up with our demands with regular new dollops of power every year, but sometimes it promises exciting new worlds and capabilities that always seem to be a few years away.

This all may sound very “it’s 2021 where is my flying car?” but I can’t help but feel that Augmented Reality (AR) headsets like the Microsoft HoloLens or Magic Leap 1 still seem experimental, too expensive and are so capability-constrained that they ultimately disappoint.

Looking at Virtual Reality (AR’s more popular cousin) there are a number of options, from consumer-priced headsets such as the Oculus Quest (£299), HTC Vive (£599) and Valve Index (£1,399) to high-end, high-fidelity enterprise solutions like the Varjo VR-3 (€3,195). While it was years in the making, and it took several decades to shrink the necessary hardware from the size of a room to the size of a phone, VR headsets have since rapidly dropped in price and become commodity items.

Some no longer require incredibly powerful workstations and many have managed to become free from cables and tethers. AR headsets, despite billions being spent in development by many companies, still have high-end price tags, tend to be fairly bulky and are out of reach for most in the AEC industry. All this leaves me wondering if AR will ever go mainstream and become a commodity, beyond holding up the phone or an iPad to get an image augmented overlay?

While there is a lot of talk about Apple working on some lightweight AR glasses, these have never appeared despite always being a year away. And the danger here is with Apple mobile solutions, all products in the ecosystem seem to be extensions of the phone. The Apple Watch is an extension of your phone, iPad is just a big phone, and I fear that the Apple glasses will primarily be a display for notifications and other personal digital assistant features.

Apple has pushed out the boundary for AR and its mobile devices with built-in LiDAR. However, most of the applications and commercial uses which I have seen, have tended to merely offer novelty value. AR is in need of a killer set of spectacles. To find out what the problems were, and if any breakthrough technologies were in the pipeline to change my perception, I talked with industry veterans Martin McDonnell (Soluis, Sublime, Edify), Chris Bryson (Sublime, Edify) and Keith Russell (Magic Leap, but formerly Autodesk, Virtalis and an industry XR consultant).

One of the fundamentals pushing the commoditisation of VR was the huge market for application in games. I asked McDonnell if this was a reason the AR headsets were not as advanced?

Martin McDonnell: AR does have the market appeal, but the current hardware can’t deliver at the price point. But when you can attain it, and the product experience levels up one more generation in terms of field of view, the usefulness will open up many, many, many markets around AR.

It’s the internet multiplied by mobile as a level of disruption. It’s definitely, in my opinion, going to change the game massively. The problem is it needs to be lightweight and cheap. It needs to be a device that has an 8-hour battery life. All of those boxes need to be ticked. When that happens, then everything opens up and it isn’t just enterprise that can afford it.

Chris Bryson: Microsoft [with the HoloLens] has come into the market and included the whole SLAM [Simultaneous Localization and Mapping] technology, all of the AI, the onboard processing, as effectively a proof of concept to enable people to develop high-end AR apps.

This shows you what could be done. The issue is that everything else, the display technology itself, the battery technology, is two or three generations away from doing the same thing that Facebook has just done with the Quest 2. I’d say there’s five plus years to get there.

Now who’s got the deep enough pockets to pay for all that? Well, interestingly, you know Snap (as in Snapchat) just bought WaveOptics which is a provider of AR display technology based in Oxford. Its key technology is using semiconductor processing, high volume processing to make the displays. Microsoft and Magic Leap use waveguide optical technologies, which are almost bespoke, almost one offs. WaveOptics have got a volume manufacturing process going for AR glass and Snap has already proved it’s interested in productising AR glasses. These commodity displays solve one of the key problems and that’s cost.

As to CPUs, Qualcomm is still the only game in town in terms of processors for the masses, while Apple makes its own silicon. Qualcomm is the provider of the processor technology, the 5G technology that’s going into the Oculus Quest 2, but really in the future, instead of having all of that processing on the device, the next step is to use 5G, so you can have that processing in what we call Edge Computing, off the headset, and then you’re just streaming that data to and from the headset.

This means the headset can be super lightweight. You don’t need a big battery, you just need good display technology, 5G and some cameras. All of the cool stuff, all the AI, all of the processing is taken off the device.

Remote control

Removing the processing overhead from the device and using 5G to stream data is a very interesting concept. 5G can deliver in excess of 100 Megabits-per-second (Mbps) and enable headsets to connect to cloud-based compute power. McDonnell and Bryson have already carried out a simple proof of concept on the Glasgow subway 5G network.

Martin McDonnell: It’s not so much bandwidth, but latency that’s the problem. We could almost get there with 4G today, in terms of throughput with compression, but actually super low latency, sub-20 millisecond latency is what’s needed. At that point everything opens up because with the types of BIM models we’re talking about, massive CAD models, all of those can be on your server. You don’t need to then try and lightweight and optimise them to run on a mobile phone type of processor.

That, to me, is the solution looking forward, as you’re always going to prefer to have a big, beefy bit of processing on-site somewhere, and with 5G, as soon as that becomes commonplace, or at least installable where you need it, then we can go really lightweight on the user’s device.
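McDonnell's point about latency can be made concrete with a rough budget. The sketch below is illustrative only: it assumes the "sub-20 millisecond" figure is an end-to-end target from head movement to updated image, and every per-stage number is a made-up placeholder rather than a measurement from the Glasgow trial or any real network.

```python
from dataclasses import dataclass

# "Sub-20 millisecond" target quoted in the interview, treated here
# (as an assumption) as the end-to-end budget for one streamed frame.
TARGET_MS = 20.0


@dataclass
class Stage:
    """One step in a hypothetical remote-rendering pipeline."""
    name: str
    millis: float


def total_latency_ms(stages):
    """Sum the time spent in every stage of the pipeline."""
    return sum(stage.millis for stage in stages)


def fits_budget(stages, budget_ms=TARGET_MS):
    """True if the whole pipeline stays under the latency budget."""
    return total_latency_ms(stages) < budget_ms


# Placeholder figures: only the network round trip differs between the two.
EDGE_5G = [
    Stage("send head pose", 1.0),
    Stage("render on server", 5.0),
    Stage("encode frame", 3.0),
    Stage("network round trip", 6.0),
    Stage("decode and display", 4.0),
]
PUBLIC_4G = [
    Stage("send head pose", 1.0),
    Stage("render on server", 5.0),
    Stage("encode frame", 3.0),
    Stage("network round trip", 40.0),
    Stage("decode and display", 4.0),
]

if __name__ == "__main__":
    for label, stages in (("edge 5G", EDGE_5G), ("public 4G", PUBLIC_4G)):
        print(label, total_latency_ms(stages), "ms, fits:", fits_budget(stages))
```

With these assumed numbers the rendering and encoding stages already use up much of the budget, which is why the network round trip, rather than raw throughput, decides whether the headset can be a thin client.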

Local delivery

With so many companies already having powerful desktop workstations, especially with GPUs like Nvidia RTX, could local workstations be used instead of expensive high-spec cloud instances?

Martin McDonnell: 100%, yes. We are enabling that for a VR customer right now and they want to go with Quest 2 or Pico G2 headsets, but you can pretty much throw up a custom 5G solution that will give you access to your local RTX power. It’s mind-bendingly awesome when you can throw around colossal datasets and see them on a sub £300 headset. We’ve got a few problems with Oculus, which is owned by Facebook and there are privacy concerns.

Chris Bryson: CloudXR from Nvidia is another solution which enables access to your RTX on your beefy machine, which can be tethered to a 300 quid headset. You could use Wi-Fi, but the nice thing is that 5G has low enough latency to do that too. So in theory, one server, one card can do multiple users, maybe up to four users.

With infrastructure that’s going to be around in the next year or two, we will be able to do some really amazing things and that’s what we’re targeting with Edify, initially for VR, immersively being able to bring in big CAD models, with a server probably on premise with Wi-Fi. And then, very quickly, to be able to expand that to AR with edge compute, which is basically the cloud as long as the datacentre is in your country or near your setting.

In the future, 5G service providers will make much smaller base stations that will also go within offices. So here – offices, factories, large enterprises – will be able to deploy their own 5G on premise as well.

The whole 5G protocol was designed with the specification of ‘a million subscribers within one square kilometre in a city, getting up to a gigabyte of bandwidth each’. Now, that’s not been achieved yet. The concept was designed with countries like South Korea in mind, with really dense coverage of people that need low latency, high bandwidth mobile. As soon as the AR glasses are cheap enough, you could have everybody walking around in a city, and all of that cool processing that currently takes a £4K HoloLens to do SLAM like object recognition, etc., you’ll be able to do that on the beefier processors in the datacentre.
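The throughput side of the streaming argument (a link delivering in excess of 100 Mbps, video compression, and one server feeding several headsets) comes down to simple arithmetic. The sketch below is a back-of-envelope estimate, not a description of how CloudXR or any headset actually encodes video: the resolution, frame rate and compression ratio are all assumptions chosen for illustration.

```python
def stream_mbps(width, height, fps, bits_per_pixel=24, compression_ratio=300.0):
    """Estimate the bitrate of one compressed video stream in Mbps.

    compression_ratio is an assumed figure; real codecs vary widely
    with content and quality settings.
    """
    raw_bits_per_second = width * height * fps * bits_per_pixel
    return raw_bits_per_second / compression_ratio / 1e6


def users_supported(link_mbps, per_user_mbps):
    """How many whole streams fit into a link of the given capacity."""
    if per_user_mbps <= 0:
        raise ValueError("per_user_mbps must be positive")
    return int(link_mbps // per_user_mbps)


if __name__ == "__main__":
    # Assumed stream: both eyes side by side at 3664 x 1920, 72 frames per second.
    per_user = stream_mbps(3664, 1920, 72)
    print(f"per-user stream: {per_user:.1f} Mbps")
    print("users on a 100 Mbps link:", users_supported(100.0, per_user))
    print("link needed for four users:", f"{4 * per_user:.0f} Mbps")
```

Under these assumptions a single 100 Mbps link carries a couple of streams, so serving four users from one card is as much a question of the network as of the GPU.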

Development velocity

McDonnell and Bryson come from an application development background. I wondered if part of the reason AR was embryonic was because the developer tools are not there yet?

Martin McDonnell: I think they are there. I think the problem has somewhat been pre-solved by VR. VR is a really good test ground for AR applications and with the Varjo-type crossover headset (XR-3 mixed reality) we can start in VR and move to AR and we get to continue to test. We see VR to AR as a continuum, the same data flowing through XR experiences. I believe in the future, VR and AR terminology goes away and it will be XR or something, and we’ll just talk about that digital reality, a digital overlay.

I think what’s missing is a massive body of knowledge and skill and experience in UX design. If we think back to when the web arrived in the 90s everybody lifted their same desktop layouts and slapped them on the web. We had page layouts from magazines all over the Internet for years until someone said ‘wait a minute, I’m on a screen, I’m scrolling, this is different’. Eventually the penny drops, and we get things like apps, and we get a whole different form of experience on the screen. I think that’s the journey we need to go on, particularly for AR.

Hardware limitations

One of the major technical limitations of today’s generation of AR headsets is the limited field of view in which overlays can be displayed. Human eyes are amazing things with a very wide field of view. The clipping point where the computer graphics stop is all too apparent and hampers the immersive experience. I asked if the limited field of view in all AR headsets stops people from adopting them.

Martin McDonnell: I don’t think it really matters yet, because they just haven’t got a lightweight cheap device that everyone could have a go at. I think the field of view on Magic Leap and HoloLens 2 is OK for a bunch of tasks. It’s perfectly functional and useful. But not as an immersive experience, to get close to the kind of feeling of emotion you get from VR. Digital objects overlaid in the real world.

Chris Bryson: That’s really difficult to do! It’s definitely a ‘Scotty can you change the laws of physics?’ One technology that might work is micro LEDs, but they are right in their infancy. Facebook bought a couple of small micro LED companies, one actually from Scotland, to create tiny projectors, because the smaller your projector is, the easier it is for you then to control the light and make those wider viewing angles. It’s now kind of a landgrab for some of that key fundamental technology.

Battery technology is never going to improve that quickly, as far as I can see. Again, it’s offload on the cloud, all that processing, and then you don’t need to use so much power.

Martin McDonnell: I actually think the right solution for meeting the short-term needs will be to use the phone. We accept the weight and size of that in our pocket these days. Using that to drive your glasses is a really obvious one – it’s effectively what Magic Leap did, but with a physical tether. I think you’ll carry a battery in your pocket to power the device.

That point brings me to the conversation I had with Keith Russell, director, enterprise sales EMEA at Magic Leap. Russell is an industry visualisation veteran, having worked with both CGI software developers and mixed reality, VR and AR hardware firms.

Magic Leap is an infamous company in AR for a number of reasons – having raised $3.5 billion in funding with significant input from Google, Disney, Alibaba and AT&T, being notoriously secretive, yet loving hype, having a real character of a CEO – Rony Abovitz – being almost sold for $10 billion and then hitting a wall when the long hyped product, a pair of $2,300 goggles, eventually shipped.

Magic Leap became kind of the ‘WeWork of AR’. Rather than describing Rony Abovitz, it’s probably best to watch his TED talk, ‘The synthesis of imagination’, which is probably one of the weirdest TED talks ever given.

However, the device it did produce was beautiful, perhaps not quite as radical and game changing as the company had promised – it still had a narrow field of view, expensive lenses, and the CPU and GPU came in a ‘Lightpack puck’, but these were somewhat underpowered. It became a popular tool amongst developers, less so a commercial success.

In 2020, the company slimmed down and got in new management, headed up by Peggy Johnson (formerly executive Vice President of business development at Microsoft). Magic Leap secured new investment and has since re-focussed on the enterprise market, aiming at applications in the medical, training, factory and construction markets. Its next generation device is currently in development and will be available in 2022.

It promises to challenge existing form factors, being half the size and half the weight of the first generation with double the field of view. That really would be an interesting device, but it’s not going to be cheap.

Architectural barriers – AR in AEC

AR has certainly piqued most interest in construction and field work, as opposed to design and architecture. I asked Russell why that was?

Keith Russell: In terms of AR adoption in AEC, it’s key to understand the different groups and types of companies within the AEC market. I think architects, generally, have been slower to adopt the technology, partly because of their need to pick a project to use it on, and the consideration of how they will bill it to a project or client. It’s a bit chicken and egg, and hence it’s the case that their typical billing method makes them cautious of adopting new technology without a project to apply it to.

With engineering and construction companies however, it’s different. If there’s a tool that can be proven to save time or money on a project, or does something faster, they’re much more receptive because they’re not billing it; instead it directly helps them save costs. They tend also to have a budget for internal tools and development.

Hence, if you get the chance to explain the advantages of AR collaborative meetings vs sending six people to site to hold a meeting, it’s an easy time and cost saving.

When Covid restrictions came into play, it wasn’t possible to send six people onsite, so that really accelerated the adoption rate. Hence, the industry started reaching for tools that allowed them to virtually meet or at least have one person onsite guiding the conversation with other remote based colleagues. Therefore, we saw a huge interest in smart glasses to use as a connected device for the ‘see what I see’ use case. It’s not augmented reality, but a simple ‘assisted reality’ use case that allows you to do a multiperson Teams call on a hands-free device.

This has led to a lot of companies and teams in construction getting very comfortable with wearable devices, so now we see a second wave of interested companies as they look to see what else is possible with wearable devices and want to move up to full augmented reality solutions.

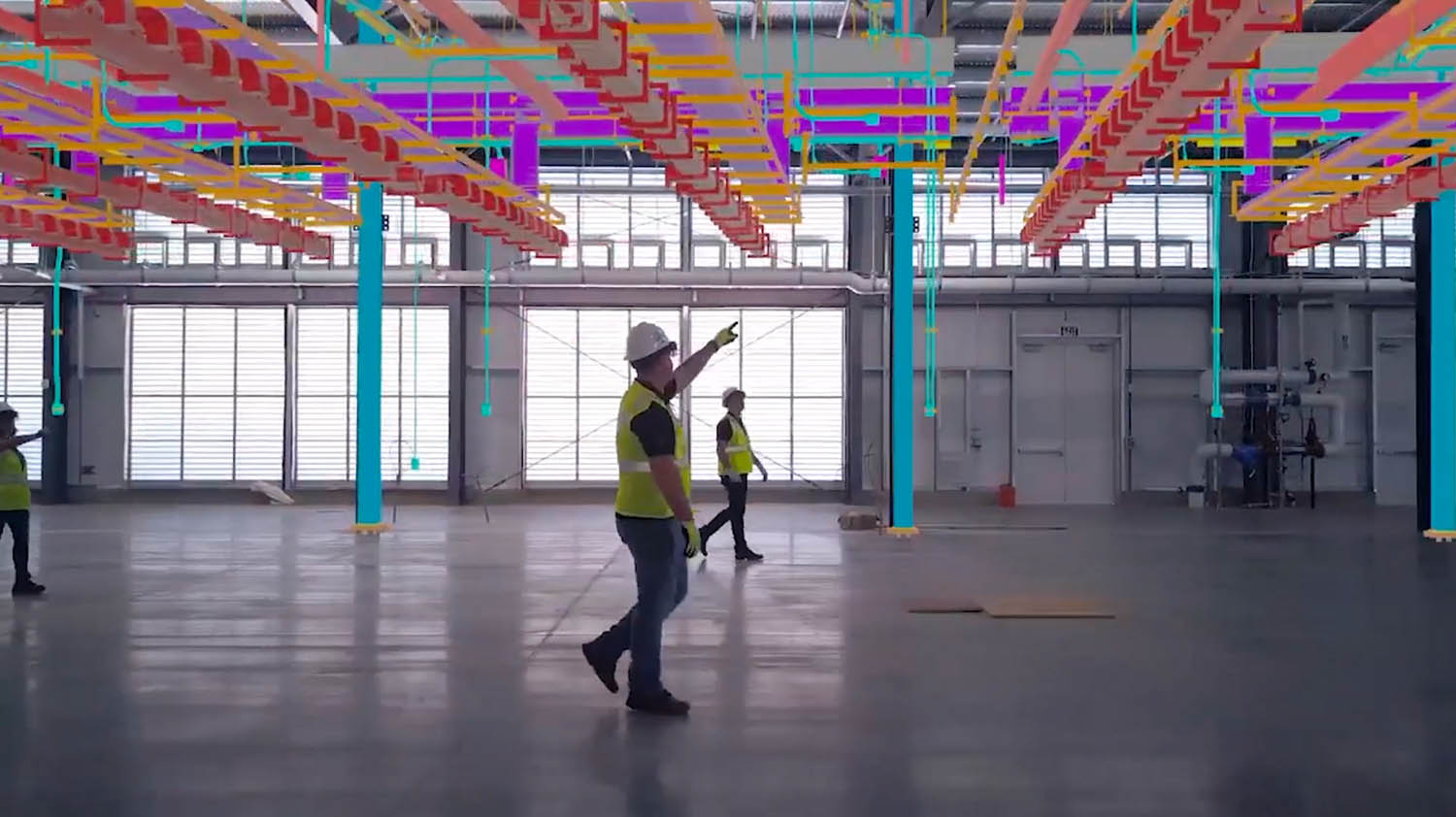

Once you have a full 3D augmented reality device it is possible to project 3D BIM models onto your surroundings at scale. You can selectively look at the HVAC ducting, the electrical conduit runs, the sprinkler systems etc, and turn everything on and off selectively whilst walking through a full-size model.

Then you can bring in remote based colleagues to view the model and effectively hold an MS Teams or Google Meet call to discuss what you all are seeing. That is demonstrably a considerable time and cost saving, plus companies don’t need to send their experts out all over the country for a series of single visits. It’s possible to visit multiple sites in a day from a central location.

AR is also a more adoptable technology by a wider team than say VR has been. Because you carry on seeing the world around you, you don’t feel isolated, you are not concerned by trip hazards etc, so it is a more comfortable experience, hence a wider group are happy to adopt and use the technology, even senior executives who previously have been reticent to try VR devices.

The first time I took a mixed reality device into a company, one of the very senior guys came into the room to see what was going on, and immediately wanted to try it. We put a large scale 3D model into the space in the boardroom and he was able to walk up to it and review the design, plus he could turn to his team and discuss the design. It meant he was completely comfortable with the process and didn’t feel isolated.

Barriers to mainstream

Compared to VR headsets, AR headsets are still expensive. I asked Keith what will drive adoption of AR?

Keith Russell: There’s a great difference between the adoption of an enterprise device and a consumer device. With a consumer device you are convincing customers to spend their own money for a set of features. For an enterprise device it is different. What I mean by that is, if your day-to-day job could be made easier by using an AR device, and the company is going to purchase it for you, and that company is going to train you on how to use it, you’re probably going to adopt it. Therefore, the cost of the device is only relevant to the company purchasing it on your behalf, and if they can see an immediate return on that investment it is an easy win all round.

The enterprise world is adopting AR en masse. For example, in production environments we’re seeing AR deliver worker instructions and train workers on the assembly line, showing them how to assemble a set of parts, which sequence the seals and washers go on, the torque settings for the bolts or the sequence that you have to put these cables in, all via content rich AR instructions.

Also it’s not just about the device, it’s about the solution that solves a problem or saves a cost. Users shouldn’t keep fixating on the device, but look at the use cases and the solution.

Our approach at Magic Leap is to reach out to companies and ask what problems we can help them with. How can a content rich AR experience enhance and augment their workforce or their design teams? Can we bring together teams in a 3D collaborative meeting environment where you can discuss ideas and concepts with co-presence?

Those users also want that solution to connect to their back-end systems. It has to connect into their device manager, it has to connect into the Wi-Fi and IT systems, it has to be an enterprise ready solution.

Currently I still see adopters worrying about the form factor of the device, but forgetting that it’s going to evolve. Magic Leap has already hinted that our next device will be lighter, with a bigger field of view, because hardware will always improve. As the form factor evolves, the device itself is not what users should be concerned about because they are buying into that total solution.

Conclusion

The augmented reality solutions that have been developed to date have obviously found a home in more practical engineering and construction firms, requiring information at the point of need. Despite the drawbacks of the current generation of AR glasses, they are good enough for fieldwork. One only has to look at Trimble’s integration of HoloLens and a hard hat, although these are far from commonplace on building sites today.

It seems we need some new magical technologies and more billions spent before AR goes mainstream in common usage and here it might well be Apple creating glasses to extend its iPhone ecosystem. But as with all Apple products, these by definition will not be cheap.

Hopefully the technology developed will see commodity AR glasses in the next five years, probably the development being paid for by one of this decade’s popular social media platform companies.

I think it’s especially interesting thinking of 5G as being such an essential tethering technology to enable lightweight low power mobile devices, which can summon infinite computer power, wherever you are. Well, in saying that, now that I’m living in the Welsh countryside, I actually might have to wait a couple of decades.