With Veras, EvolveLab was the first software firm to apply AI image generation to BIM. Martyn Day talked with company CEO Bill Allen about the latest AI tools in development, including an AI assistant to automate drawings.

Nothing has shown the potential for AI in architecture more than generative AI image generators such as Midjourney, DALL-E, and Stable Diffusion.

Through the simple entry of text (a prompt) to describe a scene, early experimenters produced some amazing work for early-stage architectural design. These tools have increased in capability and moved beyond learning from large databases of images to working from bespoke user input, such as hand-drawn sketches, to increase repeatability and enable design evolution. With improved control and predictability, AI conceptual design and rendering are becoming mainstream.

With early text-based generative AI, the output took new cues from geometry modelled with traditional 3D design tools. The first developer to integrate AI image generation with traditional BIM was Colorado-based developer, EvolveLab. The company’s Veras software brought AI image generation and rendering into Revit, SketchUp, Forma, Vectorworks and Rhino. This forced the AI to generate within the constraints of the 3D model and bypassed a lot of the grunt work and skills related to using traditional architectural visualisation tools.

Find this article plus many more in the July / August 2024 Edition of AEC Magazine

Veras uses 3D model geometry as a substrate guide for the software. The user then adds text input prompts and preferences, and the AI engine quickly generates a spectrum of design ideas.

Veras lets the user control how far the AI overrides BIM geometry and materials with simple sliders like ‘Geometry Override’, ‘Creativity Strength’ and ‘Style Strength’. The higher the setting, the less the output will adhere to the core BIM geometry. There are additional toggles to help, such as interior, nature, atmosphere and aerial view. But even with the same settings you can generate very different ideas with each iteration, which is great for ideation.

Once you have decided on a design, you can refine it further by selecting one of the outputs as a ‘seed image’. This allows more subtle changes, such as the colour of glass or materials, to be made with user prompts but without the radical design jumps.

A recent addition is the ability to create a selection within an image for specific edits, like changing the landscaping or floor material, or regenerating a single façade. This is useful if starting from a photograph of a site, as the area for ideation can be selected.

Drawing automation

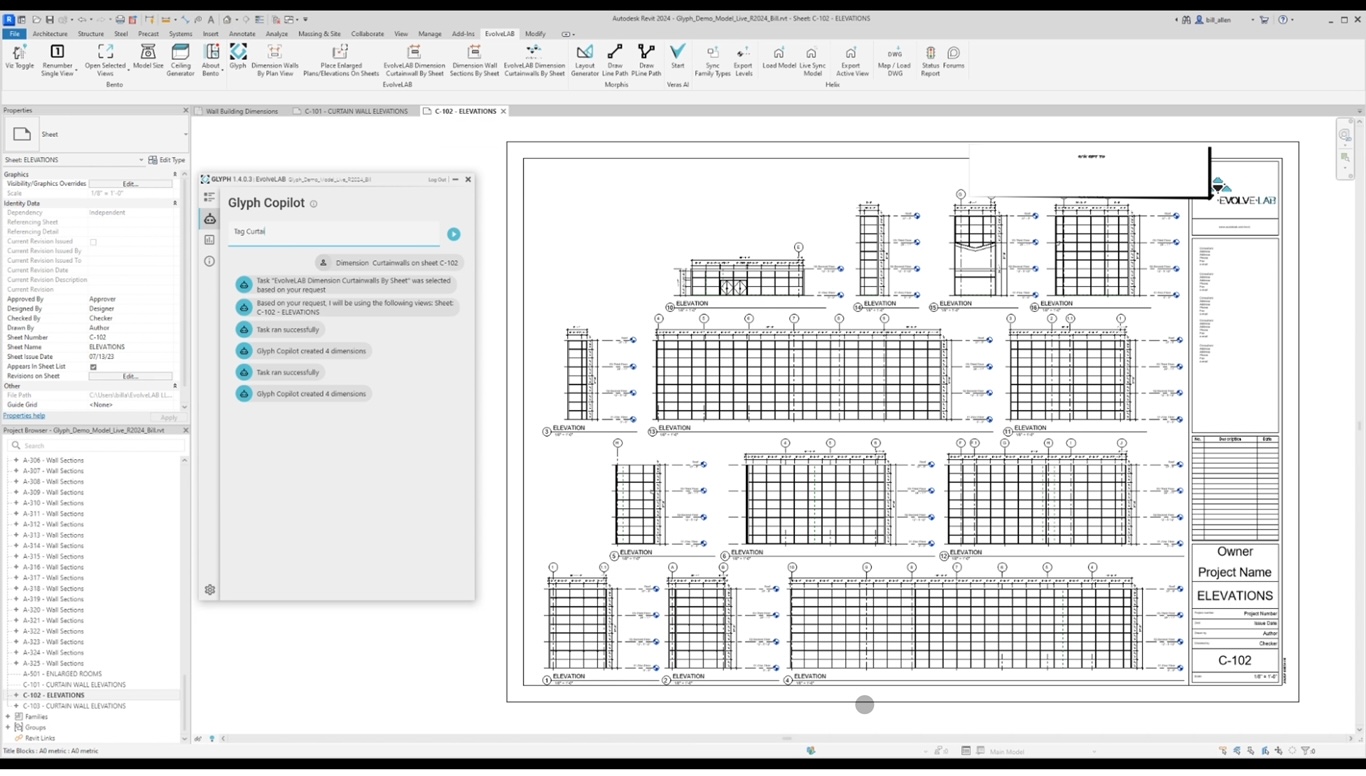

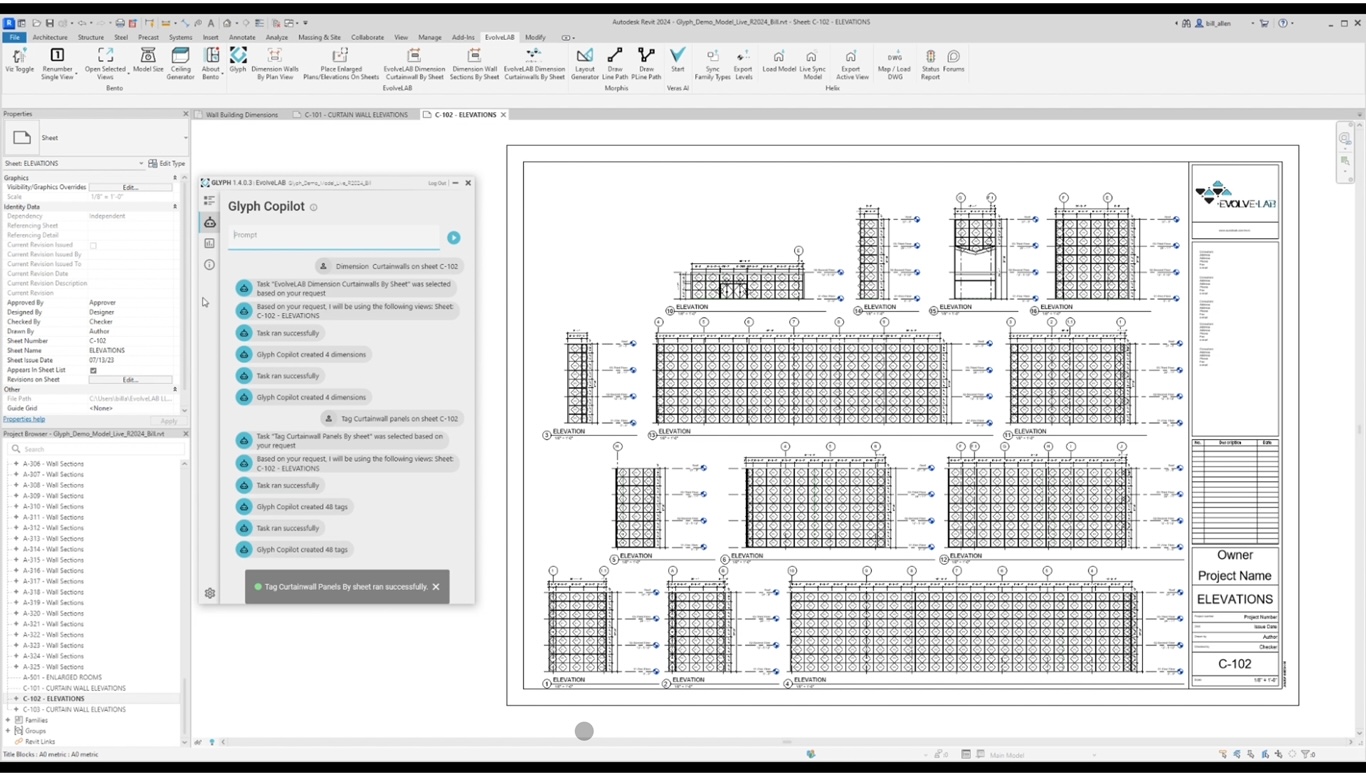

EvolveLab is also working on Glyph Co-Pilot, an AI assistant for its drawing automation tool, Glyph, that uses ChatGPT to help produce drawings.

Glyph is a Revit add-in that can perform a range of ‘tasks’ including create (automate views), dimension (auto dimension), tag (automate annotation), import (into sheets) and place (automate sheet packing).

These tasks can be assembled into a ‘bundle’ which ‘plays’ a customised collection of tasks with a single click. One can automate all the elevation views, auto-dimension the drawings, auto-tag the drawings, automatically place them in sheets and automatically arrange the layout. Within the task structure things can get complex, and users can define at a room, view or sheet level just what Glyph does. Once mastered, Glyph can save a lot of time in drawing creation, but there are a lot of clicks to set this up.
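To make the bundle idea concrete, here is a minimal Python sketch of tasks chained into a collection that runs in order from a single call. It is an illustration only, not Glyph's actual API: the `Bundle` class, the `make_task` helper and the log strings are all hypothetical, and the tasks merely record what they would do rather than touching a Revit model.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for a drawing task: it receives a running log
# and records what it would have done to the model.
Task = Callable[[List[str]], None]


def make_task(action: str, target: str) -> Task:
    """Build a task that logs one action, e.g. 'dimension' on 'elevation views'."""
    def run(log: List[str]) -> None:
        log.append(f"{action}: {target}")
    return run


@dataclass
class Bundle:
    """An ordered collection of tasks that is 'played' in one go (assumed model)."""
    name: str
    tasks: List[Task] = field(default_factory=list)

    def play(self) -> List[str]:
        log: List[str] = []
        for task in self.tasks:  # tasks run strictly in the order given
            task(log)
        return log


# The five task types named in the article, assembled into one bundle.
elevation_set = Bundle(
    name="Elevation set",
    tasks=[
        make_task("create", "elevation views"),
        make_task("dimension", "elevation views"),
        make_task("tag", "elevation views"),
        make_task("import", "views into sheets"),
        make_task("place", "sheet layout"),
    ],
)

for line in elevation_set.play():
    print(line)
```

The order of the list matters in this sketch: views have to exist before they can be dimensioned, tagged and placed, which is presumably why a bundle is described as something that is ‘played’ rather than a loose set of options.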

With Glyph Co-Pilot, currently in closed beta, the development team has fused a ChatGPT front end to the Glyph experience, as Allen explains. “Users can write, ‘dimension all my floorplans for levels 1 through 16 and elevate all my curtain walls on my project’ and it will go off and do it.

“I can prompt the application by asking it to elevate rooms 103 through 107, or create an enlarged plan of rooms 103 through 107. Glyph Co-Pilot understands that I don’t have to list all the rooms in-between,” he says.
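The range handling Allen describes can be illustrated with a few lines of Python. This is a deliberately simple sketch under stated assumptions: Glyph Co-Pilot relies on ChatGPT to interpret prompts, whereas the hypothetical `expand_room_range` function below uses a plain regular expression, so it shows only what ‘103 through 107’ should expand to, not how the product does it.

```python
import re
from typing import List


def expand_room_range(prompt: str) -> List[int]:
    """Return every room number covered by phrases like '103 through 107'."""
    rooms: List[int] = []
    # Match "<number> through <number>" or "<number> to <number>".
    for start, end in re.findall(r"(\d+)\s+(?:through|to)\s+(\d+)", prompt):
        low, high = sorted((int(start), int(end)))
        rooms.extend(range(low, high + 1))  # include both end points
    return rooms


print(expand_room_range("elevate rooms 103 through 107"))
# prints [103, 104, 105, 106, 107]
```

A language model front end earns its keep on the cases a pattern like this cannot cover, such as ‘all the rooms on the east wing’, where the range has to be inferred from the model rather than read off the prompt.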

Co-Pilot is currently limited to Revit but in the future it will be possible to plug into SketchUp, Archicad, Rhino etc. This means one could get auto-drawings direct from Rhino, something that many architects are asking for.

But how do you do this, when Rhino lacks the rich metadata of Revit, such as spaces to indicate rooms? “There’s inferred metadata,” explains Allen. “Rhino has layers and that’s typically how people organise their information and there’s obviously properties too. McNeel is also starting to build out its BIM data components. I expect some data hierarchy that will start to manifest itself within the platform which we can leverage.”

The future of AI

“AI is a bit of a buzzword,” admits Allen. “That means we see firms and products that may have been around for eight to ten years, suddenly claim to be AI applications. We are honest and we offer some AI apps, some prescriptive.”

For example, EvolveLab also develops Morphis for generative design, Helix, a conversion tool for SketchUp and AutoCAD, and Bento, a smorgasbord of Revit productivity apps, none of which are branded AI.

But true AI tools are developing at an incredible pace and, while Allen finds it hard to envision where AI will lead, he is clear that it will have a transformative impact on AEC.

“AI is going to be very disruptive,” he says. “Pre the advent of AI, I did this talk at an AU / Yale, called ‘The Future of BIM will not be BIM – and it’s coming faster than you think’.

“At the time I felt I had a pretty good idea where the industry was going. Now, with the advent of AI, I have no idea where the hell this train is going!

“I feel like the technology is so crazy, every step of the journey, I’m shocked at what is possible, and I didn’t anticipate or expect the rate of development.

“There’s a really good book from Jay Samit called ‘Disrupt You’. In it, the author talks about the value chain, and markets and where money flows. What happens is you get some kind of disruption, like a Netflix that disrupts Blockbuster or Uber that disrupts the taxi market. And AI is definitely doing that.

“A good example is Veras disrupting the rendering market. Users don’t have to go and grab a material and apply it or drop in a sky for their model in Photoshop. You just change the prompt, and you get this beautiful image.

“I feel like [AI] is going to change the market. I do feel like jobs will be lost. But at the end of the day, the AI still needs us, humans, to give it direction. It’s still just a tool like Revit, Rhino, or Grasshopper is a tool. AI offers the maximum amount of efficiency and productivity benefits, and it will disrupt jobs and the marketplace. But you’re always going to have people driving the tools.”

About EvolveLab

EvolveLab started off exclusively offering consulting, graduating to services. Over time the company saw itself as ‘the mechanic that worked on everyone else’s car’, carrying out all sorts of tasks, like creating dashboards for BIM, and building apps to meet the needs of clients. Over the last few years, the company has made an intentional effort to flesh out these apps, productise them and be more like a software developer. It’s a business model that works. The company still does management services for clients but its focus, especially this year, has been on the product side.

The company now has five core applications: Veras for AI rendering, Glyph for ‘autodrawings’, Morphis for generative design, Helix, a conversion tool for SketchUp and AutoCAD, and Bento, a smorgasbord of Revit productivity apps. The company will soon launch Glyph Co-Pilot, the AI-powered drawing automation assistant.